About the runtime engines

Nuance Mix offers several engines, each with its own API, to help you build and run your Mix applications. These include engines for recognition, voice synthesis, NLU, and dialog.

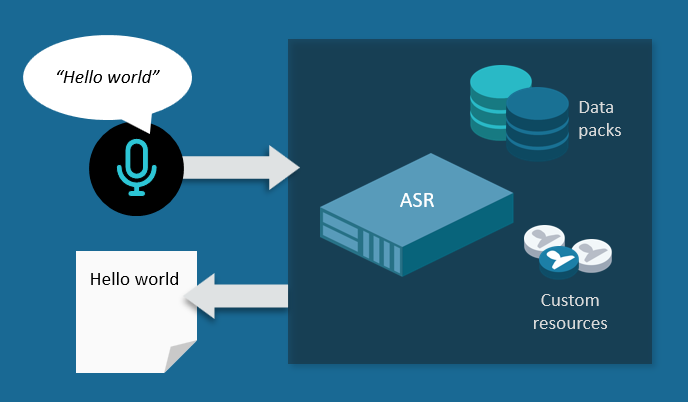

ASRaaS recognition

ASR as a Service is a Nuance recognition engine that transcribes speech into text in real time. It receives a stream of speech audio and streams back text output ranging from a simple transcript to a search-optimized lattice of information.

You can choose to receive the best hypothesis of the final result, or partial results that ASRaaS transmits continually as it recognizes the audio. ASRaaS formats the results using the basic rules for each language, and you may add your own formatting rules.
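The difference between partial and final results can be pictured with a minimal Python sketch. It does not call ASRaaS: the `Result` class, its `is_final` flag, and the `consume` function are hypothetical stand-ins for whatever the real API streams back.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Result:
    # Hypothetical stand-in for one streamed recognition result.
    text: str
    is_final: bool


def consume(results: Iterable[Result]) -> Optional[str]:
    """Print partial results as they arrive and return the final transcript."""
    final_text = None
    for result in results:
        if result.is_final:
            final_text = result.text
        else:
            # Partial results are provisional and may be revised later.
            print(f"partial: {result.text}")
    return final_text


# Simulated stream: two partial hypotheses followed by the final result.
stream = [
    Result("I'd like", False),
    Result("I'd like a large", False),
    Result("I'd like a large coffee.", True),
]
transcript = consume(stream)
```

An application that only needs the finished transcript would ignore the partial results; one that shows live captions would display each partial as it arrives.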

ASRaaS works with Nuance data packs to provide the underlying transcription functionality for many languages. Based on neural networks, ASRaaS data packs include acoustic and language models.

You may customize the language model in the data pack using resources such as domain language models (DLMs) and wordsets.

- A DLM extends the language model for a specific environment. You build DLMs in Mix.nlu using sentences from your environment. A DLM may also include entities, which are collections of specific terms.

- Wordsets are another way to improve and specialize recognition. In ASRaaS, a wordset is a list of words or phrases that extends the terms in the data pack or a DLM. You may use simple source wordsets or compile them ahead of time for better performance at runtime.
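As an illustration, a source wordset can be thought of as a small JSON document. The sketch below assumes a layout in which each key names an entity and each value lists terms with a `literal` form and optional `spoken` forms. The entity name `PLACES`, the terms, and the `literals` helper are made up for the example, so check the ASRaaS reference for the exact schema.

```python
import json

# Assumed layout: entity name -> list of terms. Each term has a written
# form ("literal") and, optionally, one or more pronunciations ("spoken").
wordset = {
    "PLACES": [
        {"literal": "La Jolla", "spoken": ["la hoya"]},
        {"literal": "Beaulieu", "spoken": ["bewly"]},
        {"literal": "Fordoun"},
    ]
}


def literals(ws: dict, entity: str) -> list:
    """Return the written forms listed for one entity (hypothetical helper)."""
    return [term["literal"] for term in ws.get(entity, [])]


# Serialize the wordset to the JSON text an application would supply.
wordset_json = json.dumps(wordset, indent=2)
```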

ASRaaS can be used on its own to record conversations and produce transcripts of events, but it’s often used in conjunction with NLUaaS to generate semantic understanding. ASRaaS transcribes the words spoken by the user and then passes the text to NLUaaS, which extracts the underlying meaning using its semantic engine.

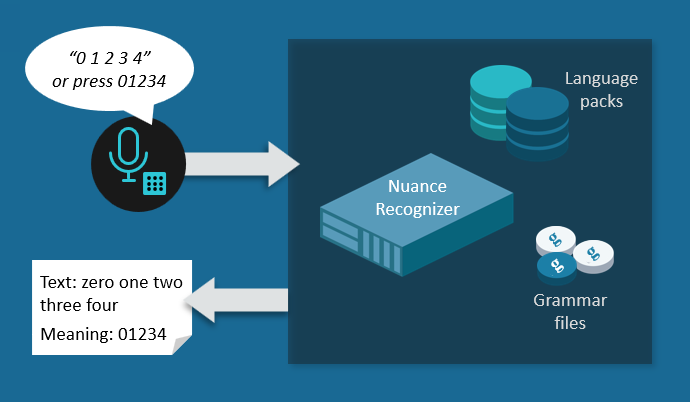

NRaaS recognition

Nuance Recognizer as a Service is a Nuance speech recognition service for constrained grammar-based vocabularies that allow natural speech in response to open-ended questions. The service understands and interprets spoken and touchtone input to help deliver seamless self-service.

Constrained grammar recognition engines such as NRaaS are especially useful for:

- Recognizing alphanumeric inputs such as account numbers and tracking numbers.

- Small-scope applications designed for interactions with a small set of words and phrases.

- Directed dialogs that guide a caller through a menu tree, used either for the caller’s first interaction or as the basis of the system’s conversational design.

Using a grammar means that NRaaS performs both word recognition and semantic understanding. Unlike ASRaaS, it returns not only the words spoken by the user but also their underlying meaning as defined in the grammar.
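The idea that a grammar yields both the words and their meaning can be shown with a toy example in plain Python. This is not a Nuance grammar format or the NRaaS API: the `GRAMMAR` table, the `MENU` key, and the `recognize` function are hypothetical and stand in for a real grammar only conceptually.

```python
from typing import Optional

# Toy "grammar": each accepted phrase maps to the meaning it carries.
GRAMMAR = {
    "billing": {"MENU": "billing"},
    "my bill": {"MENU": "billing"},
    "technical support": {"MENU": "support"},
    "help with my internet": {"MENU": "support"},
}


def recognize(utterance: str) -> Optional[dict]:
    """Return the matched words and their meaning, or None if out of grammar."""
    # Normalize case and whitespace before looking up the phrase.
    words = " ".join(utterance.lower().split())
    meaning = GRAMMAR.get(words)
    if meaning is None:
        return None
    return {"literal": words, "meaning": meaning}
```

Two different phrases ("billing" and "my bill") resolve to the same meaning, which is what lets a directed dialog branch on the meaning rather than on the exact wording. Input outside the grammar produces no result at all.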

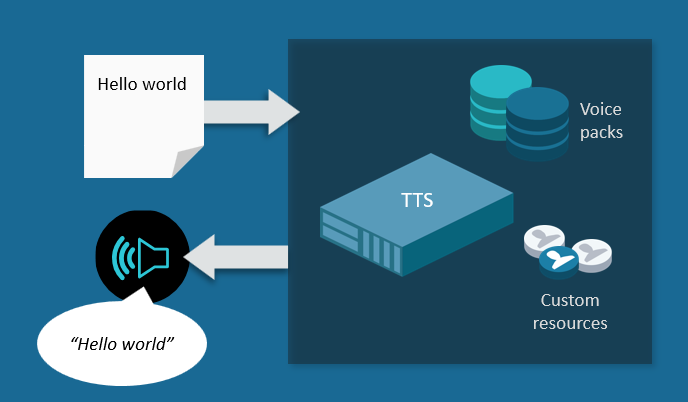

TTSaaS voice synthesis

TTS as a Service is a text-to-speech engine powered by Nuance Vocalizer for Cloud (NVC) and Nuance Vocalizer for Enterprise (NVE). It works with Nuance voice packs to synthesize speech from plain text, from Speech Synthesis Markup Language (SSML), or from Nuance control codes.

TTSaaS receives input as text, SSML, or a combination of text and Nuance control codes. It returns synthesized speech as an audio stream or a single audio package. You choose the Nuance voice to render the speech from hundreds of voices available in different genders, languages, and locales.
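To make the SSML input option concrete, the following sketch builds a small SSML document with Python's standard `xml.etree.ElementTree` module. It only produces the markup; it does not send anything to TTSaaS. The `build_ssml` helper, the sample sentences, and the `en-US` language tag are assumptions for illustration, and the set of SSML elements TTSaaS actually supports should be checked in its documentation.

```python
import xml.etree.ElementTree as ET


def build_ssml(before: str, pause_ms: int, after: str) -> str:
    """Build an SSML string with a pause between two pieces of text."""
    speak = ET.Element("speak", {"version": "1.0", "xml:lang": "en-US"})
    speak.text = before
    # <break> is the standard SSML element for inserting a pause.
    pause = ET.SubElement(speak, "break", {"time": f"{pause_ms}ms"})
    # Text following the pause is stored as the tail of the <break> element.
    pause.tail = after
    return ET.tostring(speak, encoding="unicode")


ssml = build_ssml("Your order has shipped.", 500, " It should arrive on Friday.")
```

Plain text input would carry the same words without the pause instruction, which is the kind of control that markup adds.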

Apart from the mandatory voice resource, you may also include synthesis resources such as user dictionaries, ActivePrompt databases, rulesets, and prerecorded audio files.

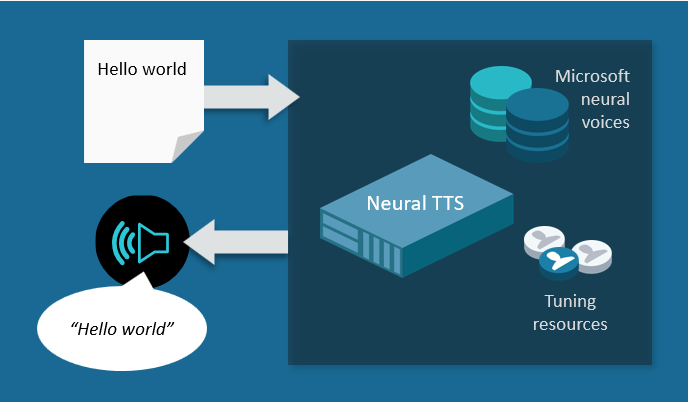

Neural TTSaaS voice synthesis

Neural TTS as a Service offers text-to-speech services powered by Nuance Vocalizer for Cloud and the Text-to-Speech feature of Microsoft Azure Cognitive Services for Speech. Working with Microsoft neural voices, Neural TTSaaS synthesizes speech from plain text or SSML input.

Neural TTSaaS accepts text or SSML input and returns an audio stream of synthesized speech. You choose the Microsoft neural voice to render the speech from hundreds of available voices.

You may include synthesis resources such as custom lexicons and recorded audio prompts created using Microsoft utilities.

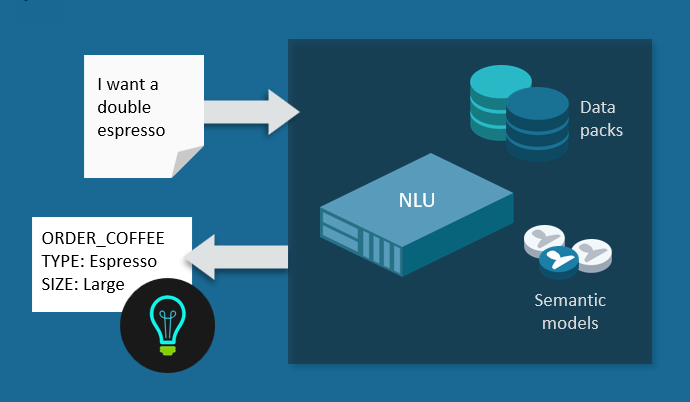

NLUaaS understanding

NLU as a Service is a Nuance semantic understanding engine that provides an interpretation of the user’s input in terms of an intent. An intent is something the user wants to do.

NLUaaS works with a data pack for many languages and locales, and a semantic language model customized for your application. It can also benefit from interpretation aids such as additional language models and wordsets to improve understanding in specific environments or businesses.

You build semantic language models, or natural language understanding (NLU) models, in Mix.nlu. As described in NLU, you use Mix.nlu to input sample sentences that are representative of what your users say, and then annotate them to show how the sentences map to intended actions.
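Annotated samples can be pictured as sentences paired with an intent and with marked spans for entities. The sketch below is a conceptual data model only, not the Mix.nlu export format: the `Annotation` and `Sample` classes, the `annotate` helper, and the intent and entity names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Annotation:
    entity: str  # hypothetical entity name, such as SIZE
    start: int   # index of the first character of the span
    end: int     # index one past the last character of the span


@dataclass
class Sample:
    text: str
    intent: str
    annotations: list = field(default_factory=list)

    def entity_values(self) -> dict:
        """Map each annotated entity to the text it covers."""
        return {a.entity: self.text[a.start:a.end] for a in self.annotations}


def annotate(text: str, intent: str, **entities: str) -> Sample:
    """Create a sample, locating each entity value inside the sentence."""
    spans = []
    for name, value in entities.items():
        start = text.index(value)  # raises ValueError if the value is absent
        spans.append(Annotation(name, start, start + len(value)))
    return Sample(text, intent, spans)


samples = [
    annotate("I'd like a large coffee", "ORDER_DRINK", SIZE="large", DRINK_TYPE="coffee"),
    annotate("cancel my order", "CANCEL_ORDER"),
]
```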

You may also extend your semantic model with wordsets containing additional terms used in your environment. As in ASRaaS, you may use source wordsets or compile them ahead of time for faster runtime performance.

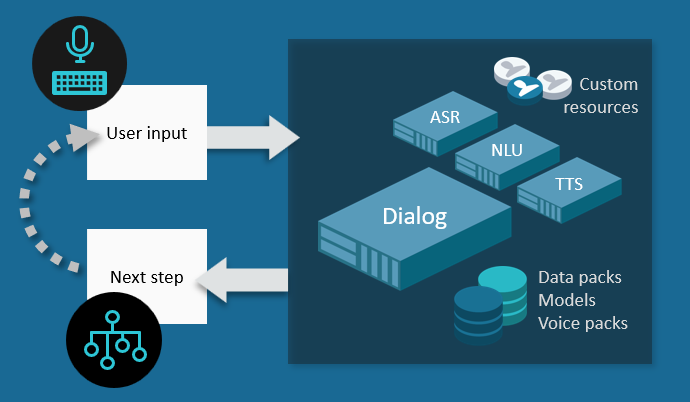

DLGaaS conversation

Dialog as a Service is a Nuance conversation engine that orchestrates the dialog between an end user and a natural language agent, conversing either in text or by speech. It simulates a real-life exchange between two people, providing choices at each step and remembering the ongoing context of the conversation.

DLGaaS works with a dialog model created with the Mix.dialog tool and a semantic model created with Mix.nlu. For speech conversations, it also calls ASRaaS for speech recognition and TTSaaS or Neural TTSaaS for voice synthesis. As described in Dialog, you build the dialog model on top of the semantic NLU model by defining what should happen at each step of the conversation given the user’s intent.
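Defining what happens at each step given the user's intent can be sketched as a small transition table. This is a conceptual illustration rather than the Mix.dialog model format or the DLGaaS API: the state names, intents, prompts, and the `step` function are hypothetical.

```python
# Toy dialog model: (current state, user intent) -> (next state, system prompt).
DIALOG_MODEL = {
    ("start", "ORDER_DRINK"): ("confirm", "Got it. Shall I place the order?"),
    ("confirm", "YES"): ("done", "Your order is placed."),
    ("confirm", "NO"): ("start", "Okay, what would you like instead?"),
}

FALLBACK_PROMPT = "Sorry, I didn't get that."


def step(state: str, intent: str) -> tuple:
    """Advance the conversation one turn; stay in place on an unexpected intent."""
    return DIALOG_MODEL.get((state, intent), (state, FALLBACK_PROMPT))


# Walk through a short conversation, carrying the state from turn to turn.
state = "start"
for intent in ["ORDER_DRINK", "YES"]:
    state, prompt = step(state, intent)
```

Carrying `state` forward between turns plays the role of the ongoing conversation context: the same intent can lead to different responses depending on where the conversation stands.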
