Message actions

A message action is triggered by a message node and contains a message to be played to the user. Message content can be provided in one or more of the following modalities, as configured in Mix.dialog:

- Text and SSML that can be rendered using Text-to-speech: The nlg field provides backup text as a fallback for speech outputs synthesized using TTSaaS. This is useful if you want to perform your own orchestration with TTSaaS. For more information about how to generate TTSaaS speech audio, see Generating synthesized speech output. Note that the TTS audio for the message is not included with the message. It is provided in the audio field of the StreamOutput messages.
- Text to be visually displayed to the user: The visual field provides text that can be displayed, for example, in a chat or in a web application. This field supports rich text format, so you can include HTML markup, URLs, etc.
- Recorded audio file to play to the user: The audio field of a message provides a path to a recorded audio file in the client app files that can be played to the end user. The uri field provides a local path in the client application to the file, while the text field provides text that can be used as backup TTS if the audio file is missing or cannot be played.

Generally, only one of nlg or audio has content, not both.
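As a rough illustration of the fields described above, a client could read the text for a single modality as follows. This is a minimal sketch that assumes the message has already been decoded into a Python dict shaped like the JSON payloads in this document; the helper name modality_text is hypothetical and not part of any Mix API.

```python
def modality_text(message: dict, modality: str) -> str:
    """Join the text of every entry in one modality ("nlg", "visual", or "audio")."""
    entries = message.get(modality) or []
    return " ".join(entry["text"] for entry in entries if entry.get("text"))


# Example message with visual content only.
message = {
    "nlg": [],
    "visual": [{"text": "Hey there! Welcome to the coffee app."}],
    "audio": [],
}

shown = modality_text(message, "visual")   # text to display in a chat window
spoken = modality_text(message, "nlg")     # empty here: no nlg content was provided
```

An empty result for a modality simply means that no content was configured for it, so the client can skip that modality.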

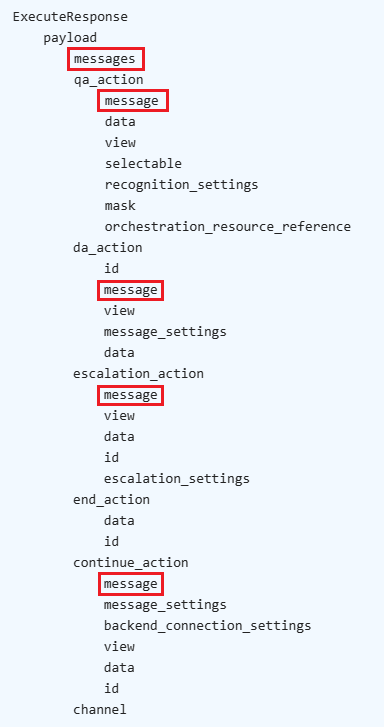

The payload of an ExecuteResponse can include standalone messages, as well as messages as part of other action types.

Here is an example of a payload with both standalone messages and a message that is part of a QA action:

    {
      "payload": {
        "messages": [{
          "nlg": [{
            "text": "Hey there! Welcome to the coffee app."
          }],
          "visual": [{
            "text": "Hey there! Welcome to the coffee app."
          }],
          "audio": []
        }],
        "qa_action": {
          "message": {
            "nlg": [{
              "text": "What can I do for you today?"
            }],
            "visual": [{
              "text": "What can I do for you today?"
            }],
            "audio": []
          }
        }
      }
    }

TTS audio and messages

If Dialog-orchestrated TTSaaS speech generation was requested by the client in a previous StreamInput message, generated TTS audio is provided in the audio field of StreamOutput messages rather than in the message action. The audio generated contains speech for a concatenation of the text of all the messages in the response. The first StreamOutput message includes an ExecuteResponse message that contains message actions. The nlg fields of these message actions contain backup text and SSML as described above.

Also related to TTS, each message includes a tts_parameters field. This field contains the TTS settings to use when orchestrating TTS on the client side.

Prerecorded audio and messages

If your application uses prerecorded audio, references to audio files appear in each of the messages within either message actions or other actions. Audio files can be retrieved and played in sequence. For details, see Providing speech response using recorded speech audio.
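The following sketch shows one way a client might decide, for each audio entry of a message, whether to play the recorded file or fall back to the backup text. It assumes the message is a decoded Python dict with uri and text fields as described earlier; the function name playback_plan, the audio_root parameter, and the sample path prompts/welcome.wav are all hypothetical.

```python
import tempfile
from pathlib import Path


def playback_plan(message: dict, audio_root: Path) -> list[tuple[str, str]]:
    """Return ("file", path) or ("tts", text) steps, in the order they should play."""
    plan = []
    for entry in message.get("audio") or []:
        uri = entry.get("uri", "")
        path = audio_root / uri
        if uri and path.is_file():
            plan.append(("file", str(path)))      # recording is available locally
        elif entry.get("text"):
            plan.append(("tts", entry["text"]))   # file missing: use backup text
    return plan


message = {
    "nlg": [],
    "visual": [],
    "audio": [{"uri": "prompts/welcome.wav", "text": "Welcome to the coffee app."}],
}

with tempfile.TemporaryDirectory() as tmp:
    # The directory is empty, so the recording is missing and the backup text is used.
    plan = playback_plan(message, Path(tmp))
```

Actual playback and TTS calls are left out; the plan only records which source would be used for each entry.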

Configuring messages

Message actions can be configured within the following Mix.dialog node types:

- Message node: In this case they are returned in the messages field of the ExecuteResponsePayload. Messages specified in a message node are returned only when a question and answer, data access, or external actions node occurs in the dialog flow.
- Question and answer node: In this case they are returned in the message field of the ExecuteResponsePayload qa_action.
- Data access node: A latency message can be defined to play while the user is waiting for a data transfer to take place, whether client-side or server-side. In these cases the message is returned in the message field of the ExecuteResponsePayload da_action or continue_action respectively.
- External actions node, transfer type: A message can be defined to play to confirm to the user that they are about to be transferred. In this case the message is returned in the message field of the ExecuteResponsePayload escalation_action.
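Because a message can arrive in several places in the payload, a client may find it convenient to gather them into one list before playing them. The sketch below assumes the payload is a decoded Python dict using the field names listed above; collect_messages and ACTION_FIELDS are hypothetical names chosen for illustration.

```python
# Action fields that can carry a single message, per the list above.
ACTION_FIELDS = ("qa_action", "da_action", "continue_action", "escalation_action")


def collect_messages(payload: dict) -> list[dict]:
    """Return standalone messages first, followed by any message inside an action."""
    messages = list(payload.get("messages") or [])
    for field in ACTION_FIELDS:
        action = payload.get(field)
        if action and action.get("message"):
            messages.append(action["message"])
    return messages


payload = {
    "messages": [{"nlg": [], "visual": [{"text": "Hey there!"}], "audio": []}],
    "qa_action": {
        "message": {
            "nlg": [],
            "visual": [{"text": "What can I do for you today?"}],
            "audio": [],
        }
    },
}

ordered = collect_messages(payload)
texts = [m["visual"][0]["text"] for m in ordered]
```

Placing standalone messages ahead of the action message matches the order in which the client is expected to play them.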

Sending messages in response

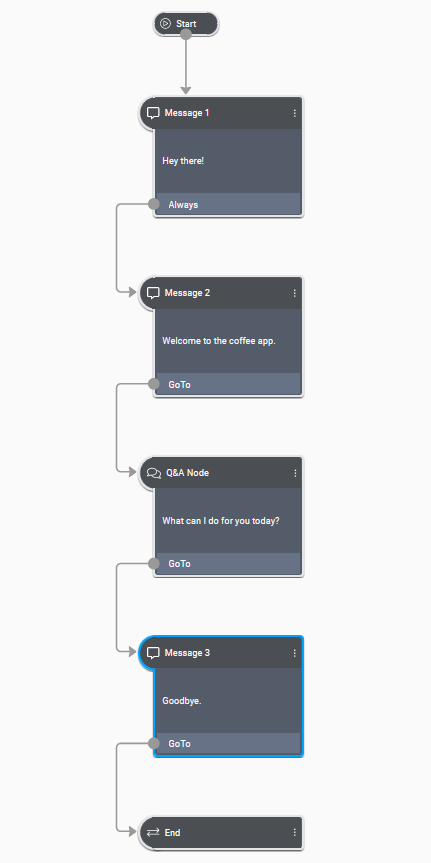

Configured messages within successive nodes are accumulated and sent only when a question and answer node, a data access node, or an external actions node occurs in the dialog flow. For example, consider the following dialog flow:

This would be handled as follows:

Step 1: The Dialog service sends an ExecuteResponse when encountering the question and answer node, with the following messages:

    # First ExecuteResponse
    {
      "payload": {
        "messages": [{
          "nlg": [],
          "visual": [{
            "text": "Hey there!"
          }],
          "audio": []
        }, {
          "nlg": [],
          "visual": [{
            "text": "Welcome to the coffee app."
          }],
          "audio": []
        }],
        "qa_action": {
          "message": {
            "nlg": [],
            "visual": [{
              "text": "What can I do for you today?"
            }],
            "audio": []
          }
        }
      }
    }

Step 2: The client application plays the message content to the user in sequence in the specified modalities. In this example, visual text is specified, so the messages are displayed to the user as text, for example in a chat window. The client plays the two message node messages first, and then plays the message enclosed in the other node type, in this case a question and answer node.

Step 3: The client app collects user input and sends an ExecuteRequest with the user input.

Step 4: The Dialog service sends an ExecuteResponse when encountering the end node, with the following message action:

    # Second ExecuteResponse
    {
      "payload": {
        "messages": [{
          "nlg": [],
          "visual": [{
            "text": "Goodbye."
          }],
          "audio": []
        }],
        "end_action": {}
      }
    }
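The steps above can be sketched from the client's point of view. This is an illustrative outline only: it assumes each ExecuteResponse payload has been decoded into a Python dict, handles just the visual modality and the two action types from this example, and uses the hypothetical name handle_response.

```python
def handle_response(payload: dict) -> tuple[list[str], str]:
    """Return the visual lines to display, in order, and the client's next step."""
    pending = list(payload.get("messages") or [])
    qa_action = payload.get("qa_action")
    if qa_action and qa_action.get("message"):
        pending.append(qa_action["message"])   # played after the standalone messages

    lines = [
        entry["text"]
        for message in pending
        for entry in message.get("visual") or []
    ]

    # end_action is an empty object, so test for the key rather than its truthiness.
    if "end_action" in payload:
        next_step = "end"
    elif qa_action is not None:
        next_step = "collect_input"
    else:
        next_step = "other"
    return lines, next_step


first = {
    "messages": [
        {"nlg": [], "visual": [{"text": "Hey there!"}], "audio": []},
        {"nlg": [], "visual": [{"text": "Welcome to the coffee app."}], "audio": []},
    ],
    "qa_action": {
        "message": {
            "nlg": [],
            "visual": [{"text": "What can I do for you today?"}],
            "audio": [],
        }
    },
}
second = {
    "messages": [{"nlg": [], "visual": [{"text": "Goodbye."}], "audio": []}],
    "end_action": {},
}

first_lines, first_step = handle_response(first)
second_lines, second_step = handle_response(second)
```

After the first response the client would collect user input and send it in an ExecuteRequest; after the second it would stop, since the dialog has reached the end node.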

Using variables in messages

Messages can include variables. For example, in a coffee application, you might want to personalize the greeting message:

“Hello Miranda ! What can I do for you today?”

Variables in messages are configured in Mix.dialog. The variables are resolved at runtime by the Dialog engine and then filled in as part of the message contents returned to the client application.

For example:

    {
      "payload": {
        "messages": [],
        "qa_action": {
          "message": {
            "nlg": [],
            "visual": [{
              "text": "Hello Miranda ! What can I do for you today?"
            }],
            "audio": []
          }
        }
      }
    }
