Setting categories

The Project Settings panel is organized into categories. Many categories are available at every level (see Setting scopes). Detailed information appears on the panel itself, to help you understand the available settings.

Most settings have a global default value, which you can override at various levels (see Setting scopes for more information on how settings are inherited from one level to another). A Reset icon identifies setting overrides. Click this icon to revert a modified setting to the applicable higher-level default.
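
The inheritance described above can be pictured with a minimal sketch. The scope names (node, channel, global) and the setting name `max_nomatch` are assumptions chosen for illustration; they are not Mix identifiers, and the actual scopes are described in Setting scopes.

```python
def resolve(name, node, channel, global_defaults):
    """Return the value of a setting from the most specific scope that defines it.

    Each argument after `name` is a plain dict of overrides; this layout is
    hypothetical and only illustrates the lookup order.
    """
    for scope in (node, channel, global_defaults):
        if name in scope:
            return scope[name]
    raise KeyError(name)


# Hypothetical values: a global default and a channel-level override.
global_defaults = {"max_nomatch": 3}
channel = {"max_nomatch": 2}
node = {}

print(resolve("max_nomatch", node, channel, global_defaults))  # channel override wins

# Resetting the override falls back to the higher-level default.
del channel["max_nomatch"]
print(resolve("max_nomatch", node, channel, global_defaults))
```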

Answers with Copilot

Note:

Answers and Builder are in preview. For more information, see Copilot in Mix (preview).

Specify a content source and enable Answers, a Copilot feature, in Mix.

The content source must use the HTTPS protocol (the URL must start with “https://”). It must be a publicly accessible website. Make sure Bing can find and index this website, and that the information this site provides is accurate and up to date. Don’t use sites with forums or comments from end users: this can reduce the relevancy of answers. Don’t include query strings, more than two levels of depth, special characters, or the character " in the URL.
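
As a rough pre-check before entering a URL, the constraints above could be tested with a sketch like the following. It is not part of Mix. Two interpretations are assumptions: "levels of depth" is taken to mean path segments, and "special characters" is taken to mean anything outside letters, digits, `.`, `_`, `~`, and `-` in a path segment. The sketch cannot tell whether the site is public or indexed by Bing.

```python
import re
from urllib.parse import urlsplit

MAX_DEPTH = 2  # assumption: "levels of depth" means path segments
# Assumption: only unreserved URL characters count as non-special.
SAFE_SEGMENT = re.compile(r"^[A-Za-z0-9._~-]+$")


def content_source_problems(url):
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme.lower() != "https":
        problems.append("URL must start with https://")
    if not parts.netloc:
        problems.append("URL must include a host name")
    if parts.query or "?" in url:
        problems.append("URL must not include a query string")
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) > MAX_DEPTH:
        problems.append("URL must not go deeper than two levels")
    if '"' in url or any(not SAFE_SEGMENT.match(s) for s in segments):
        problems.append("URL must not contain special characters")
    return problems


print(content_source_problems("https://www.example.com/docs/faq"))
print(content_source_problems("http://www.example.com/help?id=3"))
```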

Conversation settings

Set how many times the application will try to collect the same piece of information (intent or entity) before giving up.

Collection settings

Set the low-confidence threshold, below which the application rejects a collected utterance and throws a nomatch event; the high-confidence threshold, above which the application doesn't need to prompt for confirmation; the number of nomatch events before the application throws a maxnomatch event; and how many times the application will try to collect the same piece of information after failing to detect any response from the user.
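
The way the two thresholds and the nomatch limit interact can be sketched as follows. The threshold values, the function names, and the handling of scores exactly equal to a threshold are assumptions made for illustration. Whether a mid-range score actually triggers a confirmation also depends on the confirmation strategy, which this sketch ignores.

```python
# Hypothetical values; the actual defaults appear in the Project Settings panel.
LOW_CONFIDENCE = 0.3
HIGH_CONFIDENCE = 0.8
MAX_NOMATCH = 3


def classify(confidence, low=LOW_CONFIDENCE, high=HIGH_CONFIDENCE):
    """Map a recognition confidence score to 'nomatch', 'confirm', or 'accept'."""
    if confidence < low:
        return "nomatch"   # rejected: below the low-confidence threshold
    if confidence > high:
        return "accept"    # no confirmation needed: above the high-confidence threshold
    return "confirm"       # in between: a confirmation prompt may be warranted


def collect(confidences, max_nomatch=MAX_NOMATCH):
    """Replay successive attempts and report the outcome of the collection."""
    nomatch_count = 0
    for confidence in confidences:
        outcome = classify(confidence)
        if outcome != "nomatch":
            return outcome
        nomatch_count += 1
        # Assumption: the limit is reached on the max_nomatch-th nomatch event.
        if nomatch_count >= max_nomatch:
            return "maxnomatch"
    # Fewer attempts than the limit: the last event was a plain nomatch.
    return "nomatch"


print(collect([0.1, 0.9]))
print(collect([0.1, 0.2, 0.05]))
```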

For speech applications, you can set timeout parameters such as how long to wait for speech once a prompt has finished playing before throwing a noinput event. Refer to sections 2.3.6 (record element) and 6.3.2 (Generic Speech Recognizer Properties) of the VoiceXML specification for more information on these VoiceXML properties.

You can also choose whether to use the initial message after nomatch and noinput recovery messages at question and answer nodes.

You can set high- and low-confidence thresholds separately for each entity in your project.

In a multilingual project, you can set confidence thresholds separately for each language.

In projects created with Answers, low-confidence thresholds are higher by default than in projects that don’t use Answers, or where Answers was added after the project was created. This low-confidence threshold (that is, the score below which the application falls back to Answers) is currently set to 0.5, but you might observe a different value in projects you create later during the preview period. Experiment with different confidence-threshold values (for both low- and high-confidence thresholds) in your project, until you’re satisfied with its behavior.

Note:

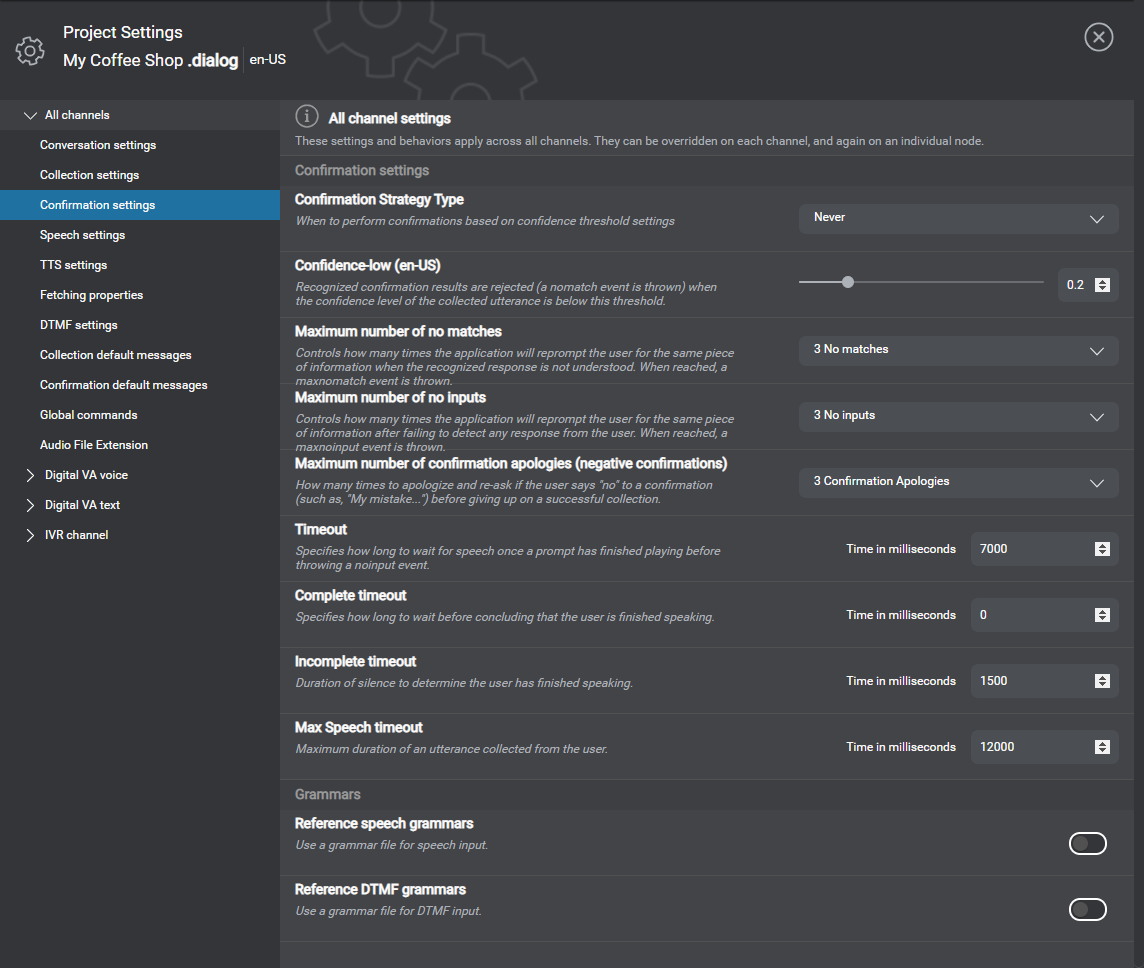

Answers and Builder are in preview. For more information, see Copilot in Mix (preview).

Confirmation settings

Set the confirmation strategy for entities, including the low-confidence threshold below which the application rejects a collected utterance at the confirmation step and throws a nomatch event, and how many times the application will try to collect the same piece of information after the user responds negatively to the confirmation question.

In multilingual projects, you can set low-confidence thresholds separately for each language.

In projects meant to support a VoiceXML application, you can specify GrXML grammars—available at the project level only—for speech and DTMF confirmation interactions. When specified, confirmation grammars apply to all channels.

Speech settings

Set the desired recognition speed and sensitivity, the weight for the ASR domain language model, and the default barge-in type (speech vs. hotword).

Barge-in is enabled by default, and can be disabled for individual messages in the Messages resource panel, or at the node level (in the speech settings of a question and answer node, or in the settings of a message node). Barge-in handling depends on the recognizer used in the environment where the application is to be deployed. Refer to section 4.1.5 Bargein of the VoiceXML specification for more information.

TTS settings

No default values. Only available in projects with channels that support the TTS modality.

Choose the desired voice per language, including gender and quality, for the text-to-speech engine.

Fetching properties

Available at the global (all channels) level, and at the node level for data access nodes.

Set how long to wait before delivering a latency message when a data access request is pending (fetch delay, default is 500 ms), and the minimum time to play the message once started (fetch minimum, default is 0 ms, applies to audio messages only).
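
The interplay between the two values can be sketched as a small timing calculation. The function name is hypothetical, and the sketch assumes that the latency message is long enough (or loops) to cover the wait and that it stops as soon as both the data has arrived and the minimum play time has elapsed.

```python
def latency_message_playtime(request_ms, fetch_delay_ms=500, fetch_minimum_ms=0):
    """Return how long (in ms) the latency message plays for a given request duration.

    Returns 0 when the request completes before the fetch delay expires,
    in which case the message is never started.
    """
    if request_ms <= fetch_delay_ms:
        return 0
    # Once started, the message plays until the data arrives,
    # but never for less than the fetch minimum.
    return max(request_ms - fetch_delay_ms, fetch_minimum_ms)


print(latency_message_playtime(300))             # request finishes before the delay
print(latency_message_playtime(1200))            # message plays until the data arrives
print(latency_message_playtime(600, 500, 2000))  # fetch minimum keeps the message playing
```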

DTMF settings

Only available in projects with channels that support DTMF input.

Set the maximum time allowed between each DTMF character entered by the user (interdigit timeout, default is 3000 ms), the delay before assuming DTMF input is finished once the grammar has reached a termination point and won’t allow additional DTMF input other than the terminating character, if any (terminating timeout, default is 2000 ms), and the character that terminates DTMF input (terminating character, optional, maximum 1 character, default is #). Refer to Appendix D, Timing Properties, of the VoiceXML specification for more information on these VoiceXML properties.
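
To illustrate how the three DTMF settings relate, here is a simplified simulation. It is a sketch under stated assumptions, not how a recognizer is implemented: the grammar is reduced to a maximum number of digits (`max_digits`, a hypothetical parameter), the wait before the first key is not modeled, and keys pressed after the grammar is complete (other than the terminating character) are ignored.

```python
def collect_dtmf(keys, max_digits, interdigit_ms=3000, term_ms=2000, term_char="#"):
    """Simulate DTMF collection from a list of (time_ms, key) events.

    Returns (digits, reason) where reason is 'terminated' or 'timeout'.
    """
    digits = ""
    last_time = None
    for time_ms, key in keys:
        if last_time is not None:
            # Once the grammar is complete, the terminating timeout applies;
            # until then, the interdigit timeout applies.
            limit = term_ms if len(digits) >= max_digits else interdigit_ms
            if time_ms - last_time > limit:
                break  # the timeout expired before this key arrived
        if key == term_char:
            return digits, "terminated"
        if len(digits) >= max_digits:
            continue  # assumption: extra keys after completion are ignored
        digits += key
        last_time = time_ms
    # No more keys (or a key arrived too late): a timeout ends the input.
    return digits, "timeout"


print(collect_dtmf([(0, "1"), (1000, "2"), (1500, "#")], max_digits=4))
print(collect_dtmf([(0, "1"), (5000, "2")], max_digits=4))
print(collect_dtmf([(0, "1"), (500, "2"), (3000, "#")], max_digits=2))
```

In the last call, the terminating character arrives 2500 ms after the grammar is complete, which is later than the 2000 ms terminating timeout, so the input ends on the timeout instead.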

Note:

With the current version of Mix.dialog, the option to disable barge-in for a question and answer node is in the Speech settings category.

Grammars

Only available at the channel level.

Specify, for each channel, whether to allow referencing external speech or DTMF grammars in question and answer nodes.

Collection default messages

Add default messages to handle situations when your application fails to recognize the user’s utterance, when it fails to detect any utterance from the user, and when it reaches the maximum number of turns, nomatch events, or noinput events.

Confirmation default messages

Add default messages to handle confirmation for entities, including recovery behaviors at the confirmation step. Creating confirmation default messages directly from the Project Settings panel allows you to reference the entity being collected in a generic way by using the Current Entity Value predefined variable.

Note:

The predefined variable Current Entity Value cannot be marked as sensitive, and is therefore never masked in message event logs. If sensitive data is likely to be presented in confirmation messages, make sure to configure local confirmation messages at every question and answer node that is set up to collect a sensitive entity.

Global commands

Only available at the global (all channels) level.

Global commands are utterances that the user can say at any time and that immediately invoke an associated action; for example: main menu, operator, goodbye. Enable the commands you want to support and add new ones if desired.

In projects meant to support a VoiceXML application, you can specify GrXML grammars for speech and DTMF command actions.

Audio file extension

Only available in projects with channels that support the Audio Script modality.

Extension to append to audio file IDs when exporting the list of messages: .wav (default), .vox, or .ulaw.

Entities settings

Only available at the channel level.

Set a confirmation strategy for specific entities (predefined and custom), confirmation default messages, and other applicable settings in the collection, speech, TTS, and DTMF setting categories.

Data privacy

Self-hosted environments: This setting category requires engine pack 2.1 (or later) for Speech Suite deployments; engine pack 3.9 (or later) for self-hosted Mix deployments.

Only available at the node level for question and answer nodes.

Marking a question and answer node as sensitive prevents all user input collected at this node from being exposed in application logs. At runtime, anything found in the interpretation results for input collected at a sensitive question and answer node will be masked in the logs (user text, utterance, intent and entity values and literals). This applies to NLU intent and entity collection, as well as to all events at that node: collection, recovery, confirmation, nomatch, noinput, max events, NO_INTENT, intent switching, and so on.

For example, in the case of a question and answer node collecting a freeform entity, marking the node itself as sensitive will prevent the nomatch literal returned by the NLU service from being exposed in the logs.
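
The effect of marking a node as sensitive can be pictured with the following sketch. The event layout, the field names, and the mask string are all hypothetical; they do not reflect the actual Mix log format and only show which kinds of values are hidden.

```python
MASK = "****"  # hypothetical mask string
# Hypothetical field names standing in for user text, utterance,
# intent and entity values, and literals.
MASKED_FIELDS = ("user_text", "utterance", "intent", "entity_values", "literals")


def mask_event(event, sensitive_nodes):
    """Return a copy of a log event with user input hidden if its node is sensitive."""
    if event.get("node") not in sensitive_nodes:
        return event
    masked = dict(event)
    for field in MASKED_FIELDS:
        if field in masked:
            masked[field] = MASK
    return masked


event = {"node": "ask_card_number", "type": "nomatch", "literals": "four one one one"}
print(mask_event(event, sensitive_nodes={"ask_card_number"}))
```

The event type is left visible in this sketch; only the values derived from the user's input are replaced.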

Note:

If the entity meant to be collected at a question and answer node that is marked as sensitive is likely to be used in dynamic messages or exchanged with an external system at runtime, make sure to also mark the entity itself as sensitive, to ensure that it will be masked in all dialog event logs.