Question and answer actions

A question and answer action is returned by a question and answer node. A question and answer node is the basic node type in dialog applications. It first provides a message prompting the user for input and then accepts that input. By orchestrating with Mix platform services, it can determine the intent and/or entities expressed in the user input. A question and answer node can ask either an initial question seeking the user intent or a follow-up question to collect an entity in relation to a question router node.

The message specified in a question and answer node is sent to the client application as a message action within a question and answer action. The message is to be played to the user by the client application via one of the configured output modalities.

The client application must then collect user input and return it to the question and answer node. The current language for the interaction is indicated in the message, under the language field. The allowed input modes, meanwhile, are indicated in the QAAction under recognition_settings.
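As a rough illustration of the fields mentioned above, the following Python sketch pulls the prompt text, the language, and the recognition settings out of a qa_action that has already been converted to a dictionary. The helper name describe_prompt, the sample prompt, the "en-US" value, and the empty recognition_settings object are illustrative assumptions; consult the DLGaaS API reference for the actual message shapes.

```python
def describe_prompt(qa_action: dict) -> dict:
    """Gather what a client needs before collecting user input.

    Assumes qa_action is a plain dict (for example, parsed from JSON).
    """
    message = qa_action.get("message") or {}
    # Join the text of every visual segment into one display string.
    segments = message.get("visual") or []
    prompt_text = " ".join(seg.get("text", "") for seg in segments).strip()
    return {
        "prompt": prompt_text,
        # Language of the interaction, read from the message.
        "language": message.get("language"),
        # Allowed input modes live under recognition_settings.
        "recognition_settings": qa_action.get("recognition_settings"),
    }


# Hypothetical qa_action used only to exercise the helper.
example_qa_action = {
    "message": {
        "visual": [{"text": "What would you like to order?"}],
        "language": "en-US",  # assumed value for illustration
    },
    "recognition_settings": {},  # contents omitted; shape not shown here
}

info = describe_prompt(example_qa_action)
print(info["prompt"], info["language"])
```

Missing fields come back as None or an empty string rather than raising, so the client can decide how to fall back.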

The collected input can be sent back to the Dialog service in one of five ways (depending on the input modalities defined in the present channel):

- As text to be interpreted by Nuance using NLUaaS. In this case, the client application returns the input string to the dialog application. See Interpreting text user input for details.

- As speech audio to be recognized and then interpreted by Nuance. This is implemented in the client app through the StreamInput method. See Handling speech input via DLGaaS for details.

- As NLU interpretation results. This assumes that interpretation of the user input is performed by an external system. In this case, the client application is responsible for returning the results of the interpretation to the dialog application, in the expected message format, and expressed in terms of intents and entities defined in your Mix project. See Handling user input externally for details.

- As ASR recognition results. This assumes that speech recognition is performed by an external system. In this case, the client application is responsible for returning the results of the recognition to the dialog application, in the expected message format. See Handling user input externally for details.

- As a selected option from an interactive element.

When a question and answer node is encountered, the dialog flow pauses until the client application returns the user input.

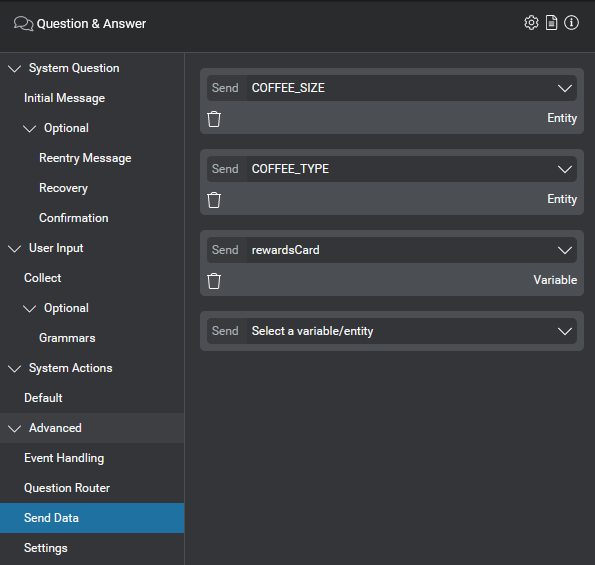

Sending data

A question and answer node can specify data to send to the client application. This data is configured in Mix.dialog, in the Send Data tab of the question and answer node. For the procedure, see Send data to the client application in the Mix.dialog documentation.

For example, in the coffee application, you might want to send entity values that you have collected in a previous node (for example, COFFEE_TYPE and COFFEE_SIZE) as well as data that you have retrieved from an external system (for example, the user’s rewards card number):

This data could be useful, for example, to update a customer’s order history on the client app. This data is sent to the client application in the data field of the qa_action; for example:

    {
      "payload": {
        "messages": [],
        "qa_action": {
          "message": {
            "nlg": [],
            "visual": [
              {
                "text": "Your order was processed. Would you like anything else today?"
              }
            ],
            "audio": [],
            "view": {
              "id": "",
              "name": ""
            }
          },
          "data": {
            "rewardsCard": "5367871902680912",
            "COFFEE_TYPE": "espresso",
            "COFFEE_SIZE": "lg"
          }
        }
      }
    }
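On the client side, reading this data could look like the minimal Python sketch below. It assumes the response has already been serialized to JSON in the shape shown above; the helper name extract_data and the order_record dictionary are hypothetical and only illustrate how the values might feed an order history update.

```python
import json

# Abbreviated copy of the response shown above.
response_json = """
{
  "payload": {
    "messages": [],
    "qa_action": {
      "message": {
        "visual": [
          {"text": "Your order was processed. Would you like anything else today?"}
        ]
      },
      "data": {
        "rewardsCard": "5367871902680912",
        "COFFEE_TYPE": "espresso",
        "COFFEE_SIZE": "lg"
      }
    }
  }
}
"""


def extract_data(response: dict) -> dict:
    """Return a copy of the data field of the qa_action, or {} if absent."""
    qa_action = (response.get("payload") or {}).get("qa_action") or {}
    return dict(qa_action.get("data") or {})


response = json.loads(response_json)
data = extract_data(response)

# Hypothetical record the client app might store in its order history.
order_record = {
    "type": data.get("COFFEE_TYPE"),
    "size": data.get("COFFEE_SIZE"),
    "rewards_card": data.get("rewardsCard"),
}
print(order_record)
```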

Interactive elements

Question and answer actions can include information to define interactive input elements to be displayed by the client app in an interactive text chat application. This information is sent to the client app in the selectable field of the qa_action. Interactive elements are configured in Mix.dialog within question and answer nodes.

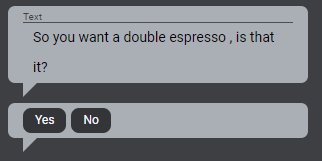

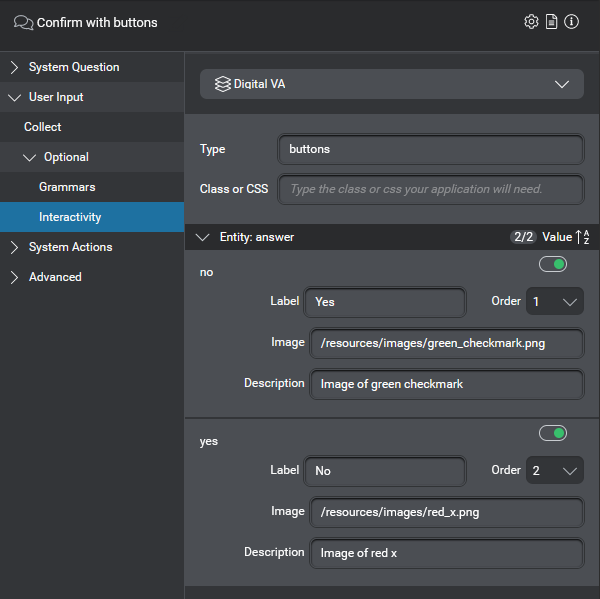

For example, in a web version of the coffee application, you may want to display Yes/No buttons so that users can confirm their selection for an entity named “answer” which takes values of Yes or No:

This is useful for question and answer nodes associated with a question router node used to collect a value for an entity defined in the Mix NLU model. For the procedure to define an interactive element in a question and answer node, see Define interactive elements in the Mix.dialog documentation.

For example, for the Yes/No buttons scenario above, you could configure two elements, one for each button, as follows:

For information on how to handle interactive input in a client app, see Handling interactive input.
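To make the Yes/No scenario more concrete, here is a hedged Python sketch of how a client might turn the selectable field into buttons and map a click back to an entity value. Only the selectable field name comes from this page. The inner layout (a selectable_items list whose entries carry a value with id and value, plus a display_text) and the helper names list_buttons and selection_for are assumptions made for illustration; check the DLGaaS API reference for the actual structure.

```python
def list_buttons(qa_action: dict) -> list:
    """Return (label, entity_id, entity_value) tuples for each selectable item.

    The layout of the selectable field used here is assumed, not confirmed.
    """
    selectable = qa_action.get("selectable") or {}
    buttons = []
    for item in selectable.get("selectable_items", []):
        value = item.get("value") or {}
        # Fall back to the raw entity value when no display text is given.
        label = item.get("display_text") or value.get("value", "")
        buttons.append((label, value.get("id"), value.get("value")))
    return buttons


def selection_for(buttons: list, clicked_label: str) -> dict:
    """Map the label the user clicked back to an entity id/value pair."""
    for label, entity_id, entity_value in buttons:
        if label == clicked_label:
            return {"id": entity_id, "value": entity_value}
    raise KeyError(f"no button labelled {clicked_label!r}")


# Hypothetical qa_action for an entity named "answer" with Yes/No values.
example_qa_action = {
    "selectable": {
        "selectable_items": [
            {"value": {"id": "answer", "value": "yes"}, "display_text": "Yes"},
            {"value": {"id": "answer", "value": "no"}, "display_text": "No"},
        ]
    }
}

buttons = list_buttons(example_qa_action)
choice = selection_for(buttons, "No")
print(choice)
```

The client would then return the chosen id/value pair to the dialog application as the selected option.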

Resources for interpreting user input

Question and answer actions include settings and resources to help interpret user input when this is orchestrated on the client side rather than by Dialog.

This includes:

For more details, see Resources for external orchestration.
