Dialog essentials

From an end-user’s perspective, a dialog-enabled app is one that understands natural language, can respond in kind, and, where appropriate, can extend the conversation by following up the user’s turn with appropriate questions and suggestions, all the while maintaining a memory of the context of what happened earlier in the conversation.

Mix dialog projects are created using the Mix.dialog web tool. Using Mix tooling, these projects are built and deployed as applications on the Mix platform. This document describes how to access a Mix dialog application at runtime from a client application using the Dialog gRPC API.

This overview introduces concepts that will help you understand how to write your client application.

What is a conversation?

The flow of the Dialog API is based around the metaphor of a conversation between two parties. With Mix Dialog, this is a conversation between one human user, who enters text and speech inputs through some sort of client app UI, and a Dialog agent running on a server. The API provides the interface between the client app and the Dialog agent. The model here is a conversation between a person and an agent from an organization or company that the person might want to contact.

Similar to a person dealing with a human agent, the human user is assumed to have some purpose in the conversation. They will come to the conversation with an intent, and the goal of the agent is to help understand that intent and help the person achieve it. The person might also introduce a new intent during the conversation.

To understand how the flow of the API works, it helps to reflect for a moment on what a conversation is. In its simplest form, a conversation is a series of more or less realtime exchanges between two people over a period of time. People take turns speaking, and communicate with each other in a back-and-forth pattern.

Structure of a conversation

Taken a little abstractly, a conversation between a user and an agent could look like this:

- Some formalities at the start to establish communications, agree to have a conversation, and start keeping track of the conversation.

- Once the formalities are done, the user signals to the agent a desire to begin, and the agent replies to start off the conversation.

- They continue through a few rounds of back and forth where the user says something or provides some requested data, the Dialog agent processes this, and responds to carry on the dialog flow.

- This process continues until either the user or the Dialog agent ends the conversation.

What this looks like in the API

In the Dialog client runtime API, you use a Start request to establish a conversation. This creates a session on the Dialog side to hold the conversation and any resources it needs for a set timeframe.

The dialog proceeds in a series of steps, where at each step, the client app sends either an interpretation of user intent (carried out by a non-Mix tool), or raw input from the user (audio or text), as well as possibly data. In the case of raw user input, Dialog calls on Mix services to infer the intent of the user input. The Dialog agent advances the flow of the dialog based on the inferred user intent and then responds by sending informational messages, prompts for input, references to files for the client to use or play, or requests to the client for data. The way this works depends on what the client application sends to Dialog:

- Text input

- Audio input

- Requested data

- Prompt to continue with flow of server-side data transfer

- Event related to issues with external input handling

Note that the flow of the API is structured around steps of user input, followed by the agent response. It is true that the agent’s response at any step is a reply to the client input in the same step. But remember that in conversations with some sort of agent, be it human or virtual, the agent generally drives or steers the conversation. For example, “Welcome to our store. How may I help you?” In Mix.dialog, you create a dialog flow, and the conversation is driven by this flow.

By convention, an agent will generally start off the conversation and then continue to direct the flow of the conversation toward getting any additional information needed to fulfill the request. As well, when a user gives input, it is generally in response to something asked for in the previous step of the conversation by the agent. And when data is sent in a step, it is a response to a request for data in the previous step.

At the start of the conversation, the client app needs a way to “poke” the API to reply with the initial greeting prompts, but without sending any input. The API enables you to do this by sending a first Execute request with an empty payload. This causes the Dialog agent to respond with its standard initial greeting prompts, and the conversation is underway.

See Client app development for a more detailed description of how to access and use the API to carry out a conversation.

Session

A session represents an ongoing conversation between a user and the Dialog service for the purpose of carrying out some task or tasks, where the context of the conversation is maintained for the duration. For example, consider the following scenario for a coffee app:

- Service: Hello and welcome to the coffee app! What can I do for you today?

- User: I want a cappuccino.

- Service: OK, in what size would you like that?

- User: Large.

- Service: Perfect, a large cappuccino coming up!

A session is started by the client, and ends when the natural flow of the conversation is complete or the session times out.
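The coffee scenario above, together with the Start request and the empty first Execute request described earlier, can be sketched in a few lines of Python. This is a toy model only: `FakeDialogAgent`, its `start` and `execute` methods, and the keyword matching are hypothetical stand-ins invented for illustration, not the Dialog gRPC API or its real request and response messages.

```python
import uuid


class FakeDialogAgent:
    """Hypothetical in-memory stand-in for a Dialog agent (not the real service)."""

    def __init__(self):
        self._sessions = {}  # session ID -> context remembered across turns

    def start(self):
        """Create a session and return its ID (models a Start request)."""
        session_id = str(uuid.uuid4())
        self._sessions[session_id] = {"drink": None}
        return session_id

    def execute(self, session_id, user_text=None):
        """Advance the conversation by one step (models an Execute request)."""
        context = self._sessions[session_id]
        if user_text is None:
            # Empty payload: the agent replies with its initial greeting.
            return "Hello and welcome to the coffee app! What can I do for you today?"
        if context["drink"] is None:
            # Toy keyword match standing in for real intent inference.
            context["drink"] = "cappuccino" if "cappuccino" in user_text.lower() else "coffee"
            return "OK, in what size would you like that?"
        size = user_text.strip().rstrip(".").lower()
        drink = context["drink"]
        del self._sessions[session_id]  # the dialog reached its natural conclusion
        return f"Perfect, a {size} {drink} coming up!"


agent = FakeDialogAgent()
session_id = agent.start()
greeting = agent.execute(session_id)  # first Execute, sent with no input
size_prompt = agent.execute(session_id, "I want a cappuccino.")
confirmation = agent.execute(session_id, "Large.")
print(greeting, size_prompt, confirmation, sep="\n")
```

The session context is what lets the last turn work: "Large." alone carries no drink, so the agent relies on what it remembered from the previous step.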

The length of a session is flexible, and can handle different types of dialog, from a short burst of interaction to carry out one task for a user, to a series of interactions carrying out multiple tasks over an extended period of time.

Session ID

The interactions between the client application and the Dialog service for this scenario occur in the same session. A session is identified by a session ID. Each request and response exchanged between the client app and the Dialog service for that specific conversation must include that session ID referencing the conversation, and its context. If you do not provide a session ID, a new session is created and you are provided with a new session ID.

Session context

A session holds a context of the history of the conversation. This context is a memory of what the user said previously and what intents were identified previously. The context improves the performance of the dialog agent in subsequent interactions by giving additional hints to help with interpreting what the user is saying and wants to do. For example, if someone has just booked a flight to Boston, and then asks to book a hotel, it is quite likely the person wants to book a hotel in the Boston area, starting the same day as the flight arrives.

The session context is maintained throughout the lifetime of the session and added to as the conversation proceeds.

Session lifetime

The session ends when one of the following happens:

- The dialog reaches its natural, pre-defined conclusion

- The client app sends a HANGUP event after the user hangs up or disconnects

- The session times out due to inactivity

Session idle timeout limit

If the user does not respond for a long enough period of time, the session times out and ends. This is set by a configurable session idle timeout limit.

Configure session idle timeout

Depending on the type of channel used for the conversation, different idle timeout limits will make sense. For a synchronous voice call over the phone with an IVR agent, a shorter timeout limit is appropriate. For a more asynchronous chatbot, a much longer timeout limit may be appropriate. In recognition of this, the Dialog service allows you to configure the session idle timeout limit.

The session idle timeout limit is configurable up to a maximum of 259200 seconds or 72 hours. This limit can be set at the start of the dialog using the Start method. For more information on session IDs and session timeout values, see Step 3. Start conversation. If you do not specify a session timeout value, the default value of 900 seconds (15 minutes) is used.
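As a rough illustration of the limits above, a client could validate the timeout it intends to send before calling Start. The helper name `resolve_idle_timeout` is hypothetical, and rejecting out-of-range values on the client side is an assumption: this page does not say how the service itself treats a value above the maximum.

```python
DEFAULT_IDLE_TIMEOUT_SEC = 900       # 15 minutes, used when no value is specified
MAX_IDLE_TIMEOUT_SEC = 259_200       # 72 hours, the documented maximum


def resolve_idle_timeout(requested_sec=None):
    """Return the idle timeout a client would send, or the default if none is given.

    Assumption: out-of-range values are rejected here, client-side. The
    service's own handling of such values is not described in this overview.
    """
    if requested_sec is None:
        return DEFAULT_IDLE_TIMEOUT_SEC
    if requested_sec <= 0:
        raise ValueError("idle timeout must be a positive number of seconds")
    if requested_sec > MAX_IDLE_TIMEOUT_SEC:
        raise ValueError(f"idle timeout cannot exceed {MAX_IDLE_TIMEOUT_SEC} seconds")
    return requested_sec


ivr_timeout = resolve_idle_timeout(120)            # short limit for a phone call
chatbot_timeout = resolve_idle_timeout(24 * 3600)  # longer limit for asynchronous chat
unspecified_timeout = resolve_idle_timeout()       # falls back to the default
```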

Check remaining idle time

Using the session ID, your client application can check whether the session is still active and get an estimate of how much time is left in the session using the Status method. For more information, see Step 5. Check session status.

Reset idle time

The idle timeout limit is not a limit on the total length of the session, but on idle time without activity. Any request that advances the conversation by calling Execute() or ExecuteStream() resets the clock on the idle timeout.

If you simply want to reset the time remaining to keep the session alive without otherwise advancing the conversation, send an Update request specifying the session ID but with the payload left empty. For more information, see Step 6. Update session data.
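The difference between idle time and total session length can be modeled with a small clock. This is a conceptual sketch under stated assumptions: `IdleClock` and its methods are hypothetical names, and times are plain numbers of seconds passed in by the caller rather than anything read from the service.

```python
class IdleClock:
    """Toy model of a session idle timer (hypothetical, not part of the API)."""

    def __init__(self, timeout_sec, now=0.0):
        self.timeout_sec = timeout_sec
        self._last_activity = now

    def remaining(self, now):
        """Seconds left before the session would time out, never below zero."""
        return max(0.0, self.timeout_sec - (now - self._last_activity))

    def is_expired(self, now):
        return self.remaining(now) == 0.0

    def touch(self, now):
        """Record activity: an Execute, an ExecuteStream, or an empty Update."""
        if self.is_expired(now):
            raise RuntimeError("session already timed out")
        self._last_activity = now


clock = IdleClock(timeout_sec=900)
left_before = clock.remaining(now=600)   # 300 seconds left after 10 idle minutes
clock.touch(now=600)                     # any activity resets the idle clock
left_after = clock.remaining(now=1400)   # 100 seconds left, 800 seconds after the reset
```

At `now=1400` the session has lasted longer than the 900-second limit in total, yet it is still alive because the limit only counts time since the last activity.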

Session data

Each session has memory designated to hold data related to the session. This includes contextual information about the user inputs during the session as well as session variables.

Session variables

Variables of different types can be used to hold data needed during a session. Dialog includes several useful predefined variables. You can also create new user-defined variables of various types in Mix.dialog.

For both predefined and user-defined variables, values can be assigned:

- In Mix.dialog when the dialog is defined

- Through data transfers from the client app or from external systems

Different variable types have their respective access methods defined, allowing you to retrieve variable values and components of those values in Mix.dialog. This allows you to define conditions, create dynamic messages content, and make assignments to other variables.

Assigning variables through data transfer

In some situations, you may want to send variables data from the client application to the Dialog service to be used during the session. For example, at the beginning of a session, you might want to send the geographical location of the user, the user name and phone number, and so on. You might also want to update the same values mid-session. As well, data transfers can be used during the session to provide wordsets specifying the relevant options for dynamic list entities.

Note:

You can only assign values for variables that have already been defined in Mix.dialog, whether predefined or user-defined. For more information, see Data exchange and resources.
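The rule in the note can be mirrored on the client side with a simple check before sending data. Everything in this sketch is hypothetical: the variable names, the `DEFINED_VARIABLES` set, and the choice to raise an error are illustrative assumptions, and the sketch says nothing about how the Dialog service itself reacts to an undefined variable.

```python
# Hypothetical names standing in for variables defined in a Mix.dialog project.
DEFINED_VARIABLES = {"userName", "userPhone", "userCity"}


def apply_data_transfer(session_variables, incoming):
    """Return a copy of the session variables updated with the incoming values.

    Assumption: names that were never defined are rejected up front.
    """
    unknown = sorted(set(incoming) - DEFINED_VARIABLES)
    if unknown:
        raise KeyError(f"not defined in the dialog project: {', '.join(unknown)}")
    updated = dict(session_variables)
    updated.update(incoming)
    return updated


# Values sent at the beginning of the session.
variables = apply_data_transfer({}, {"userName": "Alex", "userCity": "Boston"})
# The same variable updated mid-session.
variables = apply_data_transfer(variables, {"userCity": "Montreal"})
```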

Session data lifetime

Values for variables stored in the session persist for the lifetime of the session or until the variable is updated or cleared during the session.

User ID

It is often useful to associate an interaction with a unique user ID.

This ID can be useful for:

- General Data Protection Regulation (GDPR) compliance: Logs for a specific user can be deleted, if necessary.

- Performance tuning: User-specific voice tuning files and NLU wordsets (such as contact lists) can be saved and used to improve performance.

On most interactions with the API, the client application can provide a user_id. For details see User ID.

Nodes and actions

You create applications in Mix.dialog using nodes. Each node performs a specific task, such as asking a question, playing a message, and performing recognition. As you add nodes and connect them to one another, the dialog flow takes shape in the form of a graph.

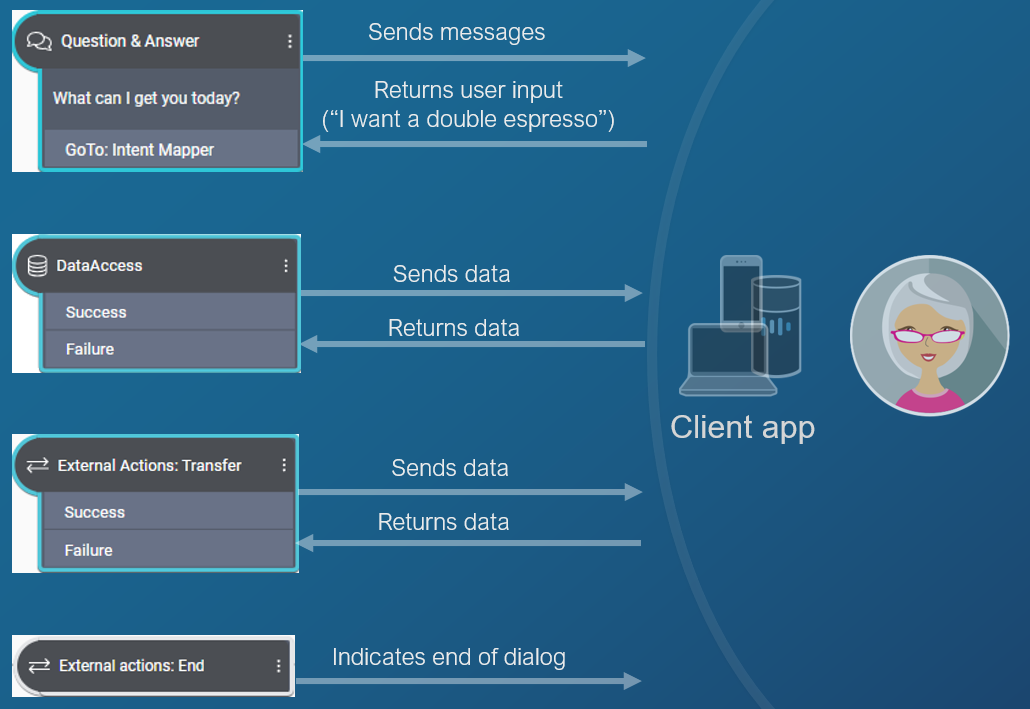

At specific points in the dialog, when the Dialog service requires input from the client application, it sends an action to the client app. In the context of DLGaaS, the following Mix.dialog nodes trigger a call to the DLGaaS API and send a corresponding action.

Message node

The message node defines message content to play to a user without seeking any input. The message specified in a message node is sent to the client application as a message action.

Note:

Message nodes do not trigger a call to the API by themselves. Rather they are accumulated and sent when one of the nodes below is encountered. See Message actions for details.
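The accumulation behavior described in the note can be pictured as follows. This is a simplified model, assuming a linear flow represented as `(kind, text)` tuples; the `batch_responses` function, the node kind strings, and the dictionary shape are hypothetical and do not reflect the real response payloads.

```python
def batch_responses(nodes):
    """Group a linear list of (kind, text) nodes into per-response batches.

    Message nodes are held back; every other node kind flushes the pending
    messages together with its own action. The flow is assumed to end with
    an "end" node, so nothing is left pending.
    """
    responses, pending = [], []
    for kind, text in nodes:
        if kind == "message":
            pending.append(text)
            continue
        responses.append({"messages": pending, "action": kind, "text": text})
        pending = []
    return responses


flow = [
    ("message", "Welcome to the coffee app!"),
    ("message", "Today's special is a cappuccino."),
    ("question_and_answer", "What can I do for you today?"),
    ("message", "Thanks for your order."),
    ("end", ""),
]
batches = batch_responses(flow)
```

Here five nodes produce only two responses: the two opening messages travel with the question, and the closing message travels with the end action.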

Question and answer

The objective of the question and answer node is to define a question to prompt collection of user input. It sends a message to the client application and expects the client application to collect and return user input. The user input can be speech audio, a text utterance, a natural language understanding interpretation, or a selected item from an interactive list of options. For example, in the coffee app, the dialog may tell the client app to ask the user “What type of coffee would you like today?” and then to return the user’s answer.

The message specified in a question and answer node is sent to the client application as a question and answer action. To continue the flow, the client application must collect input from the user in the current conversation language via one of the allowed input modalities and return the user input to the question and answer node.

See Question and answer actions for details.

Data access

A data access node expects data from a data source to continue the flow. The data source can either be a backend server or the client app, and this is configurable in Mix.dialog. For example, in a coffee app, the dialog may ask the client application to query the price of the order or to retrieve the name of the user.

When Mix.dialog is configured for client-side data access, information is sent to the client application in a data access action, identifying what data the Dialog service needs and providing any input data needed to retrieve that information. It also provides information to help the client application smooth over any delays while waiting for the data access. To continue the flow, the client application must return the requested data to DLGaaS.

See Data access actions for details.

When Mix.dialog is configured for server-side backend data access, DLGaaS sends the client application a continue action and awaits a response before proceeding with the data access. The continue action provides information to help the client application smooth over any delays waiting on the DLGaaS communicating with the server backend. To continue the flow, the client application must respond to DLGaaS.

See Continue actions for details.

External actions: Transfer and End

There are two types of external action nodes:

- Transfer: This node triggers an escalation action to be sent to the client application; it can be used, for example, to escalate to a live agent. It sends data to the client application. To continue the flow, the client application must return a returnCode, at a minimum. See Transfer actions for details.

- End: This node triggers an end action to indicate the end of the dialog application. It does not expect a response from the client app. See End actions for details.
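The node descriptions in this section boil down to a rule for what the client owes the service after each kind of action. The sketch below summarizes that rule. The `Action` enum, the `build_reply` function, and the dictionary keys other than `returnCode` are hypothetical names chosen for illustration; the real actions are messages in the API responses, not Python enums.

```python
from enum import Enum


class Action(Enum):
    MESSAGE = "message"
    QUESTION_AND_ANSWER = "question_and_answer"
    DATA_ACCESS = "data_access"
    CONTINUE = "continue"
    ESCALATION = "escalation"  # sent by a Transfer node
    END = "end"


def build_reply(action, *, user_input=None, data=None, return_code=None):
    """Return what the client sends back for an action, or None if nothing is expected."""
    if action is Action.QUESTION_AND_ANSWER:
        if user_input is None:
            raise ValueError("a question and answer action needs user input")
        return {"user_input": user_input}
    if action is Action.DATA_ACCESS:
        if data is None:
            raise ValueError("a data access action needs the requested data")
        return {"requested_data": data}
    if action is Action.CONTINUE:
        return {}  # an empty reply lets the server-side data access proceed
    if action is Action.ESCALATION:
        if return_code is None:
            raise ValueError("a transfer needs a returnCode at a minimum")
        return {"returnCode": return_code}
    return None  # message and end actions expect no reply of their own


qa_reply = build_reply(Action.QUESTION_AND_ANSWER, user_input="I want a cappuccino")
transfer_reply = build_reply(Action.ESCALATION, return_code="0")
end_reply = build_reply(Action.END)
```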

Languages, channels, and modalities

Depending on how the project for your dialog is defined in Mix and configured in Mix.dialog, your Dialog application will support one or more languages and channels. Each channel can support one or more modalities for playing system messages to the user and for collecting input from the user.

At runtime, a Dialog application will use one selected language and channel at any given point within the dialog.

Selecting language and channel

Many real world Dialog applications support multiple channels and languages. The client application needs to select which channel and language to use for an interaction via the API. This is done using a selector.

Selectors can be sent as part of a:

- StartRequest (at the start of a session)

- ExecuteRequest, whether a standalone ExecuteRequest or as part of a StreamInput (on each turn of the dialog)

A selector is the combination of:

- The channel through which you want messages to be transmitted to users, such as an IVR system, a live chat, a chatbot, and so on. The channel options available for an application are defined when creating a Mix project.

- The language to use for the interactions.

- The library to use for the interaction. (This is an advanced customization reserved for future use. Use the default value for now, which is default.)

You do not need to send the selector at each interaction if you do not need to actively change the channel or language client-side. If the selector is not included on a turn, Dialog will simply proceed with whatever is currently set for the channel and language.

In the application, the settings to use for the selector might come from the user’s preset channel preferences/defaults, or from a user selection in the application UI during the session.
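The selector behavior described above (three parts, a default library, and "keep the current settings when omitted") can be modeled like this. The `Selector` dataclass and `effective_selector` function are hypothetical Python stand-ins, and the channel and language values are made-up examples; the real selector is a message in the Dialog API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Selector:
    channel: str
    language: str
    library: str = "default"  # reserved for future use; keep the default


def effective_selector(current: Selector, requested: Optional[Selector] = None) -> Selector:
    """Return the selector in force for a turn.

    When the client sends no selector on a turn, the dialog simply carries on
    with whatever channel and language are currently set.
    """
    return requested if requested is not None else current


at_start = Selector(channel="ivr", language="en-US")                  # sent at session start
turn_1 = effective_selector(at_start)                                # nothing sent: unchanged
turn_2 = effective_selector(turn_1, Selector("chat", "fr-CA"))       # user switches in the UI
```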

Channel and language information in response payloads

You can also configure your project in Mix.dialog to programmatically switch channel and language during the conversation in response to conditions. The current settings for channel and language are returned during each turn within the API responses.

The current active channel is returned during each turn in the ExecuteResponse payload. The language is indicated for each of the messages contained within the response payload. When user input needs to be collected on a turn, the expected language is indicated in the QAAction message.

Channels and modalities

The client application is responsible for playing messages from the system to the user (for example, “What can I do for you today?”) and for collecting and returning the user input to the Dialog service (for example, “I want a cappuccino”).

Each channel can be configured on a project or question and answer node level with one or more input or output modalities. These configurations are made in Mix.dashboard and Mix.dialog.

Output modalities

System messages in a Dialog response are provided to the user for use with different output modalities. The output modalities that can potentially appear in a response message are limited to what is configured for a channel at the project level. Additionally, within this set of possibilities, the modalities that actually appear in a response message for a given channel depend on which ones have content configured in the message in Mix.dialog.

In general, output modalities can include any of the following:

- TTS: Generated speech audio from text-to-speech (TTS)

- Rich text: Text to be visually displayed, for example, in a text chat

- Audio script: Prerecorded audio file references to be played to the user

Input modalities

Input modalities define the ways input collection is supported for a channel at a point within the dialog. The allowed input modalities for a channel are configured at a project level. Additionally, within this set of possibilities, modalities can be disabled for a channel at a question and answer node level in Mix.dialog.

In general, input modalities can include any of the following:

- Text: Typed text

- Interactivity: Selecting from a list of options via an interactive element

- Voice: Speech audio

- DTMF: Selecting from a list of options or entering a string of characters via touchtone phone inputs
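The two-level configuration described above amounts to a set difference: start from what the project allows for the channel, then remove whatever a given question and answer node disables. The channel names, the `PROJECT_INPUT_MODALITIES` mapping, and the function name below are hypothetical examples, not values read from a real Mix project.

```python
# Hypothetical project-level configuration: channel -> allowed input modalities.
PROJECT_INPUT_MODALITIES = {
    "ivr": {"voice", "dtmf"},
    "chat": {"text", "interactivity"},
}


def allowed_input_modalities(channel, disabled_at_node=()):
    """Return the input modalities usable on a channel at a given node."""
    return PROJECT_INPUT_MODALITIES[channel] - set(disabled_at_node)


ivr_default = allowed_input_modalities("ivr")
ivr_pin_entry = allowed_input_modalities("ivr", disabled_at_node={"voice"})
```

In this example, a node that collects a PIN on the IVR channel disables voice, which leaves DTMF as the only way to answer at that point in the dialog.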

Returning user input to Dialog

The client app collects input from the user using one of the supported input modalities for the current channel.

The client application can then send the user input to the Dialog service in a few different ways depending on the allowed input modalities.

Orchestration with other Mix services

To support the Dialog service, different natural language and speech tasks will generally be required, depending on the channels your application is using and the types of input you are dealing with. You may need one or more of the following:

- Natural language understanding: For text inputs or speech recognition results, taking in a text string and interpreting the intent of the sentence and any entities

- Speech recognition: For speech inputs, taking in speech audio and returning a text transcription

- Text to speech: For speech applications, taking in a text script for the dialog response and returning this to the user as synthesized speech audio

The Dialog service does not itself perform these tasks but relies on other services to carry them out.

The Mix platform offers a set of Conversational AI services to handle these tasks:

- NLUaaS: For natural language understanding

- ASRaaS: For speech recognition

- TTSaaS: For generating text-to-speech

Your client application can handle these tasks either with the Mix services, or by using third party services.

Server-side orchestration

The Dialog service offers special integration with the other Mix services. Properly formatted requests sent to DLGaaS automatically trigger calls to those services, so your client application does not need to call them separately. When requested, Dialog orchestrates with the other Mix services behind the scenes as follows. The Dialog service:

- Prepares and forwards a request to the specific Mix service

- Receives the response from the Mix service

- Prepares and forwards this response to the client application bundled as part of the standard DLGaaS response to the initial DLGaaS request

For orchestrated ASRaaS and TTSaaS requests, the DLGaaS service supports streaming of the audio input/output in both directions.
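The three orchestration steps listed above can be sketched for the simplest case, a text input that needs natural language understanding. This is a toy model: `fake_nlu_service`, `dialog_execute`, the intent names, and the response dictionary are all hypothetical, and the keyword match stands in for a real call to an NLU service.

```python
def fake_nlu_service(text):
    """Toy stand-in for an NLU service: keyword match instead of a real model."""
    intent = "ORDER_COFFEE" if "cappuccino" in text.lower() else "NO_MATCH"
    return {"literal": text, "intent": intent}


def dialog_execute(user_text, nlu_service=fake_nlu_service):
    """Handle one client request the way server-side orchestration is described."""
    # 1. Prepare and forward a request to the specific service.
    nlu_request = {"text": user_text}
    # 2. Receive the response from that service.
    interpretation = nlu_service(nlu_request["text"])
    # Advance the (toy) dialog flow based on the inferred intent.
    if interpretation["intent"] == "ORDER_COFFEE":
        prompt = "OK, in what size would you like that?"
    else:
        prompt = "Sorry, I did not understand. What can I do for you today?"
    # 3. Bundle the result into the single response returned to the client.
    return {"messages": [prompt], "interpretation": interpretation}


response = dialog_execute("I want a cappuccino.")
```

From the client's point of view there is one request and one response; the call to the other service happens entirely inside `dialog_execute`.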

For more details about how to format inputs to trigger orchestration with Mix services, see Client app development.

Client-side orchestration

Alternatively, you can orchestrate outside Dialog with the other Mix services or even with third party tools rather than leaving it to Dialog.

The Dialog API returns resources and resource references for NLU, ASR, TTS, and NR (Nuance Recognizer) as part of QAAction messages in execute response payloads. These resources can be called upon in orchestration to tune the performance of the other Mix services.

For more details, see Resources for external orchestration.
