Client app development

The gRPC protocol for ASRaaS lets you create a client application for recognizing and transcribing speech. This section describes how to implement the basic functionality of ASRaaS in the context of a Python application.

Note:

For the complete application and how to run it yourself, see Sample recognition client.Sequence flow

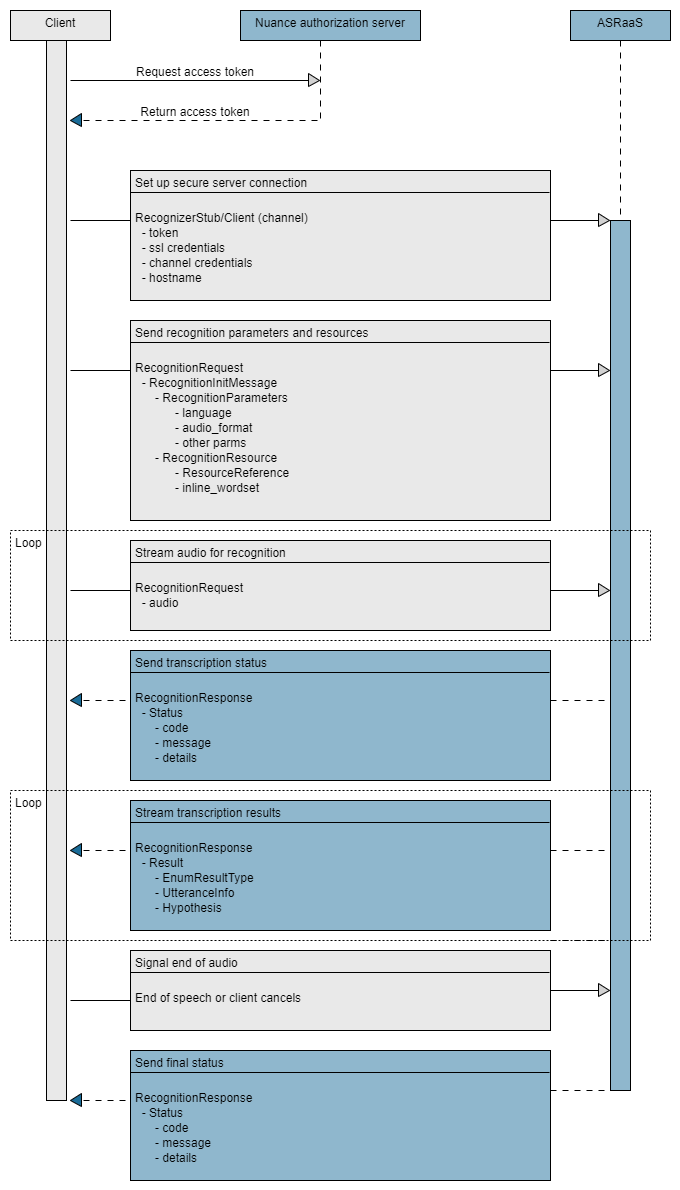

The essential tasks are illustrated in the following high-level sequence flow of a client application at runtime:

Development steps

Developing a client application involves several steps, from importing functions to processing the recognition results.

Authorize

Nuance Mix uses the OAuth 2.0 protocol for authorization. The client must provide an access token to access the ASR runtime service. The token expires after a short period of time, so it must be regenerated frequently.

Your client uses your client ID and secret from Mix (see Prerequisites from Mix) to generate an access token from the Nuance authorization server.

The token may be generated in several ways, either as part of the client or separately.

In this example, a Linux shell script or Windows batch file generates a token, stores it in an environment variable, and passes it to the client. The script replaces the colons in the client ID with %3A so curl can parse the value correctly.

Linux shell script:

#!/bin/bash

CLIENT_ID=<Mix client ID, starting with appID:>
SECRET=<Mix client secret>

# Change colons (:) to %3A in client ID
CLIENT_ID=${CLIENT_ID//:/%3A}

MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" \
"https://auth.crt.nuance.com/oauth2/token" \
-d "grant_type=client_credentials" -d "scope=asr nlu tts dlg" \
| python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'`"

python3 reco-client.py asr.api.nuance.com:443 $MY_TOKEN $1

Windows batch file:

@echo off
setlocal enabledelayedexpansion

set CLIENT_ID=<Mix client ID, starting with appID:>
set SECRET=<Mix client secret>

rem Change colons (:) to %3A in client ID
set CLIENT_ID=!CLIENT_ID::=%%3A!

set command=curl -s ^
-u %CLIENT_ID%:%SECRET% ^
-d "grant_type=client_credentials" -d "scope=asr nlu tts dlg" ^
https://auth.crt.nuance.com/oauth2/token

for /f "delims={}" %%a in ('%command%') do (
    for /f "tokens=1 delims=:, " %%b in ("%%a") do set key=%%b
    for /f "tokens=2 delims=:, " %%b in ("%%a") do set value=%%b
    goto done:
)

:done

rem Remove quotes
set MY_TOKEN=!value:"=!

python reco-client.py asr.api.nuance.com:443 %MY_TOKEN% %1

The client uses the token to create a secure connection to the ASRaaS service:

# Set arguments
hostaddr = sys.argv[1]
access_token = sys.argv[2]
audio_file = sys.argv[3]

# Create channel and stub
call_credentials = grpc.access_token_call_credentials(access_token)
ssl_credentials = grpc.ssl_channel_credentials()
channel_credentials = grpc.composite_channel_credentials(ssl_credentials, call_credentials)
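The token can also be requested from within the client instead of from a wrapper script. The following is a minimal sketch using only the Python standard library; it is not part of the sample client. The names `build_token_request`, `fetch_token_payload`, and `TokenCache` are hypothetical, and the sketch assumes the authorization server returns the standard OAuth 2.0 `expires_in` field alongside `access_token`.

```python
import base64
import json
import time
import urllib.parse
import urllib.request

# Token endpoint and scope taken from the scripts above.
TOKEN_URL = "https://auth.crt.nuance.com/oauth2/token"
SCOPE = "asr nlu tts dlg"


def build_token_request(client_id, secret):
    """Build (but do not send) the client-credentials token request."""
    # Percent-encode the client ID so its colons become %3A, as the scripts do.
    quoted_id = urllib.parse.quote(client_id, safe="")
    basic = base64.b64encode(f"{quoted_id}:{secret}".encode()).decode()
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": SCOPE}
    ).encode()
    return urllib.request.Request(
        TOKEN_URL, data=body, headers={"Authorization": f"Basic {basic}"}
    )


def fetch_token_payload(client_id, secret):
    """Send the request and return the decoded JSON response."""
    request = build_token_request(client_id, secret)
    with urllib.request.urlopen(request, timeout=10) as resp:
        return json.load(resp)


class TokenCache:
    """Reuse a token until shortly before it expires, then fetch a new one."""

    def __init__(self, fetch, margin=30.0, clock=time.monotonic):
        self._fetch = fetch      # callable returning the token response as a dict
        self._margin = margin    # seconds of safety margin before expiry
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        if self._token is None or self._clock() >= self._expires_at:
            payload = self._fetch()
            self._token = payload["access_token"]
            # Assumption: the response carries "expires_in" (seconds).
            lifetime = float(payload.get("expires_in", 0))
            self._expires_at = self._clock() + lifetime - self._margin
        return self._token
```

A client could create `TokenCache(lambda: fetch_token_payload(client_id, secret))` once and call `get()` before opening each channel, so that a new token is requested only when the previous one is about to expire.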

Import functions

The client’s first step is to import all functions from the ASRaaS proto files or client stubs from gRPC setup, along with other utilities:

from nuance.asr.v1.resource_pb2 import *
from nuance.asr.v1.result_pb2 import *
from nuance.asr.v1.recognizer_pb2 import *
from nuance.asr.v1.recognizer_pb2_grpc import *

The proto files and stubs are in the following path under the location of the client: nuance/asr/v1/. Do not edit the proto or stub files.

Set recognition parameters

The client sets a RecognitionInitMessage containing RecognitionParameters, or parameters that define the type of recognition you want. Consult your generated stubs for the precise parameter names. Some parameters are:

- Language and topic (mandatory): The locale of the audio to be recognized and a specialized language pack. Both values must match an underlying data pack. For available languages, see Geographies.
- Audio format (mandatory): The codec of the audio and optionally the sample rate, 8000 or 16000 (Hz). The example extracts the sample rate from the audio file.
- Result type: How results are streamed back to the client. This example sets IMMUTABLE_PARTIAL.
- Utterance detection mode: Whether ASRaaS should transcribe one or all sentences in the audio stream. This example sets MULTIPLE, meaning all sentences.
- Recognition flags: One or more true/false recognition parameters. The example sets auto_punctuate to true, meaning the results will include periods, commas, and other punctuation.

For example:

# Set recognition parameters
def stream_out(wf):
    try:
        init = RecognitionInitMessage(
            parameters = RecognitionParameters(
                language = "en-US",
                topic = "GEN",
                audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
                result_type = "IMMUTABLE_PARTIAL",
                utterance_detection_mode = "MULTIPLE",
                recognition_flags = RecognitionFlags(auto_punctuate=True)
            )
        )

RecognitionInitMessage may also include resources such as domain language models and wordsets, which customize recognition for a specific environment or business. See Add DLMs and/or wordsets below.
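Because the sample rate is read from the audio file, it can be worth checking that the file actually uses one of the two supported rates before starting recognition. The following sketch uses only the standard `wave` module; `read_sample_rate` and `make_silent_wav` are hypothetical helpers, and the in-memory file exists only so the example can run without a real recording.

```python
import io
import wave

SUPPORTED_RATES_HZ = (8000, 16000)  # the sample rates listed above


def read_sample_rate(source):
    """Return the sample rate of a WAV file, or raise if it is not 8 or 16 kHz."""
    with wave.open(source, "rb") as wf:
        rate = wf.getframerate()
    if rate not in SUPPORTED_RATES_HZ:
        raise ValueError(f"unsupported sample rate: {rate} Hz")
    return rate


def make_silent_wav(rate, seconds=0.1):
    """Build a small 16-bit mono WAV in memory, for demonstration only."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(rate)
        wf.writeframes(b"\x00\x00" * int(rate * seconds))
    buf.seek(0)
    return buf


print(read_sample_rate(make_silent_wav(16000)))  # prints 16000
```

In a real client, `source` would be the path of the audio file passed on the command line.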

Call client stub

The client must include the location of the ASRaaS instance, the access token described in Authorize, and where the audio is obtained.

Using this information, the client calls a client stub function or class. In some languages, this stub is defined in the generated client files: in Python it is named RecognizerStub, in Go it is RecognizerClient, and in Java it is RecognizerStub:

# Set arguments, create channel, and call stub
try:
    hostaddr = sys.argv[1]
    access_token = sys.argv[2]
    audio_file = sys.argv[3]
    . . .
    call_credentials = grpc.access_token_call_credentials(access_token)
    ssl_credentials = grpc.ssl_channel_credentials()
    channel_credentials = grpc.composite_channel_credentials(ssl_credentials, call_credentials)
    with grpc.secure_channel(hostaddr, credentials=channel_credentials) as channel:
        stub = RecognizerStub(channel)
        stream_in = stub.Recognize(client_stream(wf))
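The sample reads its three inputs directly from `sys.argv`. As an alternative, the sketch below parses the same three positional arguments with the standard `argparse` module and rejects a host address that lacks a port. The `parse_args` function and its validation rule are illustrative assumptions, not part of the sample client.

```python
import argparse


def parse_args(argv=None):
    """Parse the host address, access token, and audio file arguments."""
    parser = argparse.ArgumentParser(description="ASRaaS recognition client")
    parser.add_argument("hostaddr", help="service host and port, e.g. asr.api.nuance.com:443")
    parser.add_argument("access_token", help="OAuth 2.0 access token")
    parser.add_argument("audio_file", help="path of the audio file to recognize")
    args = parser.parse_args(argv)

    # Require the host:port form used by the scripts in Authorize.
    host, sep, port = args.hostaddr.rpartition(":")
    if not sep or not host or not port.isdigit():
        parser.error("hostaddr must have the form host:port")
    return args


args = parse_args(["asr.api.nuance.com:443", "my-token", "audio.wav"])
print(args.hostaddr, args.audio_file)
```

With no argument list, `parse_args()` reads from the command line, so a missing argument produces a usage message instead of an `IndexError`.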

Request recognition

After setting recognition parameters, the client sends the RecognitionRequest stream, including recognition parameters and the audio to process, to the channel and stub.

In this Python example, this is achieved with a two-part yield structure that first sends recognition parameters then sends the audio for recognition in chunks:

yield RecognitionRequest(recognition_init_message=init)
. . .
yield RecognitionRequest(audio=chunk)

Normally your client will send streaming audio to ASRaaS for processing. For simplicity, this client simulates streaming audio by breaking up an audio file into chunks and feeding it to ASRaaS a bit at a time:

# Request recognition and simulate audio stream
def client_stream(wf):
    try:
        init = RecognitionInitMessage(
            parameters = RecognitionParameters(
                language = 'en-US',
                topic = 'GEN',
                audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
                result_type = 'FINAL',
                utterance_detection_mode = 'MULTIPLE',
                recognition_flags = RecognitionFlags(auto_punctuate=True)
            )
        )
        yield RecognitionRequest(recognition_init_message = init)

        print(f'stream {wf.name}')
        packet_duration = 0.020
        packet_samples = int(wf.getframerate() * packet_duration)
        for packet in iter(lambda: wf.readframes(packet_samples), b''):
            yield RecognitionRequest(audio=packet)
            sleep(packet_duration)
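To see how the packet arithmetic works out, the following sketch runs the same two-part generator pattern without gRPC. Plain tuples stand in for `RecognitionRequest` messages, `request_stream` is a hypothetical name, and the input is one second of generated silence rather than a real recording. The `sleep` call is omitted because nothing is being paced in real time.

```python
import io
import wave

PACKET_DURATION = 0.020  # seconds of audio per packet, as in the client above


def request_stream(wf, init):
    """Yield the init message first, then the audio in 20 ms packets.

    Plain tuples stand in for RecognitionRequest messages.
    """
    yield ("recognition_init_message", init)
    packet_samples = int(wf.getframerate() * PACKET_DURATION)
    # iter(callable, sentinel) keeps calling readframes until it returns b''.
    for packet in iter(lambda: wf.readframes(packet_samples), b""):
        yield ("audio", packet)


# Demonstration input: one second of 16-bit mono silence at 16 kHz.
buf = io.BytesIO()
with wave.open(buf, "wb") as out:
    out.setnchannels(1)
    out.setsampwidth(2)
    out.setframerate(16000)
    out.writeframes(b"\x00\x00" * 16000)
buf.seek(0)

with wave.open(buf, "rb") as wf:
    requests = list(request_stream(wf, init={"language": "en-US", "topic": "GEN"}))

kinds = [kind for kind, _ in requests]
print(kinds[0], kinds.count("audio"), len(requests[1][1]))
# prints: recognition_init_message 50 640
```

At 16 kHz, a 20 ms packet holds 320 samples, or 640 bytes of 16-bit mono audio, so one second of audio becomes 50 audio requests after the single init request.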

Process results

Finally, the client returns the results received from the ASRaaS engine. This client prints the resulting transcript on screen as it is streamed from ASRaaS, sentence by sentence, with intermediate partial sentence results when the client has requested PARTIAL or IMMUTABLE_PARTIAL results.

The results may be long or short depending on the length of your audio, the recognition parameters, and the fields included by the client. See Results. This client returns the status followed by the best hypothesis of each utterance:

# Receive results and print selected fields
try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')
except StreamClosedError:
    pass
except Exception as e:
    print(f'server stream: {type(e)}')
    traceback.print_exc()
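The `restype` expression above works only if the FINAL result type has the numeric value 0, so that any other result type is truthy. The sketch below illustrates that logic with a stand-in enum and shows one way to keep only final results when assembling a transcript. `ResultType` is a hypothetical stand-in whose values are assumptions; check the generated stubs for the real enum.

```python
from enum import IntEnum


class ResultType(IntEnum):
    """Hypothetical stand-in for the result type enum in the generated stubs."""
    FINAL = 0
    PARTIAL = 1
    IMMUTABLE_PARTIAL = 2


def label(result_type):
    """Mirror the client's expression: any non-zero result type is 'partial'."""
    return "partial" if result_type else "final"


def final_transcript(results):
    """Join the text of final results, skipping partial ones."""
    return " ".join(text for result_type, text in results if not result_type)


# (result type, text) pairs modeled on the sample output on this page.
results = [
    (ResultType.IMMUTABLE_PARTIAL, "It's Monday"),
    (ResultType.IMMUTABLE_PARTIAL, "It's Monday morning and the"),
    (ResultType.FINAL, "It's Monday morning and the sun is shining."),
]
for result_type, text in results:
    print(f"{label(result_type)}: {text}")
print(final_transcript(results))
```

Keeping partial results for display and final results for storage is a common split when the client requests PARTIAL or IMMUTABLE_PARTIAL results.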

Result type IMMUTABLE_PARTIAL

This example shows the results from my audio file, monday_morning_16.wav, a 16 kHz wave file talking about my commute into work. The audio file says:

It’s Monday morning and the sun is shining.

I’m getting ready to walk to the train and commute into work.

I’ll catch the seven fifty-seven train from Cedar Park station.

It will take me an hour to get into town.

The result type in this example is IMMUTABLE_PARTIAL, meaning that partial results are delivered after a slight delay, to ensure that the recognized words do not change with the rest of the received speech.

To run this client, see Sample recognition client. The results are the same on Linux and Windows:

stream ../audio/monday_morning_16.wav
100 Continue - recognition started on audio/l16;rate=16000 stream
partial : It's Monday
partial : It's Monday morning and the
final : It's Monday morning and the sun is shining.
partial : I'm getting ready
partial : I'm getting ready to
partial : I'm getting ready to walk
partial : I'm getting ready to walk to the
partial : I'm getting ready to walk to the train and commute
final : I'm getting ready to walk to the train and commute into work.
partial : I'll catch
partial : I'll catch the
partial : I'll catch the 750
partial : I'll catch the 757 train from
final : I'll catch the 757 train from Cedar Park station.
partial : It will take
partial : It will take me an hour
partial : It will take me an hour to get
final : It will take me an hour to get into town.
stream complete
200 Success

See Recognition parameters in request for an example of result type PARTIAL.

Result type FINAL

This example transcribes the audio file weather16.wav, which talks about winter weather in Montreal. The file says:

There is more snow coming to the Montreal area in the next few days.

We’re expecting ten centimeters overnight and the winds are blowing hard.

Our radar and satellite pictures show that we’re on the western edge of the storm system as it continues to track further to the east.

The result type in this case is FINAL, meaning only the final version of each sentence is returned:

stream ../../audio/weather16.wav
100 Continue - recognition started on audio/l16;rate=16000 stream
stream complete
final: There is more snow coming to the Montreal area in the next few days
final: We're expecting 10 cm overnight and the winds are blowing hard
final: Radar and satellite pictures show that we're on the western edge of the storm system as it continues to track further to the east
200 Success

In both these examples, ASRaaS performs the recognition using only the data pack. For these simple sentences, the recognition is nearly perfect.

Add DLMs and/or wordsets

Once you have experimented with basic recognition, you can add resources such as domain language models and wordsets to improve recognition of specific terms and language in your environment.

See Domain LMs and Wordsets for complete details, but for a simple example you could add a standalone wordset containing unusual place names used in your business.

# Define a wordset containing places your users might say
places_wordset = RecognitionResource(
    # Adjacent string literals are joined into one JSON string
    inline_wordset = (
        '{"PLACES":[{"literal":"La Jolla", "spoken":["la hoya","la jolla"]},'
        '{"literal":"Llanfairpwllgwyngyll","spoken":["lan vire pool guin gill"]},'
        '{"literal":"Abington Pigotts"},{"literal":"Steeple Morden"},'
        '{"literal":"Hoyland Common"},{"literal":"Cogenhoe","spoken":["cook no"]},'
        '{"literal":"Fordoun","spoken":["forden","fordoun"]},{"literal":"Llangollen",'
        '"spoken":["lan goth lin","lan gollen"]},{"literal":"Auchenblae"}]}'
    )
)

# Add recognition parameters, plus the wordset in resources
init = RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=16000)),
        result_type = 'FINAL',
        utterance_detection_mode = 'MULTIPLE'
    ),
    resources = [places_wordset]
)
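A hand-written JSON string is easy to break with a missing quote or comma. One alternative, sketched here with the standard `json` module, is to build the wordset as a Python dictionary and serialize it. The dictionary below is a shortened version of the wordset above, and the variable names are illustrative.

```python
import json

# Shortened version of the PLACES wordset shown above.
places = {
    "PLACES": [
        {"literal": "La Jolla", "spoken": ["la hoya", "la jolla"]},
        {"literal": "Abington Pigotts"},
        {"literal": "Cogenhoe", "spoken": ["cook no"]},
        {"literal": "Llangollen", "spoken": ["lan goth lin", "lan gollen"]},
    ]
}

# Compact separators keep the inline string short.
inline_wordset = json.dumps(places, separators=(",", ":"))

# The string would then be passed as
# RecognitionResource(inline_wordset=inline_wordset).
print(inline_wordset)
```

Serializing from a dictionary guarantees the string is valid JSON, and it lets the client assemble the list of places from another source at runtime.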

Before and after DLM and wordset

The audio file in this example, towns_16.wav, is a recording containing a variety of place names, some common and some unusual. The recording says:

I’m going on a trip to Abington Pigotts in Cambridgeshire, England.

I’m speaking to you from the town of Cogenhoe [cook-no] in Northamptonshire.

We visited the village of Steeple Morden on our way to Hoyland Common in Yorkshire.

We spent a week in the town of Llangollen [lan-goth-lin] in Wales.

Have you ever thought of moving to La Jolla [la-hoya] in California.

Without a DLM or wordset, the unusual place names are not recognized correctly.

stream ../audio/towns_16.wav
100 Continue - recognition started on audio/l16;rate=16000 stream
final : I'm going on a trip to Abington tickets in Cambridgeshire England.
final : I'm speaking to you from the town of cooking out in Northamptonshire.
final : We visited the village of steeple Morton on our way to highland common in Yorkshire.
final : We spent a week in the town of land Gosling in Wales.
final : Have you ever thought of moving to La Jolla in California.
stream complete
200 Success

But when the place names are defined in a wordset, there is perfect recognition:

stream ../audio/towns_16.wav
100 Continue - recognition started on audio/l16;rate=16000 stream
final : I'm going on a trip to Abington Pigotts in Cambridgeshire England.
final : I'm speaking to you from the town of Cogenhoe in Northamptonshire.
final : We visited the village of Steeple Morden on our way to Hoyland Common in Yorkshire.
final : We spent a week in the town of Llangollen in Wales.
final : Have you ever thought of moving to La Jolla in California.
stream complete
200 Success
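When comparing runs with and without a wordset, it can help to list only the phrases that changed. The sketch below does this with the standard `difflib` module on two of the transcript lines above; `changed_phrases` is a hypothetical helper, not part of the sample client.

```python
import difflib


def changed_phrases(before, after):
    """Return (before, after) phrase pairs where two transcripts differ."""
    a, b = before.split(), after.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    return [
        (" ".join(a[i1:i2]), " ".join(b[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


without_wordset = "I'm speaking to you from the town of cooking out in Northamptonshire."
with_wordset = "I'm speaking to you from the town of Cogenhoe in Northamptonshire."
print(changed_phrases(without_wordset, with_wordset))
# prints: [('cooking out', 'Cogenhoe')]
```

The comparison is word-based, so a change in capitalization or punctuation also counts as a difference.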

This wordset provides definitions of the unusual place names. To define your own wordsets, see Wordsets.

{
  "PLACES": [
    { "literal":"La Jolla",
      "spoken":[ "la hoya","la jolla" ] },
    { "literal":"Llanfairpwllgwyngyll",
      "spoken":[ "lan vire pool guin gill" ] },
    { "literal":"Abington Pigotts" },
    { "literal":"Steeple Morden" },
    { "literal":"Hoyland Common" },
    { "literal":"Cogenhoe",
      "spoken":[ "cook no" ] },
    { "literal":"Fordoun",
      "spoken":[ "forden","fordoun" ] },
    { "literal":"Llangollen",
      "spoken":[ "lan goth lin","lan gollen" ] },
    { "literal":"Auchenblae" }
  ]
}
