Wordsets

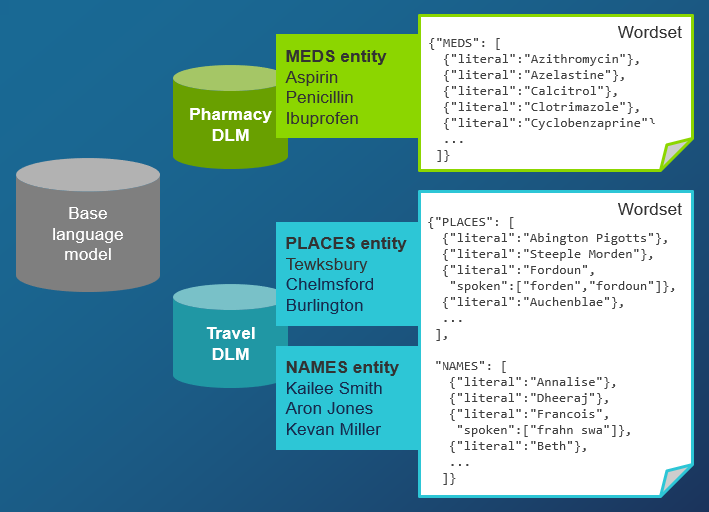

A wordset is a collection of words and short phrases that extends the ASRaaS recognition vocabulary.

A wordset typically provides additional values for entities defined in a DLM. For example, a wordset might extend the MEDS entity with more drug names. Another wordset might extend both PLACES and NAMES entities with additional locations and names.

Alternatively, a wordset may be a standalone collection of terms not tied to DLM entities.

To use a wordset in ASRaaS, declare it in RecognitionInitMessage: RecognitionResource, either as an inline wordset or a compiled wordset.

Defining wordsets

A wordset is defined in JSON format as one or more arrays. Each array has either a descriptive name or the name of a DLM entity to which words can be added at runtime.

For example, you might have an entity, PLACES, with place names used by the application, or NAMES, containing personal names. When the wordset extends an entity, it adds to the existing terms in the entity, but applies only to the current recognition session. The terms in the wordset are not added permanently to the entity.

If you are creating a wordset in another language, keep the keys literal and spoken in English, and provide the values in the other language.

This wordset provides place names:

```json
{
  "PLACES" : [
    { "literal" : "La Jolla",
      "spoken" : ["la hoya","la jolla"] },
    { "literal" : "Llanfairpwllgwyngyll",
      "spoken" : ["lan vire pool guin gill"] },
    { "literal" : "Abington Pigotts" },
    { "literal" : "Steeple Morden" },
    { "literal" : "Hoyland Common" },
    { "literal" : "Cogenhoe",
      "spoken" : ["cook no"] },
    { "literal" : "Fordoun",
      "spoken" : ["forden","fordoun"] },
    { "literal" : "Llangollen",
      "spoken" : ["lan goth lin","lan gollen"] },
    { "literal" : "Auchenblae" }
  ]
}
```

This wordset provides both places and personal names:

```json
{
  "PLACES" : [
    { "literal" : "Abington Pigotts" },
    { "literal" : "Steeple Morden" },
    { "literal" : "Hoyland Common" },
    { "literal" : "Cogenhoe", "spoken" : ["cook no"] },
    { "literal" : "Fordoun", "spoken" : ["forden","fordoun"] },
    { "literal" : "Llangollen", "spoken" : ["lan goth lin","lan gollen"] },
    { "literal" : "Auchenblae" }
  ],
  "NAMES": [
    { "literal" : "Annalise" },
    { "literal" : "Cynthia" },
    { "literal" : "Dheeraj" },
    { "literal" : "Swapnil" },
    { "literal" : "Florent", "spoken" : ["floor ah"] },
    { "literal" : "Remi" },
    { "literal" : "Francois", "spoken" : ["frahn swa"] },
    { "literal" : "Mukesh" },
    { "literal" : "Youssef" },
    { "literal" : "Oliver" }
  ]
}
```

See Wordset syntax for a full description of the wordset format.

See Before and after DLM and wordset for an example of the difference that a wordset can make to recognition.

Wordset limits

ASRaaS supports inline source wordsets and compiled wordsets. You can either provide the source wordset in the request or reference a compiled wordset using its URN in the Mix environment.

There is no fixed limit on the size or number of inline wordsets, but for performance reasons we recommend a maximum of 100 entries per wordset and 10 inline wordsets per recognition request.

Each recognition request allows five compiled wordsets for each reuse setting (LOW_REUSE and HIGH_REUSE).
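The request-level limits above can be checked on the client before a request is sent. This is a minimal sketch in plain Python; the function name and the way the request is described (a count of inline wordsets plus one reuse-setting string per compiled wordset) are hypothetical and not part of the ASRaaS API.

```python
from collections import Counter

MAX_INLINE_WORDSETS = 10    # recommended maximum per recognition request
MAX_COMPILED_PER_REUSE = 5  # allowed per reuse setting (LOW_REUSE, HIGH_REUSE)


def check_request_wordsets(num_inline, compiled_reuse):
    """Return warnings for a planned recognition request.

    num_inline: number of inline wordsets in the request.
    compiled_reuse: one reuse setting string ("LOW_REUSE" or "HIGH_REUSE")
        for each compiled wordset referenced in the request.
    """
    warnings = []
    if num_inline > MAX_INLINE_WORDSETS:
        warnings.append(
            f"{num_inline} inline wordsets (recommended max {MAX_INLINE_WORDSETS})")
    # Count compiled wordsets separately for each reuse setting.
    for reuse, count in sorted(Counter(compiled_reuse).items()):
        if count > MAX_COMPILED_PER_REUSE:
            warnings.append(
                f"{count} compiled wordsets with {reuse} (max {MAX_COMPILED_PER_REUSE})")
    return warnings


print(check_request_wordsets(2, ["LOW_REUSE"] * 6 + ["HIGH_REUSE"] * 5))
# ['6 compiled wordsets with LOW_REUSE (max 5)']
```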

When creating a compiled wordset, the request message has a maximum size of 4 MB. A gRPC error is generated if you exceed this limit:

```
<_Rendezvous of RPC that terminated with:
    status = StatusCode.RESOURCE_EXHAUSTED
    details = "Received message larger than max (10329860 vs. 4194304)"
    debug_error_string = "{"created":"@1618234583.079000000","description":"Error received from peer ipv4...","file":"src/core/lib/surface/call.cc","file_line":1055,"grpc_message":"Received message larger than max (10329860 vs. 4194304)","grpc_status":8}"
```

When using a wordset that extends an entity in a DLM, you must load the DLM along with the wordset.
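To catch the 4 MB compile-request limit described above before calling the Training API, you can measure the wordset's encoded size locally. This sketch assumes the JSON wordset source makes up most of the compile request, so its UTF-8 byte length is a useful lower bound; the helper names and the headroom allowance for the other request fields are hypothetical.

```python
import json

# 4 MB = 4 * 1024 * 1024 = 4194304 bytes, the maximum shown in the error above.
MAX_MESSAGE_BYTES = 4 * 1024 * 1024


def wordset_payload_bytes(wordset):
    """UTF-8 size of a wordset dict serialized as compact JSON."""
    text = json.dumps(wordset, separators=(",", ":"), ensure_ascii=False)
    return len(text.encode("utf-8"))


def fits_in_compile_request(wordset, headroom=64 * 1024):
    """Rough pre-check against the 4 MB limit.

    headroom is a hypothetical allowance for the other fields in the
    compile request (URNs and so on); tune it for your own requests.
    """
    return wordset_payload_bytes(wordset) + headroom <= MAX_MESSAGE_BYTES


small = {"PLACES": [{"literal": "La Jolla", "spoken": ["la hoya", "la jolla"]}]}
print(wordset_payload_bytes(small), fits_in_compile_request(small))
```

If the check fails, the wordset is too large for a single compile request and needs to be reduced.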

Inline wordsets

You may define a wordset directly in the recognition request or read it from a local file using a programming language function.

To use a source wordset, specify it as inline_wordset in RecognitionResource. Include the JSON definition directly in the inline_wordset field as compact JSON (with no whitespace between elements), enclosed in single quotation marks:

```python
# Optionally declare DLM that contains the entity to extend
travel_dlm = RecognitionResource(
    external_reference = ResourceReference(
        type = 'DOMAIN_LM',
        uri = 'urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA'
    )
)

# Define the wordset inline, using compact JSON
places_wordset = RecognitionResource(
    inline_wordset = '{"PLACES":[{"literal":"La Jolla","spoken":["la hoya","la jolla"]},{"literal":"Llanfairpwllgwyngyll","spoken":["lan vire pool guin gill"]},{"literal":"Abington Pigotts"},{"literal":"Steeple Morden"},{"literal":"Hoyland Common"},{"literal":"Cogenhoe","spoken":["cook no"]},{"literal":"Fordoun","spoken":["forden","fordoun"]},{"literal":"Llangollen","spoken":["lan goth lin","lan gollen"]},{"literal":"Auchenblae"}]}',
    weight_value = 0.2)

# Include the wordset (and optionally the DLM) in RecognitionInitMessage
init = RecognitionInitMessage(
    parameters = RecognitionParameters(...),
    resources = [travel_dlm, places_wordset]
)
```

Alternatively, store the source wordset in a local JSON file and read the file (places-wordset.json) with a programming-language function:

```python
# Declare DLM containing the entity to extend
travel_dlm = RecognitionResource(
    external_reference = ResourceReference(
        type = 'DOMAIN_LM',
        uri = 'urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA'
    )
)

# Read wordset content from local file
with open('places-wordset.json', 'r') as f:
    places_wordset_content = f.read()

places_wordset = RecognitionResource(
    inline_wordset = places_wordset_content)

# Include both DLM and wordset in RecognitionInitMessage
init = RecognitionInitMessage(
    parameters = RecognitionParameters(...),
    resources = [travel_dlm, places_wordset]
)
```
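Whether you write the wordset by hand or read it from a file, Python's standard json module can produce the compact form shown for inline_wordset. The sketch below is one way to do it; to_inline_wordset and load_inline_wordset are hypothetical helper names, and passing file content through json also confirms that it parses before it is sent.

```python
import json


def to_inline_wordset(wordset):
    """Serialize a wordset dict as compact JSON for the inline_wordset field."""
    # separators=(",", ":") removes the spaces json.dumps inserts by default;
    # spaces inside values such as "La Jolla" are kept.
    return json.dumps(wordset, separators=(",", ":"), ensure_ascii=False)


def load_inline_wordset(path):
    """Read a wordset file, confirm it parses as JSON, and return it compacted."""
    with open(path, "r", encoding="utf-8") as f:
        wordset = json.load(f)  # raises json.JSONDecodeError if the file is malformed
    return to_inline_wordset(wordset)


places = {"PLACES": [
    {"literal": "La Jolla", "spoken": ["la hoya", "la jolla"]},
    {"literal": "Auchenblae"},
]}
print(to_inline_wordset(places))
# {"PLACES":[{"literal":"La Jolla","spoken":["la hoya","la jolla"]},{"literal":"Auchenblae"}]}
```

The returned string can be passed as inline_wordset in RecognitionResource in place of a hand-written JSON literal or raw file content.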

Optionally give the wordset a weight with weight_value or weight_enum, which applies to all wordsets in the recognition. The default weight is LOW or 0.1. See Resource weights.

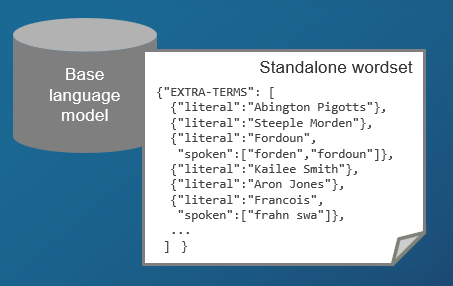

Standalone wordsets

For best recognition results, a wordset should extend an entity defined in a DLM by providing additional terms in the same category. Associating a wordset with a DLM entity means that the ASRaaS engine understands the context in which the words may occur, and can distinguish homophones (words that sound the same but are spelled differently).

You may also use wordsets that are collections of terms not tied to a DLM entity. These standalone wordsets are simple to use, as they don’t require a DLM, and give good results in many situations.

Only source wordsets may be used standalone. Compiled wordsets always require a DLM and entity.

This example uses a standalone wordset to add place names to the recognition environment.

```python
# Define the wordset inline, using compact JSON
places_wordset = RecognitionResource(
    inline_wordset = '{"PLACES":[{"literal":"La Jolla","spoken":["la hoya","la jolla"]},{"literal":"Llanfairpwllgwyngyll","spoken":["lan vire pool guin gill"]},{"literal":"Abington Pigotts"},{"literal":"Steeple Morden"},{"literal":"Hoyland Common"},{"literal":"Cogenhoe","spoken":["cook no"]},{"literal":"Fordoun","spoken":["forden","fordoun"]},{"literal":"Llangollen","spoken":["lan goth lin","lan gollen"]},{"literal":"Auchenblae"}]}',
    weight_value = 0.2)

# Include the wordset (but not a DLM) in RecognitionInitMessage
init = RecognitionInitMessage(
    parameters = RecognitionParameters(...),
    resources = [places_wordset]
)
```

Compiled wordsets

Instead of an inline wordset, you may reference a compiled wordset that was created with the Training API. To use a compiled wordset, specify it in ResourceReference as COMPILED_WORDSET and provide its URN in the Mix environment.

Optionally give it a weight with weight_value or weight_enum, which applies to all wordsets in the recognition. The default weight is LOW or 0.1. See Resource weights.

When referencing a compiled wordset, you must also load its companion DLM. For example, this compiled wordset extends the PLACES entity in the DLM with travel locations:

```python
# Declare DLM as before
travel_dlm = RecognitionResource(
    external_reference = ResourceReference(
        type = 'DOMAIN_LM',
        uri = 'urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA'
    )
)

# Declare a compiled wordset (here its context tag is the same as the DLM)
places_compiled_ws = RecognitionResource(
    external_reference = ResourceReference(
        type = 'COMPILED_WORDSET',
        uri = 'urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr'
    ),
    weight_value = 0.2
)

# Include both DLM and wordset in RecognitionInitMessage
init = RecognitionInitMessage(
    parameters = RecognitionParameters(...),
    resources = [travel_dlm, places_compiled_ws]
)
```

Inline or compiled?

You may use either inline or compiled wordsets to aid in recognition. The size of your wordset often dictates the best form:

- Small wordsets, containing 100 or fewer terms, are suitable for inline use. You can include these with each recognition request at runtime. The wordset is compiled behind the scenes and applied as a resource.
- Larger wordsets can be compiled ahead of time using the Training API. The compiled wordset is stored in Mix and can then be referenced and loaded as an external resource at runtime. This strategy significantly improves latency for large wordsets.

If you are unsure which approach to take, test the latency when using your wordset inline.
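The 100-term guideline can be applied mechanically as a first pass. This sketch counts entries across every array in a wordset dict; the threshold comes from the text above, while the function names are hypothetical and the result is only a starting point for the latency testing recommended above.

```python
INLINE_TERM_LIMIT = 100  # "100 or fewer terms" guideline for inline use


def count_terms(wordset):
    """Total number of entries across all arrays (entities) in a wordset dict."""
    return sum(len(entries) for entries in wordset.values())


def suggest_wordset_form(wordset):
    """Return "inline" for small wordsets and "compiled" for larger ones."""
    return "inline" if count_terms(wordset) <= INLINE_TERM_LIMIT else "compiled"


names = {"NAMES": [{"literal": n} for n in ["Annalise", "Cynthia", "Dheeraj"]]}
print(count_terms(names), suggest_wordset_form(names))
# 3 inline
```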

Wordset URNs

To compile a wordset, you must provide a URN for the wordset and for the DLM that it extends. See URN format of resources.

The URN for the wordset has one of these forms, depending on the level of the wordset:

- Application-level wordset. These wordsets apply to all users of an application, for example, a company’s employee directory, a movie list, product names, or travel destinations:

  urn:nuance-mix:tag:wordset:lang/context_tag/wordset_name/lang/mix.asr

- User-level wordset. These wordsets apply to a specific user of an application, for example, a contact list, patient list, music playlist, and so on:

  urn:nuance-mix:tag:wordset:lang/context_tag/wordset_name/lang/mix.asr?=user_id=user_id

| Syntax | Description |
|---|---|
| context_tag | An application context tag. This can be an existing wordset context tag or a new context tag that will be created. For clarity, we recommend you use the same context tag as the wordset’s companion DLM. |
| wordset_name | A name for the wordset. When compiling a wordset, this is a new name for the wordset being created. |
| lang | The language and locale of the underlying data pack. |
| user_id | A unique identifier for the user. |

Once the wordset is compiled, it is stored on Mix and can be referenced at runtime by a client application using the same DLM and wordset URNs. For example, these are the URNs for a wordset and its companion DLM.

Domain LM: urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA

Compiled wordset: urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr
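The URN forms above can be assembled from their parts. This is a minimal sketch; wordset_urn is a hypothetical helper, and it does not URL-encode values, so it assumes the context tag, wordset name, language, and user ID contain no characters that need escaping (it rejects "/" and "?", which the URN uses as separators).

```python
def wordset_urn(context_tag, wordset_name, lang, user_id=None):
    """Build an application-level wordset URN, or a user-level one if user_id is given."""
    parts = [context_tag, wordset_name, lang] + ([user_id] if user_id is not None else [])
    for part in parts:
        # The URN uses "/" and "?" as separators, so reject them inside values.
        if not part or "/" in part or "?" in part:
            raise ValueError(f"invalid URN component: {part!r}")
    urn = f"urn:nuance-mix:tag:wordset:lang/{context_tag}/{wordset_name}/{lang}/mix.asr"
    if user_id is not None:
        urn += f"?=user_id={user_id}"
    return urn


print(wordset_urn("names-places", "places-compiled-ws", "eng-USA"))
# urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr
```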

Scope of compiled wordsets

The wordset must be compatible with the companion DLM, meaning it must have the same locale and reference entities in the DLM.

The context tag used for the wordset does not have to match the context tag of the companion DLM, but using the same context tag for the DLM and its associated wordsets can make wordsets easier to manage.

ASRaaS and NLU both provide a similar API for wordsets, but wordsets are compiled and stored separately for each engine. If your application uses both services and requires large wordsets for both, you must compile the wordsets separately for each service.
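Part of the compatibility rule (a compiled wordset needs a companion DLM with the same locale) can be sanity-checked on the client from the URNs alone. This sketch assumes the URN layouts shown on this page (a DLM URN ending in "?=language=<lang>", and the locale as the second-to-last path segment of a wordset URN); the helper names are hypothetical, and it cannot verify that the wordset's entities actually exist in the DLM.

```python
def dlm_language(dlm_urn):
    """Locale from a DLM URN such as ...mix.asr?=language=eng-USA (None if absent)."""
    marker = "?=language="
    return dlm_urn.split(marker, 1)[1] if marker in dlm_urn else None


def wordset_language(wordset_urn):
    """Locale from .../<context_tag>/<wordset_name>/<lang>/mix.asr[?=user_id=...]."""
    path = wordset_urn.split("?", 1)[0]
    return path.split("/")[-2]


def check_compiled_wordsets(dlm_urns, wordset_urns):
    """Return problems found when pairing compiled wordsets with the DLMs in a request."""
    if wordset_urns and not dlm_urns:
        return ["compiled wordsets require their companion DLM"]
    dlm_langs = {dlm_language(u) for u in dlm_urns}
    return [f"no DLM with locale {wordset_language(u)} for {u}"
            for u in wordset_urns if wordset_language(u) not in dlm_langs]


dlm = "urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA"
ws = "urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr"
print(check_compiled_wordsets([dlm], [ws]))
# []
```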

Wordset lifecycle

Wordsets are available for 28 days after compilation, after which they are automatically deleted and must be compiled again.

Existing compiled wordsets can be updated. Compiling a wordset using an existing wordset URN replaces the existing wordset with the newer version if:

- The source wordset definition is different.
- The wordset’s time to live (TTL) has almost expired, meaning it is nearing the end of its 28-day lifecycle.
- The URN of the companion DLM is different.
- The companion DLM has been updated with new content, or its underlying data pack has been updated to a new version.

Otherwise, the wordset compilation request returns the status ALREADY_EXISTS and the existing wordset remains usable at runtime.
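For local bookkeeping, the 28-day lifecycle and the replacement conditions above can be mirrored in a small predicate. This is a sketch of the listed rules, not the service's implementation: the record layout, the dlm_version marker, and the one-day "almost expired" margin are hypothetical, and comparing parsed JSON (so whitespace-only edits count as unchanged) is an assumption about how the service compares definitions.

```python
import json
from datetime import datetime, timedelta, timezone

WORDSET_TTL = timedelta(days=28)


def expires_at(compiled_at):
    """Time at which a compiled wordset is automatically deleted."""
    return compiled_at + WORDSET_TTL


def compile_would_replace(old, new, now, near_expiry=timedelta(days=1)):
    """Predict whether recompiling under an existing URN replaces the wordset.

    old, new: hypothetical records with keys "source" (JSON text), "dlm_urn",
    and "dlm_version" (any marker that changes when the DLM content or its
    data pack changes); old also has "compiled_at".
    near_expiry: hypothetical margin for "almost expired".
    False means the request would be expected to return ALREADY_EXISTS.
    """
    if json.loads(new["source"]) != json.loads(old["source"]):
        return True  # source wordset definition is different
    if new["dlm_urn"] != old["dlm_urn"] or new["dlm_version"] != old["dlm_version"]:
        return True  # companion DLM URN, content, or data pack changed
    return expires_at(old["compiled_at"]) - now <= near_expiry  # TTL almost expired


t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
old = {"source": '{"PLACES":[{"literal":"Fordoun"}]}', "dlm_urn": "dlm-a",
       "dlm_version": 1, "compiled_at": t0}
same = {k: old[k] for k in ("source", "dlm_urn", "dlm_version")}
print(expires_at(t0).date())                                     # 2024-01-29
print(compile_would_replace(old, same, t0 + timedelta(days=5)))  # False
```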

Wordsets can also be manually deleted if no longer needed. Once deleted, a wordset is completely removed and cannot be restored.

If a recognition request includes an incompatible or missing wordset, the ResourceReference: mask_load_failures parameter determines whether the request fails or succeeds. When mask_load_failures is true, incompatible or missing wordsets are ignored and the recognition continues without error.

Wordset syntax

The JSON syntax for defining a wordset is:

```
{
  "name-1" : [
    { "literal": "written form",
      "spoken": ["spoken form 1", "spoken form n"]
    },
    { "literal": "written form",
      "spoken": ["spoken form 1", "spoken form n"]
    },
    ...
  ],
  "name-n": [ ... ]
}
```

Where:

| Field | Type | Description |
|---|---|---|
| name | String | A descriptive name for the wordset, or the name of an entity defined in a DLM. The name is case-sensitive. Consult the DLM training material for entity names. The wordset may contain terms for multiple entities. |
| literal | String | The written form of the value that ASRaaS will return in the Hypothesis: formatted_text fields. |
| spoken | Array | (Optional) One or more spoken forms of the value. When not supplied, ASRaaS guesses the pronunciation of the word from the literal. Include a spoken form only if the literal is difficult to pronounce or has an unusual pronunciation in the language. |

Wordsets can also include a “canonical” field used by NLUaaS for interpretation. This field is ignored by ASRaaS.

When a spoken form is supplied, it is the only source for recognition: the literal is not considered. If the literal pronunciation is also valid, you should include it as a spoken form. For example, the city of Worcester is pronounced wuster, but users reading it on a map may say it literally, as worcester. To allow ASRaaS to recognize both forms, specify:

```json
{ "literal" : "Worcester", "spoken" : ["wuster", "worcester"] }
```
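When reading the literal aloud is also a valid pronunciation, a small helper can append it to an entry's spoken forms. This is a sketch with a hypothetical function name; adding the literal in lowercase follows the style of the spoken forms on this page and is an assumption, and you should apply it only to entries where the literal reading is genuinely valid.

```python
def with_literal_as_spoken(entry):
    """Return a copy of a wordset entry whose spoken forms also include the literal.

    Entries without spoken forms are returned unchanged, because ASRaaS already
    derives their pronunciation from the literal. The literal is added in
    lowercase (an assumption based on the examples on this page).
    """
    spoken = entry.get("spoken")
    literal_form = entry["literal"].lower()
    if not spoken or literal_form in spoken:
        return dict(entry)
    return {**entry, "spoken": [*spoken, literal_form]}


print(with_literal_as_spoken({"literal": "Worcester", "spoken": ["wuster"]}))
# {'literal': 'Worcester', 'spoken': ['wuster', 'worcester']}
```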

Wordsets may not contain the characters < and >. For example, for this wordset:

```
{
  "PIZZA" : [
    { "literal" : "<Neapolitan>" },
    { "literal" : "Chicago" },
    . . .
```

ASRaaS generates the following error:

```
400 Bad request - Error validating wordset: Invalid characters in wordset.
Characters < and > are not allowed.
```

Other special characters and punctuation may affect recognition and should be avoided where possible in both the literal and spoken fields.

The literal field may contain special characters such as !, ?, &, %, and so on, if they are an essential part of the word or phrase. In this case, also include a spoken form without special characters, for example:

```json
{ "literal" : "ExtraMozz!!", "spoken": ["extra moz"] }
```

ASRaaS includes the special characters in the return value, for example, when the user says “I’d like to order an extra moz pizza”:

```
hypotheses {
  formatted_text: "I\'d like to order an ExtraMozz!! pizza"
  minimally_formatted_text: "I\'d like to order an ExtraMozz!! pizza"
```
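The syntax rules in this section can be checked locally before a wordset is sent or compiled, which avoids a round trip to get the 400 error shown above. This is a minimal sketch with a hypothetical function name; treating anything other than letters, digits, and whitespace as a "special character" is an assumption, and extra keys such as canonical are deliberately ignored, as ASRaaS does.

```python
import json


def validate_wordset(text):
    """Check a JSON wordset string against the syntax rules on this page.

    Returns (errors, warnings). Errors cover malformed structure and the
    forbidden characters < and >; warnings flag literals that contain special
    characters but have no spoken form.
    """
    errors, warnings = [], []
    if "<" in text or ">" in text:
        errors.append("Characters < and > are not allowed.")
    try:
        wordset = json.loads(text)
    except json.JSONDecodeError as exc:
        return errors + [f"Invalid JSON: {exc}"], warnings
    if not isinstance(wordset, dict):
        return errors + ["Top level must be a JSON object"], warnings
    for name, entries in wordset.items():
        if not isinstance(entries, list):
            errors.append(f"{name}: value must be an array")
            continue
        for i, entry in enumerate(entries):
            where = f"{name}[{i}]"
            if not isinstance(entry, dict) or not isinstance(entry.get("literal"), str):
                errors.append(f"{where}: missing string 'literal'")
                continue
            spoken = entry.get("spoken")
            if spoken is None:
                # Letters, digits, and spaces are fine; anything else counts as special.
                if any(not (c.isalnum() or c.isspace()) for c in entry["literal"]):
                    warnings.append(
                        f"{where}: {entry['literal']!r} has special characters but no spoken form")
            elif not (isinstance(spoken, list) and spoken
                      and all(isinstance(s, str) for s in spoken)):
                errors.append(f"{where}: 'spoken' must be a non-empty array of strings")
    return errors, warnings


print(validate_wordset('{"PIZZA":[{"literal":"ExtraMozz!!"}]}'))
```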
