Recognizer gRPC API

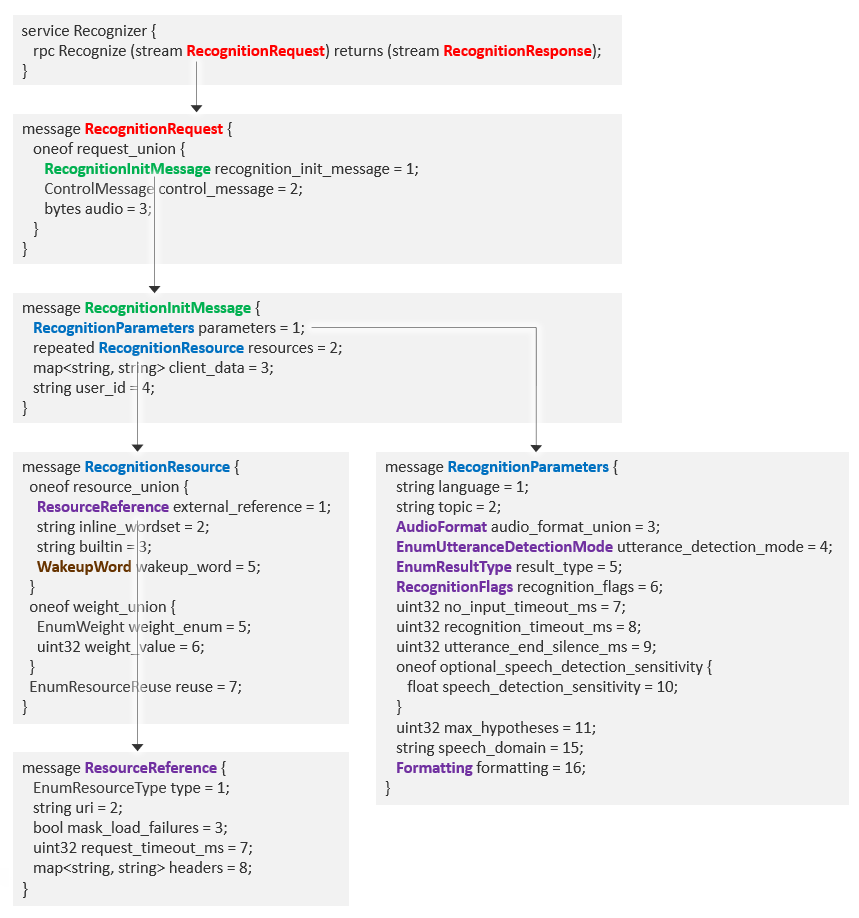

The Recognizer gRPC API contains methods for requesting speech recognition.

Tip:

Try out this API using a Sample recognition client that you can download and run.

Proto file structure

ASRaaS provides protocol buffer (.proto) files to define Nuance’s ASR Recognizer service for gRPC. These files contain the building blocks of your speech recognition applications. See gRPC setup to download these proto files.

- recognizer.proto contains the main Recognize streaming service.

- resource.proto contains recognition resources such as domain language models and wordsets.

- result.proto contains the recognition results that ASRaaS streams back to the client application.

- The RPC files contain status and error messages used by other Nuance APIs.

└── nuance
    ├── asr
    │   └── v1
    │       ├── recognizer.proto
    │       ├── resource.proto
    │       └── result.proto
    └── rpc (RPC message files)

The proto files define a Recognizer service with a Recognize method that streams a RecognitionRequest and RecognitionResponse. Details about each component are referenced by name within the proto file.

For the RPC fields, see RPC gRPC messages.

Recognizer

The Recognizer service offers one RPC method to perform streaming recognition. The method consists of a bidirectional streaming request and response message.

| Name | Request | Response | Description |

|---|---|---|---|

| Recognize | RecognitionRequest stream | RecognitionResponse stream | Starts a recognition request and returns a response. Both request and response are streamed. |

This example shows the Recognizer stub and Recognize method:

with grpc.secure_channel(hostaddr, credentials=channel_credentials) as channel:
    stub = RecognizerStub(channel)
    stream_in = stub.Recognize(client_stream(wf))

RecognitionRequest

Input stream messages that request recognition, sent one at a time in a specific order. The first, mandatory field sends recognition parameters and resources; the final field sends the audio to be recognized. Included in the Recognize method.

| Field | Type | Description |

|---|---|---|

| One of: | | |

| recognition_init_message | RecognitionInitMessage | Mandatory. First message in the RPC input stream, sends parameters and resources for recognition. |

| control_message | ControlMessage | Optional second message in the RPC input stream, for timer control. |

| audio | bytes | Mandatory. Subsequent message containing audio samples in the selected encoding for recognition. See AudioFormat. |

ASRaaS is a real-time service and audio should be streamed at a speed as close to real time as possible. For the best recognition results, we recommend an audio chunk size of 20 to 100 milliseconds.
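As a rough illustration of the chunk-size guidance, the sketch below works out how many bytes a chunk of 16-bit mono PCM occupies and splits a buffer into chunks of that size. The helper names `pcm_chunk_bytes` and `split_audio` are hypothetical and not part of the ASRaaS API; a real client would also pace the chunks, for example by waiting one chunk duration between sends.

```python
def pcm_chunk_bytes(sample_rate_hz, chunk_ms, bytes_per_sample=2):
    """Bytes in one chunk of mono PCM audio (16-bit samples by default)."""
    return sample_rate_hz * chunk_ms // 1000 * bytes_per_sample


def split_audio(audio, chunk_size):
    """Yield consecutive chunk_size-byte slices; the last one may be shorter."""
    for offset in range(0, len(audio), chunk_size):
        yield audio[offset:offset + chunk_size]


# One second of silent 16 kHz, 16-bit mono audio, split into 20 ms chunks
chunk_size = pcm_chunk_bytes(16000, 20)
chunks = list(split_audio(bytes(32000), chunk_size))
print(chunk_size, len(chunks))
```

Each slice would then be sent as the audio field of its own RecognitionRequest.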

The RecognitionRequest message includes:

RecognitionRequest
    recognition_init_message (RecognitionInitMessage)
        parameters (RecognitionParameters)
        resources (RecognitionResource)
        client_data
        user_id
    control_message (ControlMessage)
    audio

This RecognitionRequest example sends a recognition init message and then audio to be transcribed:

def client_stream(wf):
    try:
        # Start the recognition
        init = RecognitionInitMessage(. . .)
        yield RecognitionRequest(recognition_init_message = init)

        # Simulate a typical realtime audio stream
        print(f'stream {wf.name}')
        packet_duration = 0.020
        packet_samples = int(wf.getframerate() * packet_duration)
        for packet in iter(lambda: wf.readframes(packet_samples), b''):
            yield RecognitionRequest(audio=packet)

For a control_message example, see Timers and timeouts.

RecognitionInitMessage

Input message that initiates a new recognition turn. Included in RecognitionRequest.

| Field | Type | Description |

|---|---|---|

| parameters | RecognitionParameters | Mandatory. Language, audio format, and other recognition parameters. |

| resources | repeated RecognitionResource | One or more resources (DLMs, wordsets, builtins) to improve recognition. |

| client_data | map<string, string> | Map of client-supplied key, value pairs to inject into the call log. |

| user_id | string | Identifies a specific user within the application. |

The RecognitionInitMessage message includes:

RecognitionRequest
    recognition_init_message (RecognitionInitMessage)
        parameters (RecognitionParameters)
            language
            topic
            audio_format
            utterance_detection_mode
            result_type
            etc.
        resources (RecognitionResource)
            external_reference
                type
                uri
            inline_wordset
            builtin
            inline_grammar
            weight_enum | weight_value
            reuse
        client_data
        user_id

This RecognitionInitMessage example includes many optional fields:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(
            pcm = PCM(
                sample_rate_hz=wf.getframerate()
            )
        ),
        result_type = 'FINAL',
        utterance_detection_mode = 'MULTIPLE',
        recognition_flags = RecognitionFlags(auto_punctuate=True)
    ),
    resources = [travel_dlm, places_wordset],
    client_data = {'company':'Aardvark','user':'James'},
    user_id = 'james.somebody@aardvark.com'
)

This minimal RecognitionInitMessage contains just the mandatory fields:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=16000))
    )
)

RecognitionParameters

Input message that defines parameters for the recognition process. Included in RecognitionInitMessage.

The language and audio_format parameters are mandatory. All others are optional.

See Defaults for a list of default values.

If the request includes a DLM, both request and DLM must use the same language and topic. See Language in request and DLM.

| Field | Type | Description |

|---|---|---|

| language | string | Mandatory. Language and region (locale) code as xx-XX or xxx-XXX, for example, en-US or eng-USA. Not case-sensitive. |

| topic | string | Specialized language model in data pack. Case-sensitive, uppercase. Default is GEN (general). |

| audio_format | AudioFormat | Mandatory. Audio codec type and sample rate. |

| utterance_detection_mode | EnumUtteranceDetectionMode | How many utterances within the audio stream are processed. Default SINGLE. |

| result_type | EnumResultType | The level of recognition results. Default FINAL. |

| recognition_flags | RecognitionFlags | Boolean recognition parameters. |

| no_input_timeout_ms | uint32 | Maximum silence, in ms, allowed while waiting for user input after recognition timers are started. Default (0) means server default, usually no timeout. See Timers and timeouts. |

| recognition_timeout_ms | uint32 | Maximum duration, in ms, of recognition turn. Default (0) means server default, usually no timeout. See Timers and timeouts. |

| utterance_end_silence_ms | uint32 | Minimum silence, in ms, that determines the end of an utterance. Default (0) means server default, usually 500ms or half a second. See Timers and timeouts. |

| speech_detection_sensitivity | float | A balance between detecting speech and noise (breathing, etc.), 0 to 1. 0 means ignore all noise, 1 means interpret all noise as speech. Default is 0.5. |

| max_hypotheses | uint32 | Maximum number of n-best hypotheses to return. Default (0) means server default, usually 10 hypotheses. |

| speech_domain | string | Mapping to internal weight sets for language models in the data pack. Values depend on the data pack. |

| formatting | Formatting | Formatting keywords. |
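A client may want to sanity-check a few of these values before opening the stream. The sketch below is one possible approach, assuming only the constraints stated in the table (the xx-XX or xxx-XXX language pattern and the 0 to 1 sensitivity range). The function `check_parameters` is a hypothetical helper, not part of the ASRaaS API, and the service performs its own validation regardless.

```python
import re

# Matches xx-XX or xxx-XXX; the language field is documented as not case-sensitive
LANGUAGE_PATTERN = re.compile(
    r'^(?:[a-z]{2}-[a-z]{2}|[a-z]{3}-[a-z]{3})$', re.IGNORECASE
)


def check_parameters(language, speech_detection_sensitivity=0.5):
    """Return a list of problems found in the given values (empty if none)."""
    problems = []
    if not LANGUAGE_PATTERN.match(language):
        problems.append(f'language {language!r} is not xx-XX or xxx-XXX')
    if not 0.0 <= speech_detection_sensitivity <= 1.0:
        problems.append('speech_detection_sensitivity must be between 0 and 1')
    return problems


print(check_parameters('en-US'))
print(check_parameters('english', 1.5))
```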

The RecognitionParameters message includes:

RecognitionRequest
    recognition_init_message (RecognitionInitMessage)
        parameters (RecognitionParameters)
            language
            topic
            audio_format (AudioFormat):
                pcm|alaw|ulaw|opus|ogg_opus
            utterance_detection_mode (EnumUtteranceDetectionMode): SINGLE|MULTIPLE|DISABLED
            result_type (EnumResultType): FINAL|PARTIAL|IMMUTABLE_PARTIAL
            recognition_flags (RecognitionFlags):
                auto_punctuate
                filter_profanity
                mask_load_failures
                etc.
            no_input_timeout_ms
            recognition_timeout_ms
            utterance_end_silence_ms
            speech_detection_sensitivity
            max_hypotheses
            speech_domain
            formatting (Formatting)

This RecognitionParameters example includes recognition flags:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(
            pcm = PCM(
                sample_rate_hz = wf.getframerate()
            )
        ),
        result_type = 'PARTIAL',
        utterance_detection_mode = 'MULTIPLE',
        recognition_flags = RecognitionFlags(
            auto_punctuate = True,
            filter_wakeup_word = True
        )
    )
)

AudioFormat

Mandatory input message containing the audio format of the audio to transcribe. Included in RecognitionParameters.

| Field | Type | Description |

|---|---|---|

| One of: | | |

| pcm | PCM | Signed 16-bit little endian PCM, 8kHz or 16kHz. |

| alaw | ALaw | G.711 A-law, 8kHz. |

| ulaw | Ulaw | G.711 µ-law, 8kHz. |

| opus | Opus | RFC 6716 Opus, 8kHz or 16kHz. |

| ogg_opus | OggOpus | RFC 7845 Ogg-encapsulated Opus, 8kHz or 16kHz. |

This AudioFormat example sets PCM format, with alternatives shown in commented lines:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(
            pcm = PCM(
                sample_rate_hz = wf.getframerate()
            )
        ),
        # audio_format = AudioFormat(pcm = PCM()),
        # audio_format = AudioFormat(pcm = PCM(sample_rate_hz = 16000)),
        # audio_format = AudioFormat(alaw = Alaw()),
        # audio_format = AudioFormat(ulaw = Ulaw()),
        # audio_format = AudioFormat(opus = Opus(source_rate_hz = 16000)),
        # audio_format = AudioFormat(ogg_opus = OggOpus(output_rate_hz = 16000)),
        result_type = 'FINAL',
        utterance_detection_mode = 'MULTIPLE'
    )
)

PCM

Input message defining PCM sample rate. Included in AudioFormat.

| Field | Type | Description |

|---|---|---|

| sample_rate_hz | uint32 | Audio sample rate in Hertz: 0, 8000, 16000. Default 0, meaning 8000. |

Alaw

Input message defining A-law audio format. G.711 audio formats are set to 8kHz. Included in AudioFormat.

Ulaw

Input message defining µ-law audio format. G.711 audio formats are set to 8kHz. Included in AudioFormat.

Opus

Input message defining Opus packet stream decoding parameters. Included in AudioFormat.

| Field | Type | Description |

|---|---|---|

| decode_rate_hz | uint32 | Decoder output rate in Hertz: 0, 8000, 16000. Default 0, meaning 8000. |

| preskip_samples | uint32 | Decoder 48 kHz output samples to skip. |

| source_rate_hz | uint32 | Input source sample rate in Hertz. |

OggOpus

Input message defining Ogg-encapsulated Opus audio stream parameters. Included in AudioFormat.

| Field | Type | Description |

|---|---|---|

| output_rate_hz | uint32 | Decoder output rate in Hertz: 0, 8000, 16000. Default 0, meaning 8000. |

ASRaaS supports the Opus audio format, either raw Opus (RFC 6716) or Ogg-encapsulated Opus (RFC 7845). The recommended encoder settings for Opus for speech recognition are:

- Sampling rate: 16 kHz

- Complexity: 3

- Bitrate: 28kbps recommended (20 kbps minimum)

- Bitrate type: VBR (variable bitrate) or CBR (constant bitrate)

- Packet length: 20ms

- Encoder mode: SILK only mode

- With Ogg encapsulation, the maximum Ogg container delay should be <= 100 ms.

Please note that Opus is a lossy codec, so you should not expect recognition results to be identical to those obtained with PCM audio.
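To get a feel for what the recommended encoder settings mean on the wire, the following sketch estimates the payload size of one packet at a constant bitrate. It is plain arithmetic rather than anything specific to ASRaaS, the function name `cbr_packet_bytes` is hypothetical, and the figure excludes any Ogg container overhead. With VBR, packet sizes vary around this value.

```python
def cbr_packet_bytes(bitrate_bps, packet_ms):
    """Approximate payload bytes per packet for a constant-bitrate stream."""
    # bits per second * seconds per packet / 8 bits per byte
    return bitrate_bps * packet_ms // 8000


recommended = cbr_packet_bytes(28000, 20)  # 28 kbps, 20 ms packets
minimum = cbr_packet_bytes(20000, 20)      # 20 kbps, 20 ms packets
print(recommended, minimum)
```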

EnumUtteranceDetectionMode

Input field specifying how utterances should be detected and transcribed within the audio stream. Included in RecognitionParameters. The default is SINGLE. When the detection mode is DISABLED, the recognition ends only when the client stops sending audio.

| Name | Number | Description |

|---|---|---|

| SINGLE | 0 | Return recognition results for one utterance only, ignoring any trailing audio. Default. |

| MULTIPLE | 1 | Return results for all utterances detected in the audio stream. Does not support RecognitionParameters recognition_timeout_ms. |

| DISABLED | 2 | Return recognition results for all audio provided by the client, without separating it into utterances. The maximum allowed audio length for this detection mode is 30 seconds. Does not support RecognitionParameters no_input_timeout_ms, recognition_timeout_ms, or utterance_end_silence_ms. |
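The restrictions in this table can be captured in a small lookup so that a client can warn about timer parameters that the chosen mode does not support. This is a minimal sketch based only on the table; the names `UNSUPPORTED_TIMERS` and `unsupported_timers` are hypothetical and not part of the ASRaaS API.

```python
# Timer parameters that each detection mode does not support, per the table
UNSUPPORTED_TIMERS = {
    'SINGLE': set(),
    'MULTIPLE': {'recognition_timeout_ms'},
    'DISABLED': {
        'no_input_timeout_ms',
        'recognition_timeout_ms',
        'utterance_end_silence_ms',
    },
}


def unsupported_timers(mode, requested):
    """Return the requested timer names that the mode does not support, sorted."""
    return sorted(UNSUPPORTED_TIMERS[mode] & set(requested))


print(unsupported_timers('MULTIPLE', ['recognition_timeout_ms', 'no_input_timeout_ms']))
```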

The MULTIPLE detection mode detects and recognizes each utterance in the audio stream:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
        result_type = 'PARTIAL',
        utterance_detection_mode = 'MULTIPLE'
    )
)

EnumResultType

Input and output field specifying how results for each utterance are returned.

As input in RecognitionParameters, EnumResultType specifies the desired result type: FINAL (default), PARTIAL, or IMMUTABLE_PARTIAL.

As output in Result, it indicates the actual result type being returned: FINAL, PARTIAL, or NOTIFICATIONS.

- For final results, the result_type field is not returned in Python applications, as FINAL is the default.

- The PARTIAL result type is returned for both partial and immutable partial results.

- NOTIFICATIONS is returned when the service receives a SIGTERM signal, warning of an imminent termination.

For examples, see Results > Result type and Notifications in results.

| Name | Number | Description |

|---|---|---|

| FINAL | 0 | Only the final version of each utterance is returned. Default. |

| PARTIAL | 1 | Variable partial results are returned, followed by a final result. In response (Result), this value is used for partial and immutable partial results. |

| IMMUTABLE_PARTIAL | 2 | Stabilized partial results are returned, followed by a final result. Used in request (RecognitionParameters) only, not in response (Result). |

| NOTIFICATIONS | 3 | In response (Result) only, a pseudo-result that carries only one or more notifications. ASRaaS instances emit a notification when they receive a SIGTERM signal. This warns the client of an imminent termination and the shutdown grace period. Details are returned in the result's notifications field. Shutdown notification results are not tied to any partial or final results that may also be emitted. |
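Because FINAL has the numeric value 0, a simple truthiness test on result_type cannot tell PARTIAL from NOTIFICATIONS. One way a client might label results is with a lookup on the numeric values in the table, as in this sketch. The names `RESULT_TYPE_LABELS` and `result_label` are hypothetical; in a Python client the integer would come from message.result.result_type.

```python
# Numeric values from the EnumResultType table; IMMUTABLE_PARTIAL (2) is
# used in requests only, so it is not expected in a response
RESULT_TYPE_LABELS = {0: 'final', 1: 'partial', 3: 'notifications'}


def result_label(result_type):
    """Map a numeric result type to a printable label."""
    return RESULT_TYPE_LABELS.get(result_type, f'unknown({result_type})')


for value in (0, 1, 3):
    print(value, result_label(value))
```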

In a recognition request, the PARTIAL result type asks ASRaaS to return a stream of partial results, including corrections:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
        result_type = 'PARTIAL',
        utterance_detection_mode = 'MULTIPLE'
    )
)

The response to this request indicates the result type:

result {
  result_type: PARTIAL
  abs_start_ms: 840
  abs_end_ms: 5600
  ...
}

In a recognition response, the NOTIFICATIONS result type reports an imminent shutdown:

result {
  result_type: NOTIFICATIONS
  notifications {
    code: 1005
    severity: SEVERITY_INFO
    message {
      locale: "en-US"
      message: "Imminent shutdown."
      message_resource_id: "1005"
    }
    data {
      key: "timeout_ms"
      value: "10000"
    }
  }
}

RecognitionFlags

Input message containing boolean recognition parameters. Included in RecognitionParameters. The default is false in all cases.

| Field | Type | Description |

|---|---|---|

| auto_punctuate | bool | Whether to add punctuation to the transcription of each utterance, if available for the language. Punctuation such as commas and periods (full stops) is applied based on the grammatical logic of the language, not on pauses in the audio. See Capitalization and punctuation. |

| filter_profanity | bool | Whether to mask known profanities as *** in the result, if available for the language. |

| include_tokenization | bool | Whether to include a tokenized recognition result. |

| stall_timers | bool | Whether to disable the no-input timer. By default, this timer starts when recognition begins. See Timers and timeouts. |

| discard_speaker_adaptation | bool | If speaker profiles are used, whether to discard updated speaker data. By default, data is stored. |

| suppress_call_recording | bool | Whether to redact transcription results in the call logs and disable audio capture. By default, transcription results, audio, and metadata are generated. |

| mask_load_failures | bool | When true, errors loading external resources are not reflected in the Status message and do not terminate recognition. They are still reflected in logs. To set this flag for a specific resource (compiled wordsets only), use mask_load_failures in ResourceReference. |

| suppress_initial_capitalization | bool | When true, the first word in a transcribed utterance is not automatically capitalized. This option does not affect words that are capitalized by definition, such as proper nouns and place names. See Capitalization and punctuation. |

| allow_zero_base_lm_weight | bool | When true, custom resources (DLMs, wordsets, and others) can use the entire weight space, disabling the base LM contribution. By default, the base LM uses at least 10% of the weight space. Even when true, words from the base LM are still recognized, but with lower probability. See Resource weights. |

| filter_wakeup_word | bool | Whether to remove the wakeup word from the final result. This field is ignored in some situations. See Wakeup words. |

| send_multiple_start_of_speech | bool | When true, send a StartOfSpeech message for each detected utterance. By default, StartOfSpeech is sent for first utterance only. |

Recognition flags are set within recognition parameters:

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
        result_type = 'PARTIAL',
        utterance_detection_mode = 'MULTIPLE',
        recognition_flags = RecognitionFlags(
            auto_punctuate = True,
            filter_profanity = True,
            suppress_initial_capitalization = True,
            allow_zero_base_lm_weight = True,
            filter_wakeup_word = True,
            send_multiple_start_of_speech = True
        )
    )
)

Capitalization and punctuation

When suppress_initial_capitalization is true, the first word in each utterance is left uncapitalized unless it’s a proper noun. For example:

final: it's Monday morning and the sun is shining

final: I'm getting ready to walk to the train and commute into work.

final: I'll catch the 757 train from Cedar Park station

final: it will take me an hour to get into town

When auto_punctuate is true, the utterance contains punctuation such as commas and periods, based on the grammar rules of the language. With this feature, the utterance may appear to contain multiple sentences. You’ll notice this effect when utterance_detection_mode is DISABLED, meaning the audio stream is treated as one utterance.

final: There is more snow coming to the Montreal area in the next few days. We're

expecting 10 cm overnight and the winds are blowing hard. Our radar and satellite

pictures show that we're on the western edge of the storm system as it continues

to track further to the east.

When auto_punctuate and suppress_initial_capitalization are both true, the first word in the utterance is not capitalized, but the first word after each sentence-ending punctuation is capitalized as normal. For example:

final: there is more snow coming to the Montreal area in the next few days. We're

expecting 10 cm overnight and the winds are blowing hard. Our radar and satellite

pictures show that we're on the western edge of the storm system as it continues

to track further to the east.

Formatting

Input message specifying how the results are presented, using keywords for formatting types and options supported by the data pack. Included in RecognitionParameters. See Formatted text.

| Field | Type | Description |

|---|---|---|

| scheme | string | Keyword for a formatting type defined in the data pack. |

| options | map<string, bool> | Map of key, value pairs of formatting options and values defined in the data pack. |

This example includes a formatting scheme (date) and several formatting options.

RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-US',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
        result_type = 'IMMUTABLE_PARTIAL',
        utterance_detection_mode = 'MULTIPLE',
        formatting = Formatting(
            scheme = 'date',
            options = {
                'abbreviate_titles': True,
                'abbreviate_units': False,
                'censor_profanities': True,
                'censor_full_words': True
            }
        )
    )
)

ControlMessage

Input message that starts the recognition no-input timer. Included in RecognitionRequest. This setting is only effective if timers were disabled in the recognition request. See Timers and timeouts.

| Field | Type | Description |

|---|---|---|

| start_timers_message | StartTimersControlMessage | Starts the recognition no-input timer. |

StartTimersControlMessage

Input message the client sends when starting the no-input timer. Included in ControlMessage.

RecognitionResource

Input message defining one or more recognition resources (domain LMs, wordsets, etc.) to improve recognition. Included in RecognitionInitMessage.

| Field | Type | Description |

|---|---|---|

| One of: | | |

| external_reference | ResourceReference | The resource is an external file. Mandatory for DLMs, compiled wordsets, speaker profiles, and settings files. |

| inline_wordset | string | Inline wordset JSON resource. Default empty, meaning no inline wordset. See Wordsets for the format. |

| builtin | string | Name of a builtin resource in the data pack. Default empty, meaning no builtins. See Builtins. |

| inline_grammar | string | Inline grammar, SRGS XML format. Default empty, meaning no inline grammar. For Nuance internal use only. |

| wakeup_word | WakeupWord | List of wakeup words. See Wakeup words. |

| One of: | Weight applies to DLMs, builtins, and wordsets. As dictated by gRPC rules, if both weight_enum and weight_value are provided, weight_value takes precedence. See Resource weights. | |

| weight_enum | EnumWeight | Keyword for weight relative to data pack. If DEFAULT_WEIGHT or not supplied, defaults to MEDIUM (0.25) for DLMs and builtins, to LOW (0.1) for wordsets. |

| weight_value | float | Weight relative to data pack as value from 0 to 1. If 0.0 or not supplied, defaults to 0.25 (MEDIUM) for DLMs and builtins, to 0.1 (LOW) for wordsets. |

| reuse | EnumResourceReuse | Whether the resource will be used multiple times. Default LOW_REUSE. |

The RecognitionResource message includes:

RecognitionRequest
    recognition_init_message (RecognitionInitMessage)
        parameters (RecognitionParameters)
        resources (RecognitionResource)
            external_reference (ResourceReference)
            inline_wordset
            builtin
            inline_grammar
            wakeup_word
            weight_enum (EnumWeight): LOWEST to HIGHEST | weight_value
            reuse (EnumResourceReuse): LOW_REUSE|HIGH_REUSE

This RecognitionResource example includes a DLM, two wordsets, and wakeup words:

# Declare a DLM (names-places is the context tag)
travel_dlm = RecognitionResource(
    external_reference = ResourceReference(
        type = 'DOMAIN_LM',
        uri = 'urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA'
    ),
    weight_value = 0.5
)

# Define an inline wordset for an entity in that DLM
places_wordset = RecognitionResource(
    inline_wordset = '{"PLACES":[{"literal":"La Jolla","spoken":["la hoya"]},{"literal":"Llanfairpwllgwyngyll","spoken":["lan vire pool guin gill"]},{"literal":"Abington Pigotts"},{"literal":"Steeple Morden"},{"literal":"Hoyland Common"},{"literal":"Cogenhoe","spoken":["cook no"]},{"literal":"Fordoun","spoken":["forden"]},{"literal":"Llangollen","spoken":["lan-goth-lin","lhan-goth-luhn"]},{"literal":"Auchenblae"}]}',
    weight_value = 0.25
)

# Declare an existing compiled wordset
places_compiled_ws = RecognitionResource(
    external_reference = ResourceReference(
        type = 'COMPILED_WORDSET',
        uri = 'urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr',
        mask_load_failures = True
    ),
    weight_value = 0.25
)

# Define wakeup words
wakeups = RecognitionResource(
    wakeup_word = WakeupWord(
        words = ["Hello Nuance", "Hey Nuance"]
    )
)

# Include resources in RecognitionInitMessage
def client_stream(wf):
    try:
        init = RecognitionInitMessage(
            parameters = RecognitionParameters(. . .),
            resources = [travel_dlm, places_wordset, places_compiled_ws, wakeups]
        )
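Writing the inline wordset JSON by hand is error-prone once the list grows. As an alternative, the string can be generated from a Python dictionary with the standard json module, as in this sketch. The variable names are illustrative and the entries are a subset of the PLACES wordset used in the example.

```python
import json

# Entity name mapped to a list of literals with optional spoken forms
places = {
    'PLACES': [
        {'literal': 'La Jolla', 'spoken': ['la hoya']},
        {'literal': 'Abington Pigotts'},
        {'literal': 'Cogenhoe', 'spoken': ['cook no']},
    ]
}

# Serialize once; the resulting string is what inline_wordset expects
inline_wordset_json = json.dumps(places)
print(inline_wordset_json)
```

The resulting string would then be passed as inline_wordset = inline_wordset_json when declaring the RecognitionResource.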

ResourceReference

Input message for fetching an external DLM or settings file that exists in your Mix project, or for creating or updating a speaker profile. Included in RecognitionResource. See Domain LMs and Speaker profiles.

| Field | Type | Description |

|---|---|---|

| type | EnumResourceType | Resource type. Default UNDEFINED_RESOURCE_TYPE. |

| uri | string | Location of the resource as a URN reference. See URN format. |

| mask_load_failures | bool | Applies to compiled wordsets only. When true, errors loading the wordset are not reflected in the Status message and do not terminate recognition. They are still reflected in logs. To apply this flag to all resources, use mask_load_failures in RecognitionFlags. |

| request_timeout_ms | uint32 | Time to wait when downloading resources. Default (0) means server default, usually 10000ms or 10 seconds. |

| headers | map<string, string> | Map of HTTP cache-control directives, including max-age, max-stale, min-fresh, etc. For example, in Python: headers = {'cache-control': 'max-age=604800, max-stale=3600'} |

This example includes several external references:

# Declare a DLM (names-places is the context tag)
travel_dlm = RecognitionResource(
    external_reference = ResourceReference(
        type = 'DOMAIN_LM',
        uri = 'urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-USA'
    ),
    weight_value = 0.5
)

# Declare a compiled wordset
places_compiled_ws = RecognitionResource(
    external_reference = ResourceReference(
        type = 'COMPILED_WORDSET',
        uri = 'urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr',
        mask_load_failures = True
    ),
    weight_value = 0.25
)

# Declare a settings file
settings = RecognitionResource(
    external_reference = ResourceReference(
        type = 'SETTINGS',
        uri = 'urn:nuance-mix:tag:settings/names-places/asr'
    )
)

# Declare a speaker profile (no URI)
speaker_profile = RecognitionResource(
    external_reference = ResourceReference(
        type = 'SPEAKER_PROFILE'
    )
)

# Include selected resources in recognition
def client_stream(wf):
    try:
        init = RecognitionInitMessage(
            parameters = RecognitionParameters(. . .),
            resources = [travel_dlm, places_compiled_ws, settings, speaker_profile]
        )

WakeupWord

One or more words or phrases that activate the application. Included in RecognitionResource. See the related parameter, RecognitionFlags: filter_wakeup_word. See also Wakeup words.

| Field | Type | Description |

|---|---|---|

| words | repeated string | One or more wakeup words. |

This defines wakeup words for the application and removes them from final results:

# Define wakeup words
wakeups = RecognitionResource(
    wakeup_word = WakeupWord(
        words = ["Hi Dragon", "Hey Dragon", "Yo Dragon"] )
)

# Add wakeups to resource list, filter in final results
def client_stream(wf):
    try:
        init = RecognitionInitMessage(
            parameters = RecognitionParameters(
                ...
                recognition_flags = RecognitionFlags(
                    filter_wakeup_word = True)
            ),
            resources = [travel_dlm, places_wordset, wakeups]
        )

EnumResourceType

Input field defining the content type of an external recognition resource. Included in ResourceReference.

| Name | Number | Description |

|---|---|---|

| UNDEFINED_RESOURCE_TYPE | 0 | Resource type is not specified. Client must always specify a type. |

| WORDSET | 1 | Resource is a plain-text JSON wordset. Not currently supported, although inline_wordset is supported. |

| COMPILED_WORDSET | 2 | Resource is a compiled wordset. See Compiled wordsets. |

| DOMAIN_LM | 3 | Resource is a domain LM. See Domain LMs. |

| SPEAKER_PROFILE | 4 | Resource is a speaker profile in a datastore. See Speaker profiles. |

| GRAMMAR | 5 | Resource is an SRGS XML file. Not currently supported. |

| SETTINGS | 6 | Resource is ASR settings metadata, including the desired data pack version. |

EnumWeight

Input field setting the weight of the resource relative to the data pack, as a keyword. Included in RecognitionResource. See weight_value to specify a numeric value.

See Resource weights.

| Name | Number | Description |

|---|---|---|

| DEFAULT_WEIGHT | 0 | Same effect as MEDIUM for DLMs and builtins, LOW for wordsets. |

| LOWEST | 1 | The resource has minimal influence on the recognition process, equivalent to weight_value 0.05. |

| LOW | 2 | The resource has noticeable influence, equivalent to weight_value 0.1. |

| MEDIUM | 3 | The resource has roughly an equal effect compared to the data pack, equivalent to weight_value 0.25. |

| HIGH | 4 | Words from the resource may be favored over words from the data pack, equivalent to weight_value 0.5. |

| HIGHEST | 5 | The resource has the greatest influence on the recognition, equivalent to weight_value 0.9. |
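The keyword-to-number equivalences and the per-resource defaults can be summarized in a short lookup. The sketch below is an illustration of the rules stated in the tables, not service code: the function `effective_weight` and the resource labels 'dlm', 'builtin', and 'wordset' are hypothetical, and it assumes that a non-zero weight_value takes precedence over weight_enum.

```python
# Numeric equivalents of the EnumWeight keywords, per the table
WEIGHT_VALUES = {
    'LOWEST': 0.05,
    'LOW': 0.1,
    'MEDIUM': 0.25,
    'HIGH': 0.5,
    'HIGHEST': 0.9,
}


def effective_weight(resource_kind, weight_enum='DEFAULT_WEIGHT', weight_value=0.0):
    """Resolve the weight for a resource of kind 'dlm', 'builtin', or 'wordset'."""
    if weight_value:
        # A non-zero numeric weight wins over any keyword
        return weight_value
    if weight_enum == 'DEFAULT_WEIGHT':
        # Defaults: LOW for wordsets, MEDIUM for DLMs and builtins
        weight_enum = 'LOW' if resource_kind == 'wordset' else 'MEDIUM'
    return WEIGHT_VALUES[weight_enum]


print(effective_weight('dlm'), effective_weight('wordset'))
```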

EnumResourceReuse

Input field specifying whether the domain LM or wordset will be used for one or many recognition turns. Included in RecognitionResource.

| Name | Number | Description |

|---|---|---|

| UNDEFINED_REUSE | 0 | Not specified: currently defaults to LOW_REUSE. |

| LOW_REUSE | 1 | The resource will be used for only one recognition turn. |

| HIGH_REUSE | 5 | The resource will be used for a sequence of recognition turns. |

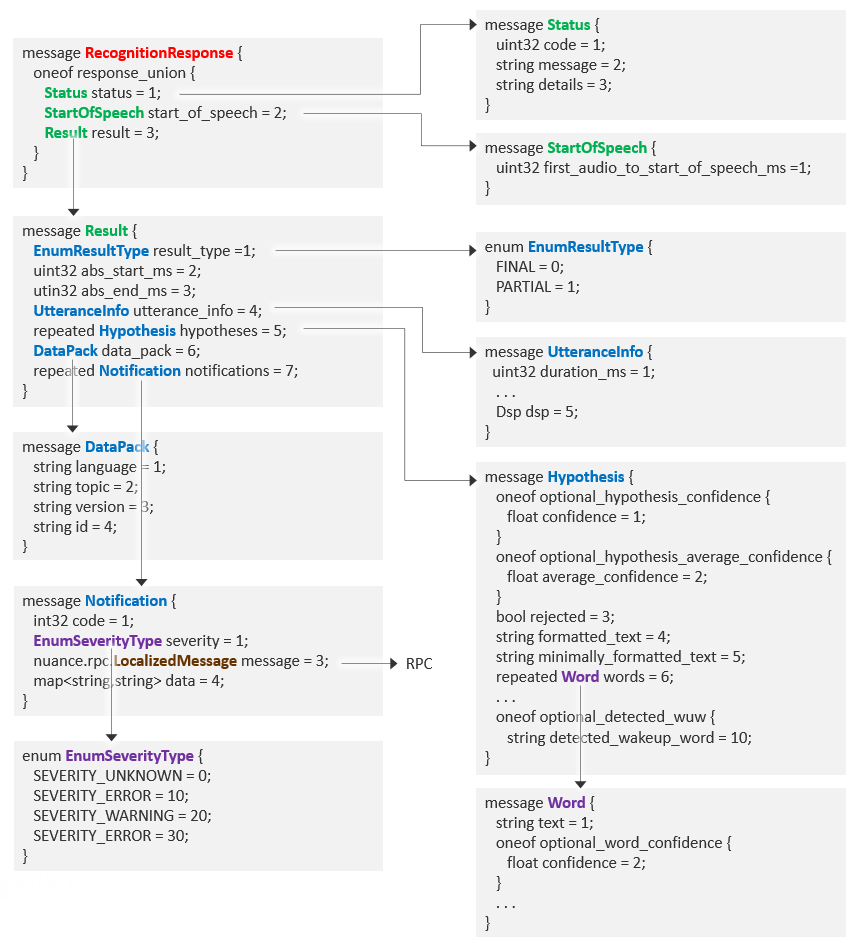

RecognitionResponse

Output stream of messages in response to a recognize request. Included in Recognize method.

| Field | Type | Description |

|---|---|---|

| status | Status | Always the first message returned, indicating whether recognition was initiated successfully. |

| start_of_speech | StartOfSpeech | When speech was detected. |

| result | Result | The partial or final recognition result. A series of partial results may precede the final result. |

The response contains all possible fields of information about the recognized audio, and your application may choose to print all or some fields. The sample application prints only the status and the best hypothesis of each utterance, and other examples also include the data pack version and some DSP information.

Your application may instead print all fields to the user with (in Python) a simple print(message). In this scenario, the results contain the status and start-of-speech information, followed by the result itself, which consists of overall information followed by several hypotheses of the utterance and its words, including confidence scores.

The response depends on two recognition parameters: result_type, which specifies how much of ASRaaS’s internal processing is reflected in the results, and utterance_detection_mode, which determines whether to process all utterances in the audio or just the first one.

The RecognitionResponse message includes:

RecognitionResponse
    status (Status)
        code
        message
        details
    start_of_speech (StartOfSpeech)
        first_audio_to_start_of_speech_ms
    result (Result)
        result_type (EnumResultType): FINAL|PARTIAL|NOTIFICATIONS
        abs_start_ms
        abs_end_ms
        utterance_info (UtteranceInfo)
            duration_ms
            clipping_duration_ms
            dropped_speech_packets
            dropped_nonspeech_packets
            dsp (Dsp)
                digital signal processing results
        hypotheses (Hypothesis)
            confidence
            average_confidence
            rejected
            formatted_text
            minimally_formatted_text
            words (Word)
                text
                confidence
                start_ms
                end_ms
                silence_after_word_ms
                grammar_rule
            encrypted_tokenization
            grammar_id
            detected_wakeup_word
            tokenization (Token)
        data_pack (DataPack)
            language
            topic
            version
            id

This RecognitionResponse example prints selected fields from the results returned from ASRaaS:

try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')

This prints all available fields from the message returned from ASRaaS:

try:
    # Iterate through messages returned from server, returning all information
    for message in stream_in:
        print(message)

Status

Output message indicating the status of the job. Included in RecognitionResponse.

See Status codes for details about the codes. The message and details are developer-facing error messages in English. User-facing messages should be localized by the client based on the status code.

| Field | Type | Description |

|---|---|---|

| code | uint32 | HTTP-style return code: 100, 200, 4xx, or 5xx as appropriate. |

| message | string | Brief description of the status. |

| details | string | Longer description if available. |
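One way a client might localize user-facing messages from the status code is to keep its own catalog keyed by code class and never display the English message or details directly. The sketch below assumes this approach: the `USER_MESSAGES` catalog, its texts, the locale keys, and the `user_message` helper are all hypothetical and not part of the API. Only the code classes (100, 200, 4xx, 5xx) come from the table above.

```python
# Hypothetical client-side catalog: texts and locale keys are illustrative only.
USER_MESSAGES = {
    'en': {
        '1xx': 'Listening...',
        '2xx': 'Done.',
        '4xx': 'The request could not be processed.',
        '5xx': 'The service is unavailable. Please try again later.',
    },
    'fr': {
        '1xx': 'Écoute en cours...',
        '2xx': 'Terminé.',
        '4xx': "La demande n'a pas pu être traitée.",
        '5xx': 'Le service est indisponible. Veuillez réessayer plus tard.',
    },
}


def user_message(code: int, locale: str = 'en') -> str:
    """Return a localized user-facing message for an HTTP-style status code."""
    # Unknown locales fall back to English
    catalog = USER_MESSAGES.get(locale, USER_MESSAGES['en'])
    code_class = f'{code // 100}xx'  # 100 -> '1xx', 404 -> '4xx'
    # Unknown code classes fall back to the generic error text
    return catalog.get(code_class, catalog['5xx'])
```

In a client loop this could be called as `user_message(message.status.code, 'fr')`, while the original `message.status.message` and `message.status.details` go to the developer log.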

This example references and formats status messages:

try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')

The output in this client is:

stream ../audio/weather16.wav

100 Continue - recognition started on audio/l16;rate=16000 stream

final: There is more snow coming to the Montreal area in the next few days

final: We're expecting 10 cm overnight and the winds are blowing hard

final: Our radar and satellite pictures show that we're on the western edge of the storm system as it continues to track further to the east

stream complete

200 Success

The full results look like this:

stream ../audio/weather16.wav
status {
  code: 100
  message: "Continue"
  details: "recognition started on audio/l16;rate=16000 stream"
}
*** Results in here ***
stream complete
status {
  code: 200
  message: "Success"
}

StartOfSpeech

Output message containing the start-of-speech message. Included in RecognitionResponse.

| Field | Type | Description |

|---|---|---|

| first_audio_to_start_of_speech_ms | uint32 | Offset from start of audio stream to start of speech detected. |

By default, a start-of-speech message is sent only for the first utterance in the audio stream. The message is sent for each utterance when:

- RecognitionFlags send_multiple_start_of_speech is true, and

- EnumUtteranceDetectionMode is MULTIPLE, and

- There are several utterances in the audio stream.

The behavior is the same for all result types: FINAL, IMMUTABLE_PARTIAL, and PARTIAL. The start-of-speech message is sent as soon as some speech is detected for the current utterance, before any results.
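The conditions above can be summarized as a small decision function. This is only a sketch of the documented rule, not ASRaaS code: the `start_of_speech_count` helper is hypothetical, the detection mode is passed as a plain string instead of the generated enum, and returning 0 when no utterance is detected is an assumption.

```python
def start_of_speech_count(send_multiple_start_of_speech: bool,
                          utterance_detection_mode: str,
                          utterances_in_audio: int) -> int:
    """Expected number of start_of_speech messages for one audio stream."""
    if utterances_in_audio < 1:
        return 0  # assumption: no speech detected, so no message is sent
    if send_multiple_start_of_speech and utterance_detection_mode == 'MULTIPLE':
        return utterances_in_audio  # one message per utterance
    return 1  # default: first utterance only


print(start_of_speech_count(True, 'MULTIPLE', 3))   # 3
print(start_of_speech_count(False, 'MULTIPLE', 3))  # 1
```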

This example sets utterance detection to MULTIPLE and includes the recognition flag that enables multiple start of speech messages:

init = RecognitionInitMessage(
    parameters = RecognitionParameters(
        language = 'en-us',
        topic = 'GEN',
        audio_format = AudioFormat(pcm=PCM(sample_rate_hz=wf.getframerate())),
        result_type = FINAL,
        utterance_detection_mode = MULTIPLE,
        recognition_flags = RecognitionFlags(
            filter_wakeup_word = True,
            suppress_call_recording = True,
            send_multiple_start_of_speech = True
        ),
    )
)

The output shows the start of speech information for each utterance:

start_of_speech {
  first_audio_to_start_of_speech_ms: 880
}
result {
  abs_start_ms: 880
  abs_end_ms: 5410
  utterance_info {...}
  hypotheses {
    confidence: 0.414000004529953
    average_confidence: 0.8600000143051147
    formatted_text: "There is more snow coming to the Montreal area in the next few days"
    ...
  }
}
start_of_speech {
  first_audio_to_start_of_speech_ms: 5410
}
result {
  abs_start_ms: 5410
  abs_end_ms: 10280
  utterance_info {...}
  hypotheses {
    confidence: 0.1420000046491623
    average_confidence: 0.8790000081062317
    formatted_text: "We\'re expecting 10 cm overnight and the winds are blowing hard"
    ...
  }
}
start_of_speech {
  first_audio_to_start_of_speech_ms: 10280
}
result {
  abs_start_ms: 10280
  abs_end_ms: 18520
  utterance_info {...}
  hypotheses {
    confidence: 0.008999999612569809
    average_confidence: 0.8730000257492065
    formatted_text: "Our radar and satellite pictures show that we\'re on the western edge of the storm system as it continues to track further to the east"
    ...
  }
}

Result

Output message containing the result, including the result type, the start and end times, metadata about the job, and one or more recognition hypotheses. Included in RecognitionResponse. For examples, see Results.

| Field | Type | Description |

|---|---|---|

| result_type | EnumResultType | Whether final results, partial results, or notifications are returned. |

| abs_start_ms | uint32 | Start time of the audio segment that generated this result. Offset, in milliseconds, from the beginning of the audio stream. |

| abs_end_ms | uint32 | End time of the audio segment that generated this result. Offset, in milliseconds, from the beginning of the audio stream. |

| utterance_info | UtteranceInfo | Information about each utterance. |

| hypotheses | repeated Hypothesis | One or more recognition variations. |

| data_pack | DataPack | Data pack information. |

| notifications | Notification | List of errors or warnings that don’t trigger run-time errors, if any. |
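As a rough illustration of working with these fields, the sketch below builds a transcript from the best hypothesis of every final result and skips partial results. It uses stand-in objects rather than the generated protobuf classes so that it runs on its own. The `final_transcript` and `fake_result` helpers are hypothetical, and `FINAL = 0` is an assumption about the enum's numeric value.

```python
from types import SimpleNamespace

FINAL = 0  # assumed numeric value of the FINAL result type


def final_transcript(results) -> str:
    """Join the best hypothesis of every final result, in arrival order."""
    lines = []
    for result in results:
        if result.result_type != FINAL or not result.hypotheses:
            continue  # skip partial results and results without hypotheses
        lines.append(result.hypotheses[0].formatted_text)
    return ' '.join(lines)


def fake_result(result_type, text):
    """Stand-in that mimics the result_type and hypotheses fields of Result."""
    return SimpleNamespace(result_type=result_type,
                           hypotheses=[SimpleNamespace(formatted_text=text)])


results = [
    fake_result(1, 'There is'),                   # partial, ignored
    fake_result(0, 'There is more snow coming'),  # final
    fake_result(0, "We're expecting 10 cm overnight"),
]
print(final_transcript(results))
```

With real responses, the same function could be fed `message.result` for each message where `message.HasField('result')` is true.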

This example prints only the status and the formatted text of the best hypothesis:

try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            ...
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')

This example prints all fields:

try:
    # Iterate through messages returned from server
    for message in stream_in:
        print(message)

See Results and Formatted text for examples of output in different formats. For other examples, see Dsp, Hypothesis, and DataPack.

UtteranceInfo

Output message containing information about the transcribed utterance in the result. Included in Result.

| Field | Type | Description |

|---|---|---|

| duration_ms | uint32 | Utterance duration in milliseconds. |

| clipping_duration_ms | uint32 | Milliseconds of clipping detected. |

| dropped_speech_packets | uint32 | Number of speech audio buffers discarded during processing. |

| dropped_nonspeech_packets | uint32 | Number of non-speech audio buffers discarded during processing. |

| dsp | Dsp | Digital signal processing results. |
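These fields lend themselves to simple audio-quality checks on the client side. The sketch below flags utterances with noticeable clipping or dropped speech audio. The `utterance_warnings` helper and the 5% clipping threshold are hypothetical choices, not values recommended by ASRaaS, and a stand-in object replaces the real UtteranceInfo message.

```python
from types import SimpleNamespace


def utterance_warnings(info, max_clipping_ratio=0.05):
    """List audio-quality warnings for one UtteranceInfo-like object."""
    warnings = []
    # Share of the utterance affected by clipping (guard against zero duration)
    if info.duration_ms and info.clipping_duration_ms / info.duration_ms > max_clipping_ratio:
        warnings.append('clipping')
    if info.dropped_speech_packets:
        warnings.append('dropped speech audio')
    return warnings


# Stand-in for a UtteranceInfo message; the values are made up
info = SimpleNamespace(duration_ms=3310, clipping_duration_ms=400,
                       dropped_speech_packets=0, dropped_nonspeech_packets=0)
print(utterance_warnings(info))
```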

Dsp

Output message containing digital signal processing results. Included in UtteranceInfo.

| Field | Type | Description |

|---|---|---|

| snr_estimate_db | float | The estimated speech-to-noise ratio. |

| level | float | Estimated speech signal level. |

| num_channels | uint32 | Number of channels. Default is 1, meaning mono audio. |

| initial_silence_ms | uint32 | Milliseconds of silence observed before start of utterance. |

| initial_energy | float | Energy feature value of first speech frame. |

| final_energy | float | Energy feature value of last speech frame. |

| mean_energy | float | Average energy feature value of utterance. |

This Dsp example prints the speech signal level and the speech-to-noise ratio:

try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')
            print(f'Speech signal level: {message.result.utterance_info.dsp.level} SNR: {message.result.utterance_info.dsp.snr_estimate_db}')

This output shows the speech signal level and speech-to-noise ratio for each utterance:

stream ../audio/weather16.wav

100 Continue - recognition started on audio/l16;rate=16000 stream

final: There is more snow coming to the Montreal area in the next few days

Speech signal level: 20993.0 SNR: 15.0

final: We're expecting 10 cm overnight and the winds are blowing hard

Speech signal level: 18433.0 SNR: 15.0

final: Radar and satellite pictures show that we're on the western edge of the storm system as it continues to track further to the east

Speech signal level: 21505.0 SNR: 14.0

stream complete

200 Success

Hypothesis

Output message containing one or more proposed transcripts of the audio stream. Included in Result. Each variation has its own confidence level along with the text in two levels of formatting. See Formatted text.

| Field | Type | Description |

|---|---|---|

| confidence | float | The confidence score for the entire result, 0 to 1. |

| average_confidence | float | The confidence score for the hypothesis, 0 to 1: the average of all word confidence scores based on their duration. |

| rejected | bool | Whether the hypothesis was rejected or accepted. |

| formatted_text | string | Formatted text of the result, for example, $500. Formatting is controlled by formatting schemes and options. See Formatted text. |

| minimally_formatted_text | string | Slightly formatted text of the result, for example, Five hundred dollars. Words are spelled out, but basic capitalization and punctuation are included. See the formatting scheme, all_as_words. |

| words | repeated Word | One or more recognized words in the result. |

| encrypted_tokenization | string | Nuance-internal representation of the recognition result. Not returned when result originates from a grammar. Activated by RecognitionFlags: include_tokenization. |

| grammar_id | string | Identifier of the matching grammar, as grammar_0, grammar_1, etc. representing the order the grammars were provided as resources. Returned when result originates from an SRGS grammar rather than generic dictation. |

| detected_wakeup_word | string | The detected wakeup word when using a wakeup word resource in RecognitionResource. See Wakeup words. |

| tokenization | repeated Token | Nuance-internal representation of the recognition result in plain form. Not used in Krypton ASR v4. |
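The description of average_confidence suggests a duration-weighted mean of the word confidence scores. The sketch below shows one plausible reading of that description. The exact formula ASRaaS uses is not given here, so treat `duration_weighted_confidence` as a hypothetical illustration, run on made-up stand-in words.

```python
from types import SimpleNamespace


def duration_weighted_confidence(words) -> float:
    """Average of word confidences, weighted by each word's duration in ms."""
    total_ms = sum(w.end_ms - w.start_ms for w in words)
    if total_ms == 0:
        return 0.0  # no timed words: nothing to average
    weighted = sum(w.confidence * (w.end_ms - w.start_ms) for w in words)
    return weighted / total_ms


# Stand-ins for Word messages: a short confident word and a long uncertain one
words = [
    SimpleNamespace(confidence=1.0, start_ms=0, end_ms=100),
    SimpleNamespace(confidence=0.5, start_ms=100, end_ms=400),
]
print(duration_weighted_confidence(words))  # 0.625
```

The longer word pulls the average toward its score: (1.0 × 100 + 0.5 × 300) / 400 = 0.625, compared with 0.75 for an unweighted mean.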

This hypothesis example prints formatted_text, average_confidence, and whether the utterance was rejected (False means it was accepted):

try:
    # Iterate through messages returned from server
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')
            print(f'Average confidence: {message.result.hypotheses[0].average_confidence} Rejected? {message.result.hypotheses[0].rejected}')

This output shows the formatted text lines, including abbreviations such as “10 cm”:

stream ../audio/weather16.wav

100 Continue - recognition started on audio/l16;rate=16000 stream

final: There is more snow coming to the Montreal area in the next few days

Average confidence: 0.4129999876022339 Rejected? False

final: We're expecting 10 cm overnight and the winds are blowing hard

Average confidence: 0.7960000038146973 Rejected? False

final: Radar and satellite pictures show that we're on the western edge of the storm system as it continues to track further to the east

Average confidence: 0.6150000095367432 Rejected? False

stream complete

200 Success

Word

Output message containing one or more recognized words in the hypothesis, including the text, confidence score, and timing information. Included in Hypothesis.

| Field | Type | Description |

|---|---|---|

| text | string | The recognized word. |

| confidence | float | The confidence score of the recognized word, 0 to 1. |

| start_ms | uint32 | Start time of the word. Offset, in milliseconds, from the beginning of the current audio segment (abs_start_ms). |

| end_ms | uint32 | End time of the word. Offset, in milliseconds, from the beginning of the current audio segment (abs_start_ms). |

| silence_after_word_ms | uint32 | The amount of silence, in ms, detected after the word. |

| grammar_rule | string | The grammar rule that recognized the word text. Returned when result originates from an SRGS grammar rather than generic dictation. |
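Because start_ms and end_ms are described as offsets from abs_start_ms, a client that needs word positions relative to the whole audio stream can add the two. The sketch below assumes exactly that relationship. The `absolute_word_times` helper is hypothetical, and the stand-in objects and their timing values are made up for illustration.

```python
from types import SimpleNamespace


def absolute_word_times(result):
    """Return (text, start, end) per word, in ms from the start of the audio stream."""
    offset = result.abs_start_ms
    best = result.hypotheses[0]  # the first hypothesis is treated as the best one
    return [(w.text, offset + w.start_ms, offset + w.end_ms) for w in best.words]


# Stand-in for a Result with one Hypothesis and two Word messages
result = SimpleNamespace(
    abs_start_ms=1000,
    hypotheses=[SimpleNamespace(words=[
        SimpleNamespace(text='Pay', start_ms=180, end_ms=300),
        SimpleNamespace(text='my', start_ms=300, end_ms=600),
    ])],
)
print(absolute_word_times(result))
# [('Pay', 1180, 1300), ('my', 1300, 1600)]
```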

This output example shows the words in an utterance about paying a credit card:

hypotheses {
  confidence: 0.8019999861717224
  average_confidence: 0.9599999785423279
  formatted_text: "Pay my Visa card from my checking account tomorrow for $10"
  minimally_formatted_text: "Pay my Visa card from my checking account tomorrow for ten dollars"
  words {
    text: "Pay"
    confidence: 0.8579999804496765
    start_ms: 2180
    end_ms: 2300
  }
  words {
    text: "my"
    confidence: 0.9610000252723694
    start_ms: 2300
    end_ms: 2600
  }
  words {
    text: "Visa"
    confidence: 0.9490000009536743
    start_ms: 2600
    end_ms: 3040
  }
  words {
    text: "card"
    confidence: 0.9679999947547913
    start_ms: 3040
    end_ms: 3440
  }
  words {
    text: "from"
    confidence: 0.9649999737739563
    start_ms: 3440
    end_ms: 3720
  }
  words {
    text: "my"
    confidence: 0.9869999885559082
    start_ms: 3720
    end_ms: 3840
  }
  words {
    text: "checking"
    confidence: 0.9900000095367432
    start_ms: 3840
    end_ms: 4320
  }
  words {
    text: "account"
    confidence: 0.9739999771118164
    start_ms: 4320
    end_ms: 4780
    silence_after_word_ms: 120
  }
  words {
    text: "tomorrow"
    confidence: 0.9509999752044678
    start_ms: 4900
    end_ms: 5320
  }
  words {
    text: "for"
    confidence: 0.8980000019073486
    start_ms: 5320
    end_ms: 5600
  }
  words {
    text: "$10"
    confidence: 0.9700000286102295
    start_ms: 5600
    end_ms: 6360
  }
}

Token

Output message containing plain text tokenization information. Included in Hypothesis. Not used in Krypton ASR v4.

DataPack

Output message containing information about the current data pack. Included in Result.

| Field | Type | Description |

|---|---|---|

| language | string | Language of the data pack. |

| topic | string | Topic of the data pack. |

| version | string | Version of the data pack. |

| id | string | Identifier string of the data pack, including nightly update information if a nightly build was loaded. |

This DataPack example uses the dp_displayed flag to print the data pack language and version once, with the first final result:

try:
    # Iterate through messages returned from server
    dp_displayed = False
    for message in stream_in:
        if message.HasField('status'):
            if message.status.details:
                print(f'{message.status.code} {message.status.message} - {message.status.details}')
            else:
                print(f'{message.status.code} {message.status.message}')
        elif message.HasField('result'):
            restype = 'partial' if message.result.result_type else 'final'
            if restype == 'final' and not dp_displayed:
                print(f'Data pack: {message.result.data_pack.language} {message.result.data_pack.version}')
                dp_displayed = True
            print(f'{restype}: {message.result.hypotheses[0].formatted_text}')

The output includes the language and version of the data pack:

stream ../audio/monday_morning_16.wav

100 Continue - recognition started on audio/l16;rate=16000 stream

Data pack: eng-USA 4.2.0

final: It's Monday morning and the sun is shining

final: I'm getting ready to walk to the train and and commute into work

final: I'll catch the 757 train from Cedar Park station

final: It will take me an hour to get into town

stream complete

200 Success

In the full response, the data pack information is returned within each result.

See Results: All fields.

result {
  abs_start_ms: 1020
  abs_end_ms: 4330
  utterance_info {
    duration_ms: 3310
    dsp {
      snr_estimate_db: 39
      level: 9217
      num_channels: 1
      initial_silence_ms: 220
      initial_energy: -51.201
      final_energy: -76.437
      mean_energy: 146.309
    }
  }
  hypotheses {
    confidence: 0.788
    average_confidence: 0.967
    formatted_text: "It\'s Monday morning and the sun is shining."
    minimally_formatted_text: "It\'s Monday morning and the sun is shining."
    words {
      *** Individual words in the hypothesis ***
    }
  }
  data_pack {
    language: "eng-USA"
    topic: "GEN"
    version: "4.12.1"
    id: "GMT20231026205000"
  }
}

Notification

Output message containing a notification structure. Notifications can be warnings or information about the recognition process or alerts about an upcoming shutdown. Included in Result.

| Field | Type | Description |

|---|---|---|

| code | uint32 | Notification unique code. |

| severity | EnumSeverityType | Severity of the notification. |

| message | nuance.rpc.LocalizedMessage | The notification message in the local language. |

| data | map<string, string> | Map of additional key, value pairs related to the notification. |

In this example, a notification warning is returned because the DLM and its associated compiled wordset were created using different data pack versions.

result: {
  result_type: PARTIAL
  abs_start_ms: 160
  abs_end_ms: 3510
  hypotheses: [
    *** Hypotheses here ***
  ],
  data_pack: {
    language: "eng-USA"
    topic: "GEN"
    version: "4.11.1"
    id: "GMT20230830154712"
  }
  notifications: [
    {
      code: 1002
      severity: SEVERITY_WARNING
      message: {
        locale: "en-US"
        message: "Wordset-pkg should be recompiled."
        message_resource_id: 1002
      }
      data: {
        application/x-nuance-wordset-pkg: "urn:nuance-mix:tag:wordset:lang/names-places/places-compiled-ws/eng-USA/mix.asr"
        application/x-nuance-domainlm: "urn:nuance-mix:tag:model/names-places/mix.asr?=language=eng-usa"
      }
    }
  ]
}

EnumSeverityType

Output field specifying a notification’s severity. Included in Notification.

| Name | Number | Description |

|---|---|---|

| SEVERITY_UNKNOWN | 0 | The notification has an unknown severity. Default. |

| SEVERITY_ERROR | 10 | The notification is an error message. |

| SEVERITY_WARNING | 20 | The notification is a warning message. |

| SEVERITY_INFO | 30 | The notification is an information message. |
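A client that logs notifications may want to translate these severities into its own logging levels. The sketch below maps the numeric values from the table above to Python's standard logging levels. The `SEVERITY_TO_LOG_LEVEL` mapping and the `log_notification` helper are hypothetical, and logging SEVERITY_UNKNOWN at INFO level is a design choice rather than documented behavior.

```python
import logging

# Keys are the EnumSeverityType numbers from the table above.
SEVERITY_TO_LOG_LEVEL = {
    0: logging.INFO,      # SEVERITY_UNKNOWN: treated as INFO here (a design choice)
    10: logging.ERROR,    # SEVERITY_ERROR
    20: logging.WARNING,  # SEVERITY_WARNING
    30: logging.INFO,     # SEVERITY_INFO
}


def log_notification(logger, code: int, severity: int, text: str) -> int:
    """Log one notification and return the logging level that was used."""
    level = SEVERITY_TO_LOG_LEVEL.get(severity, logging.INFO)
    logger.log(level, 'notification %d: %s', code, text)
    return level


logging.basicConfig(level=logging.INFO)
log_notification(logging.getLogger('asr'), 1002, 20, 'Wordset-pkg should be recompiled.')
```

An explicit mapping is needed because the two numbering schemes differ: 10 means SEVERITY_ERROR here but DEBUG in Python's logging module.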

Scalar value types

The data types in the proto files are mapped to equivalent types in the generated client stub files.
