Dialog service gRPC API

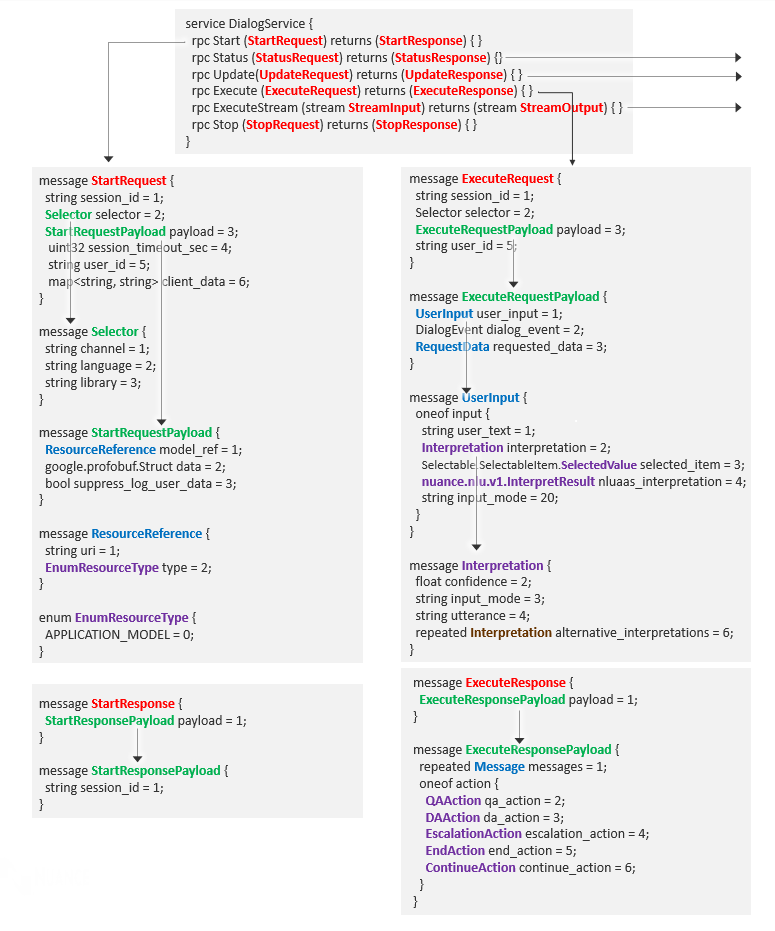

DLGaaS provides three protocol buffer (.proto) files to define the Dialog service for gRPC:

- The dlg_interface.proto file defines the main DialogService interface methods.

- The dlg_messages.proto file defines the request and response messages for the main DialogService methods.

- The dlg_common_messages.proto file defines other objects used in the fields of messages.

Once you have transformed the proto files into functions and classes in your programming language using gRPC tools, you can call these functions from your client application to start a conversation with a user, collect the user’s input, obtain the action to perform, and so on.

See Client app development for a scenario using Python that provides an overview of the different methods and messages used in a sample order coffee application.

For other languages, consult the gRPC and protocol buffer documentation.

Tip:

Try out this API using a Sample Python runtime client.

Proto file structure

The proto files define the methods and message types for the API.

DialogService

The Dialog service contains six methods related to starting, executing, managing, and closing a conversation flow or dialog.

| Name | Request Type | Response Type | Description |
|---|---|---|---|
| Start | StartRequest | StartResponse | Starts a conversation. Returns a StartResponse object. |
| Status | StatusRequest | StatusResponse | Returns the status of a session: gRPC status 0 (OK) if the session is found, 5 (NOT_FOUND) if it is not. Returns a StatusResponse object. |
| Update | UpdateRequest | UpdateResponse | Updates the state of a session, for example session variables, without advancing the conversation. Returns an UpdateResponse object. |
| Execute | ExecuteRequest | ExecuteResponse | Advances the conversation based on end-user input or events. Returns an ExecuteResponse object containing data about the dialog interactions, which the client uses to interact with the end user. |
| ExecuteStream | StreamInput stream | StreamOutput stream | Performs recognition on streamed audio using ASRaaS and provides speech synthesis using TTSaaS. |
| Stop | StopRequest | StopResponse | Ends a conversation and performs cleanup. Returns a StopResponse object. |
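
The method table implies a typical call order: Start once, Execute repeatedly until the response carries an end action, then Stop. The sketch below exercises that loop against `FakeDialogStub`, a hypothetical in-memory stand-in for the generated stub (it is not part of DLGaaS). Requests and responses are plain dicts that mirror the message fields described in this section, the model URN is a placeholder, and sending an empty payload on the first Execute is an assumption of this sketch.

```python
from typing import Any, Callable, Dict, List

Msg = Dict[str, Any]
MODEL_URN = "urn:nuance-mix:tag:model/MY_APP/mix.dialog"  # hypothetical placeholder


class FakeDialogStub:
    """Hypothetical stand-in for a generated DialogService stub.

    It replays scripted ExecuteResponse payloads so the loop runs offline.
    """

    def __init__(self, scripted_payloads: List[Msg]) -> None:
        self._payloads = list(scripted_payloads)
        self.calls: List[str] = []

    def Start(self, request: Msg) -> Msg:
        self.calls.append("Start")
        return {"payload": {"session_id": request.get("session_id") or "generated-id"}}

    def Execute(self, request: Msg) -> Msg:
        self.calls.append("Execute")
        return {"payload": self._payloads.pop(0)}

    def Stop(self, request: Msg) -> Msg:
        self.calls.append("Stop")
        return {}  # StopResponse is currently empty


def visual_texts(message: Msg) -> List[str]:
    """Collect the visual text entries of a Message dict."""
    return [v.get("text", "") for v in message.get("visual", [])]


def run_session(stub: FakeDialogStub, get_input: Callable[[Msg], str]) -> List[str]:
    """Start a session, call Execute until an end_action arrives, then Stop."""
    start = stub.Start({
        "selector": {"channel": "default", "language": "en-US"},
        "payload": {"model_ref": {"uri": MODEL_URN, "type": "APPLICATION_MODEL"}},
    })
    session_id = start["payload"]["session_id"]
    transcript: List[str] = []
    request: Msg = {"session_id": session_id, "payload": {}}
    try:
        while True:
            payload = stub.Execute(request)["payload"]
            for message in payload.get("messages", []):
                transcript.extend(visual_texts(message))
            if "end_action" in payload:
                break
            if "qa_action" in payload:
                qa = payload["qa_action"]
                transcript.extend(visual_texts(qa.get("message", {})))
                request = {"session_id": session_id,
                           "payload": {"user_input": {"user_text": get_input(qa)}}}
            else:
                # Other actions (da_action, continue_action, ...) are not handled here.
                request = {"session_id": session_id, "payload": {}}
    finally:
        stub.Stop({"session_id": session_id})  # always clean up the session
    return transcript
```

With real generated code, the same loop would call the corresponding methods on the gRPC stub built from dlg_interface.proto.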

StartRequest

Request object used by the Start method.

| Field | Type | Description |
|---|---|---|
| session_id | string | Optional session ID. If not provided, one is generated. |
| selector | common.Selector | Selector providing the channel and language used for the conversation. |
| payload | common.StartRequestPayload | Payload of the Start request. |
| session_timeout_sec | uint32 | Session idle timeout limit (in seconds), after which the session is terminated. Maximum of 259200 (72 hours). The maximum is configurable in self-hosted deployments. |
| user_id | string | Identifies a specific user within the application. See User ID. |
| client_data | map<string,string> | Optional. Map of client-supplied key-value pairs to inject into the call log. Example: `"client_data": { "param1": "value1", "param2": "value2" }` |

This message includes:

- StartRequest
  - session_id
  - selector
    - channel
    - language
    - library
  - payload
    - model_ref
      - uri
      - type
    - data
    - suppress_log_user_data
  - session_timeout_sec
  - user_id
  - client_data
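
A StartRequest can be assembled and checked before it is sent. The sketch below builds a dict mirroring the fields above, omits session_id so the service generates one, and enforces the documented 72-hour timeout ceiling (adjustable for self-hosted deployments). The function name, the placeholder URN, and the choice to omit session_timeout_sec when it is not given are assumptions of this sketch.

```python
from typing import Dict, Optional

MAX_SESSION_TIMEOUT_SEC = 259_200  # 72 hours, the documented hosted maximum


def build_start_request(model_urn: str, channel: str, language: str,
                        session_timeout_sec: Optional[int] = None,
                        user_id: Optional[str] = None,
                        client_data: Optional[Dict[str, str]] = None,
                        max_timeout_sec: int = MAX_SESSION_TIMEOUT_SEC) -> dict:
    """Return a dict mirroring StartRequest (hypothetical helper)."""
    request: dict = {
        "selector": {"channel": channel, "language": language, "library": "default"},
        "payload": {"model_ref": {"uri": model_urn, "type": "APPLICATION_MODEL"}},
    }
    # session_id is deliberately omitted so that the service generates one.
    if session_timeout_sec is not None:
        if not 0 < session_timeout_sec <= max_timeout_sec:
            raise ValueError(f"session_timeout_sec must be in 1..{max_timeout_sec}")
        request["session_timeout_sec"] = session_timeout_sec
    if user_id:
        request["user_id"] = user_id
    if client_data:
        # client_data is map<string,string>: reject non-string keys or values.
        if not all(isinstance(k, str) and isinstance(v, str) for k, v in client_data.items()):
            raise TypeError("client_data keys and values must be strings")
        request["client_data"] = dict(client_data)
    return request
```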

StartResponse

Response object used by the Start method.

| Field | Type | Description |
|---|---|---|
| payload | common.StartResponsePayload | Payload of the Start response. Contains the session ID. |

This message includes:

- StartResponse
  - payload
    - session_id

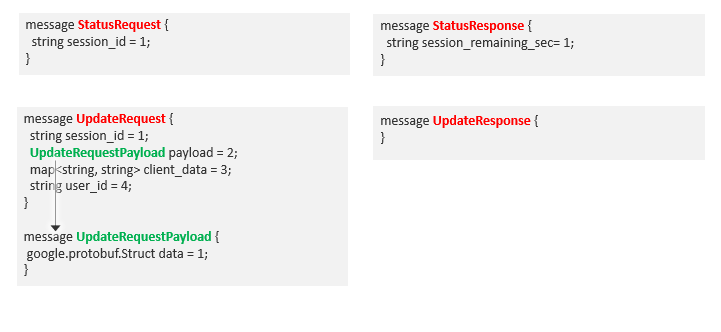

StatusRequest

Request object used by the Status method. For more information about the Status method, see Step 5. Check session status.

| Field | Type | Description |
|---|---|---|
| session_id | string | ID for the session. |

This message includes:

- StatusRequest
  - session_id

StatusResponse

Response object used by the Status method.

| Field | Type | Description |
|---|---|---|
| session_remaining_sec | uint32 | Remaining session time to live (TTL), in seconds, after which the session is terminated. Note: The TTL may be off by a few seconds, depending on the round-trip time of the request. |

This message includes:

- StatusResponse
  - session_remaining_sec
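
A client typically turns a Status call into one of two answers: the session is gone, or it has roughly N seconds left. The sketch below maps the standard gRPC codes 0 (OK) and 5 (NOT_FOUND) accordingly and subtracts a small safety margin because the reported TTL can be a few seconds off. The helper name and the 5-second default margin are assumptions; with grpcio, the code of a failed call would come from the raised `grpc.RpcError`.

```python
from typing import Optional

GRPC_OK = 0
GRPC_NOT_FOUND = 5


def remaining_session_seconds(status_code: int, response: Optional[dict],
                              safety_margin_sec: int = 5) -> Optional[int]:
    """Return None if the session is gone, else a conservative remaining TTL."""
    if status_code == GRPC_NOT_FOUND:
        return None  # no session with this ID (expired, stopped, or never started)
    if status_code != GRPC_OK:
        raise RuntimeError(f"Status call failed with gRPC code {status_code}")
    remaining = (response or {}).get("session_remaining_sec", 0)
    # The TTL may be a few seconds off, so err on the side of expiring early.
    return max(remaining - safety_margin_sec, 0)
```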

UpdateRequest

Request object used by the Update method. For more information about the Update method, see Step 6. Update session data.

| Field | Type | Description |
|---|---|---|
| session_id | string | ID for the session. |
| payload | common.UpdateRequestPayload | Payload of the Update request. |
| client_data | map<string,string> | Map of client-supplied key-value pairs to inject into the call log. Optional. Example: `"client_data": { "param1": "value1", "param2": "value2" }` |
| user_id | string | Identifies a specific user within the application. See User ID. |

This message includes:

- UpdateRequest
  - session_id
  - payload
  - client_data
  - user_id

UpdateResponse

Response object used by the Update method. Currently empty.

This message includes:

- UpdateResponse

ExecuteRequest

Request object used by the Execute method.

| Field | Type | Description |
|---|---|---|
| session_id | string | ID for the session. |
| selector | common.Selector | Selector providing the channel and language used for the conversation. |
| payload | common.ExecuteRequestPayload | Payload of the Execute request. |
| user_id | string | Identifies a specific user within the application. See User ID. |

This message includes:

- ExecuteRequest
  - session_id
  - selector
    - channel
    - language
    - library
  - payload
    - user_input
      - user_text
      - interpretation
        - confidence
        - input_mode
        - utterance
        - data
          - key
          - value
        - slot_literals
          - key
          - value
        - slot_formatted_literals
          - key
          - value
        - slot_confidences
          - key
          - value
        - alternative_interpretations
      - selected_item
        - id
        - value
      - nluaas_interpretation
      - asraas_result
      - input_mode
    - dialog_event
      - type
      - message
      - event_name
    - requested_data
      - id
      - data
  - user_id
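
Each Execute turn carries either user input or a dialog event. Because an event takes precedence and any accompanying input is ignored, the sketch below refuses to build a request that mixes the two, and also assumes one kind of user input (text or a selected item) per turn. It returns a dict mirroring ExecuteRequest; the helper name is hypothetical.

```python
from typing import Optional


def build_execute_request(session_id: str, *, user_text: Optional[str] = None,
                          selected_item: Optional[dict] = None,
                          dialog_event: Optional[dict] = None,
                          user_id: Optional[str] = None) -> dict:
    """Return a dict mirroring ExecuteRequest for one dialog turn."""
    if user_text is not None and selected_item is not None:
        raise ValueError("send either user_text or selected_item, not both")
    user_input: dict = {}
    if user_text is not None:
        user_input["user_text"] = user_text
    if selected_item is not None:
        user_input["selected_item"] = selected_item  # {"id": entity, "value": value}
    payload: dict = {}
    if user_input:
        payload["user_input"] = user_input
    if dialog_event is not None:
        # An event would override the input on the server, so reject the mix here.
        if user_input:
            raise ValueError("send either a dialog_event or user_input, not both")
        payload["dialog_event"] = dialog_event
    request = {"session_id": session_id, "payload": payload}
    if user_id:
        request["user_id"] = user_id
    return request
```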

ExecuteResponse

Response object used by the Execute method. This object carries a payload, which instructs the client app to play messages to the user (as needed) and do one of the following:

- Prompt for user input

- Provide requested data

- Fill time and keep the user engaged while the server side is fetching data

- Transfer or end the conversation

The payload also includes references to ASR, NLU, TTS, and NR resources that the client can use to orchestrate these services externally, instead of having Dialog perform the orchestration.

| Field | Type | Description |
|---|---|---|
| payload | common.ExecuteResponsePayload | Payload of the Execute response. |

This message includes:

- ExecuteResponse
  - payload
    - messages
      - nlg
        - text
        - mask
        - barge_in_disabled
      - visual
        - text
        - mask
        - barge_in_disabled
      - audio
        - text
        - uri
        - mask
        - barge_in_disabled
      - view
        - id
        - name
      - language
      - tts_parameters
        - voice
      - channel
    - qa_action
      - message
        - nlg
          - text
          - mask
          - barge_in_disabled
        - visual
          - text
          - mask
          - barge_in_disabled
        - audio
          - text
          - uri
          - mask
          - barge_in_disabled
        - view
          - id
          - name
        - language
        - tts_parameters
          - voice
        - channel
      - data
      - view
        - id
        - name
      - selectable
        - selectable_items
          - value
            - id
            - value
          - description
          - display_text
          - display_image_uri
      - recognition_settings
        - dtmf_mappings
        - collection_settings
        - speech_settings
        - dtmf_settings
        - input_modes
      - mask
      - orchestration_resource_reference
        - grammar_references
        - recognition_resources
        - interpretation_resources
      - recognition_init_resources
        - recognition_init_message
        - recognition_init
        - dtmf_recognition_init
      - language
    - da_action
      - id
      - message
        - nlg
          - text
          - mask
          - barge_in_disabled
        - visual
          - text
          - mask
          - barge_in_disabled
        - audio
          - text
          - uri
          - mask
          - barge_in_disabled
        - view
          - id
          - name
        - language
        - tts_parameters
          - voice
        - channel
      - view
        - id
        - name
      - message_settings
        - delay
        - minimum
      - data
    - escalation_action
      - message
        - nlg
          - text
          - mask
          - barge_in_disabled
        - visual
          - text
          - mask
          - barge_in_disabled
        - audio
          - text
          - uri
          - mask
          - barge_in_disabled
        - view
          - id
          - name
        - language
        - tts_parameters
          - voice
        - channel
      - view
        - id
        - name
      - data
      - id
      - escalation_settings
        - type
        - destination
    - end_action
      - data
      - id
    - continue_action
      - message
        - nlg
          - text
          - mask
          - barge_in_disabled
        - visual
          - text
          - mask
          - barge_in_disabled
        - audio
          - text
          - uri
          - mask
          - barge_in_disabled
        - view
          - id
          - name
        - language
        - tts_parameters
          - voice
        - channel
      - message_settings
        - delay
        - minimum
      - backend_connection_settings
        - fetch_timeout
        - connect_timeout
      - view
        - id
        - name
      - data
      - id
    - channel

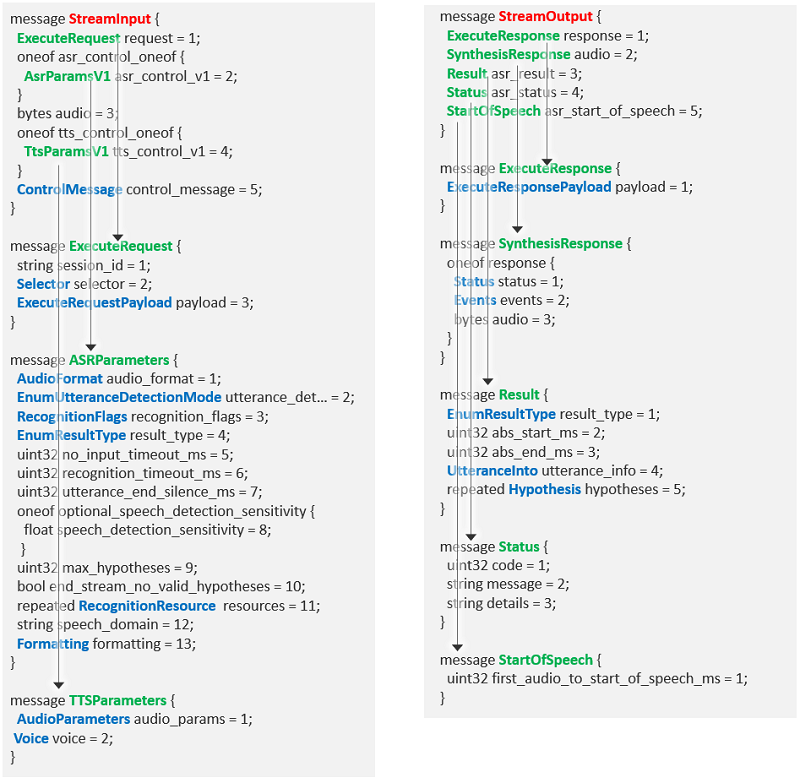

StreamInput

Input stream message for the ExecuteStream method, which performs recognition on streamed audio using ASRaaS and requests speech synthesis using TTSaaS.

Warning:

You can send an input audio stream for recognition only if input was requested by the dialog application in a question and answer node. You cannot use StreamInput to provide audio input if the previous response did not include a question and answer action. See Step 4b. Interact with the user using audio for details.

The field asr_control_v1 (and control_message, if applicable) must be sent as part of the first StreamInput message so that DLGaaS can chain the audio stream with ASRaaS. Audio is streamed in order, segment by segment, over the course of the various StreamInput messages.

| Field | Type | Description |
|---|---|---|
| request | ExecuteRequest | Standard DLGaaS ExecuteRequest, used to continue the dialog interactions. Used on the first StreamInput only. |
| asr_control_v1 | AsrParamsV1 | Defines audio recognition parameters to be forwarded to the ASR service to initiate audio streaming. The contents of this message correspond to those of the recognition_init_message field used in the first message of the ASR input stream. Used on the first StreamInput only. |
| audio | bytes | A segment of the input speech audio in the selected encoding for recognition. |
| tts_control_v1 | TtsParamsV1 | Parameters to be forwarded to the TTS service. |
| control_message | nuance.asr.v1.ControlMessage | Optional input message to be forwarded to the ASR service. This corresponds to the optional control_message field used in the first message of the ASR input stream. ASR uses this message to start the recognition no-input timer if it was disabled by a stall_timers recognition flag in asr_control_v1. See the ASRaaS RecognitionRequest documentation for details. Used on the first StreamInput only. |

This message includes:

- StreamInput
  - request: Standard DLGaaS ExecuteRequest
  - asr_control_v1
    - audio_format
      - pcm | alaw | ulaw | opus | ogg_opus
    - utterance_detection_mode
      - SINGLE | MULTIPLE | DISABLED
    - recognition_flags
      - auto_punctuate
      - filter_profanity
      - include_tokenization
      - stall_timers
      - etc.
    - result_type
    - no_input_timeout_ms
    - recognition_timeout_ms
    - utterance_end_silence_ms
    - speech_detection_sensitivity
    - max_hypotheses
    - end_stream_no_valid_hypotheses
    - resources
    - speech_domain
    - formatting
  - audio
  - tts_control_v1
    - audio_params
      - audio_format
      - volume_percentage
      - speaking_rate_percentage
      - and so on
    - voice
      - name
      - model
      - and so on
  - control_message
    - start_timers_message
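
The ordering rules above can be expressed as a generator of StreamInput-shaped dicts: the first message carries request and asr_control_v1 (plus control_message when used), and audio follows segment by segment, in order. Sending no audio in the first message, the 16 kHz 16-bit mono PCM input, the 20 ms segment size, and the `pcm`/`sample_rate_hz` shape of audio_format are all assumptions of this sketch; tts_control_v1 is omitted. At those settings one segment is 16000 × 0.020 × 2 = 640 bytes.

```python
from typing import Iterator, Optional

SAMPLE_RATE_HZ = 16_000  # assumption: 16 kHz mono linear PCM
BYTES_PER_SAMPLE = 2     # assumption: 16-bit samples
CHUNK_MS = 20            # assumption: 20 ms segments


def chunk_size_bytes(sample_rate_hz: int = SAMPLE_RATE_HZ, chunk_ms: int = CHUNK_MS,
                     bytes_per_sample: int = BYTES_PER_SAMPLE) -> int:
    """Bytes per audio segment: samples per segment times bytes per sample."""
    return sample_rate_hz * chunk_ms // 1000 * bytes_per_sample


def stream_inputs(execute_request: dict, asr_control_v1: dict, audio: bytes,
                  control_message: Optional[dict] = None,
                  chunk_bytes: Optional[int] = None) -> Iterator[dict]:
    """Yield dicts mirroring StreamInput messages for ExecuteStream."""
    first = {"request": execute_request, "asr_control_v1": asr_control_v1}
    if control_message is not None:
        first["control_message"] = control_message  # first message only
    yield first
    size = chunk_bytes or chunk_size_bytes()
    for offset in range(0, len(audio), size):
        yield {"audio": audio[offset:offset + size]}  # segments, in order
```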

StreamOutput

Streams the requested TTS output and returns ASR results.

| Field | Type | Description |
|---|---|---|
| response | ExecuteResponse | Standard DLGaaS ExecuteResponse; used to continue the dialog interactions. Used on the first StreamOutput only. |
| audio | nuance.tts.v1.SynthesisResponse | TTS output. See the TTSaaS SynthesisResponse documentation for details. |
| asr_result | nuance.asr.v1.Result | Output message containing the transcription result, including the result type, the start and end times, metadata about the transcription, and one or more transcription hypotheses. See the ASRaaS Result documentation for details. Used on the first StreamOutput only. |
| asr_status | nuance.asr.v1.Status | Output message indicating the status of the transcription. See the ASRaaS Status documentation for details. Used on the first StreamOutput only. |
| asr_start_of_speech | nuance.asr.v1.StartOfSpeech | Output message containing the start-of-speech message. See the ASRaaS StartOfSpeech documentation for details. Used on the first StreamOutput only. |

This message includes:

- StreamOutput
  - response: Standard DLGaaS ExecuteResponse
  - audio
  - asr_result
  - asr_status
  - asr_start_of_speech

StopRequest

Request object used by the Stop method.

| Field | Type | Description |
|---|---|---|
| session_id | string | ID for the session. |
| user_id | string | Identifies a specific user within the application. See User ID. |

This message includes:

- StopRequest
  - session_id
  - user_id

StopResponse

Response object used by the Stop method. Currently empty; reserved for future use.

This message includes:

- StopResponse

Fields reference

The following section contains additional details about the message types of fields used in the request and response messages.

AsrParamsV1

Parameters to be forwarded to the ASR service. See Step 4b. Interact with the user using audio for details.

| Field | Type | Description |
|---|---|---|
| audio_format | nuance.asr.v1.AudioFormat | Audio codec type and sample rate. See the ASRaaS AudioFormat documentation for details. |
| utterance_detection_mode | nuance.asr.v1.EnumUtteranceDetectionMode | How the end of an utterance is determined. Defaults to SINGLE. See the ASRaaS EnumUtteranceDetectionMode documentation for details. |
| recognition_flags | nuance.asr.v1.RecognitionFlags | Flags to fine-tune recognition. See the ASRaaS RecognitionFlags documentation for details. |
| result_type | nuance.asr.v1.EnumResultType | Whether final, partial, or immutable results are returned. See the ASRaaS EnumResultType documentation for details. |
| no_input_timeout_ms | uint32 | Maximum silence, in milliseconds, allowed while waiting for user input after recognition timers are started. Default (0) means the server default, usually no timeout. See the ASRaaS Timers documentation for details. |
| recognition_timeout_ms | uint32 | Maximum duration, in milliseconds, of a recognition turn. Default (0) means the server default, usually no timeout. See the ASRaaS Timers documentation for details. |
| utterance_end_silence_ms | uint32 | Minimum silence, in milliseconds, that determines the end of an utterance. Default (0) means the server default, usually 500 (half a second). See the ASRaaS Timers documentation for details. |
| speech_detection_sensitivity | float | A balance between detecting speech and noise (breathing, etc.), from 0 to 1. 0 means ignore all noise, 1 means interpret all noise as speech. Default is 0.5. See the ASRaaS Timers documentation for details. |
| max_hypotheses | uint32 | Maximum number of n-best hypotheses to return. Default (0) means the server default, usually 10 hypotheses. |
| end_stream_no_valid_hypotheses | bool | Determines whether the dialog application or the client application handles the dialog flow when ASRaaS does not return a valid hypothesis. When set to false (default), the dialog flow is determined by the Mix.dialog application, according to the processing defined for the NO_INPUT and NO_MATCH events. To close the stream when ASRaaS does not return a valid hypothesis, set to true. See Client handling of ASR no valid hypotheses for details. |
| resources | nuance.asr.v1.RecognitionResource | Repeated. Resources (DLMs, wordsets, builtins) to improve recognition. See the ASRaaS RecognitionResource documentation for details. |
| speech_domain | string | Mapping to internal weight sets for language models in the data pack. Values depend on the data pack. |
| formatting | nuance.asr.v1.Formatting | Specifies how the transcription results are presented, using keywords for formatting schemes and options supported by the data pack. See ASRaaS Formatting for details. |
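
Several AsrParamsV1 fields have constrained ranges or a "0 means server default" convention, which a client can check before streaming. The sketch below builds a dict mirroring a subset of the fields, validates utterance_detection_mode and speech_detection_sensitivity, and sets the stall_timers recognition flag on request (in which case a control_message would be needed later to start the timers). The helper name, the subset of fields, and the `pcm`/`sample_rate_hz` audio_format shape are assumptions.

```python
from typing import Optional

DETECTION_MODES = ("SINGLE", "MULTIPLE", "DISABLED")


def build_asr_params(sample_rate_hz: int = 16_000,
                     utterance_detection_mode: str = "SINGLE",
                     no_input_timeout_ms: int = 0,
                     utterance_end_silence_ms: int = 0,
                     speech_detection_sensitivity: Optional[float] = None,
                     stall_timers: bool = False,
                     end_stream_no_valid_hypotheses: bool = False) -> dict:
    """Return a dict mirroring part of AsrParamsV1; 0 timers mean the server default."""
    if utterance_detection_mode not in DETECTION_MODES:
        raise ValueError(f"unknown utterance_detection_mode: {utterance_detection_mode}")
    for name, value in (("no_input_timeout_ms", no_input_timeout_ms),
                        ("utterance_end_silence_ms", utterance_end_silence_ms)):
        if value < 0:
            raise ValueError(f"{name} is uint32 and cannot be negative")
    params = {
        "audio_format": {"pcm": {"sample_rate_hz": sample_rate_hz}},
        "utterance_detection_mode": utterance_detection_mode,
        "no_input_timeout_ms": no_input_timeout_ms,
        "utterance_end_silence_ms": utterance_end_silence_ms,
        "end_stream_no_valid_hypotheses": end_stream_no_valid_hypotheses,
    }
    if speech_detection_sensitivity is not None:
        if not 0.0 <= speech_detection_sensitivity <= 1.0:
            raise ValueError("speech_detection_sensitivity must be between 0 and 1")
        params["speech_detection_sensitivity"] = speech_detection_sensitivity
    if stall_timers:
        # Timers stay stopped until a control_message starts them.
        params["recognition_flags"] = {"stall_timers": True}
    return params
```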

BackendConnectionSettings

Settings configured for a data access node backend connection.

| Field | Type | Description |
|---|---|---|
| fetch_timeout | string | Number of milliseconds allowed for fetching the data before timing out. |
| connect_timeout | string | Connect timeout in milliseconds. |

ContinueAction

Continue action provides the client application with information useful for handling latency or delays involved with a data access node using a backend data connection. The continue action prompts the client application to respond so that the data access can be initiated. See Continue actions for more detail.

| Field | Type | Description |
|---|---|---|
| message | Message | Latency message to be played to the user while waiting for the backend data access. |
| view | View | View details for this action. |
| data | google.protobuf.Struct | Map of data exchanged in this node. |
| id | string | ID identifying the Continue action node in the dialog application. |
| message_settings | MessageSettings | Settings to be used along with messages returned to the present user. |
| backend_connection_settings | BackendConnectionSettings | Backend settings that will be used by DLGaaS for connecting to and fetching from the backend. |

DAAction

A Data Access action is associated with a Data access node using client-side data access. It provides the client application with data needed to perform the data access as well as a message to play to the user while waiting for the data access to complete. See Data access actions for more detail.

| Field | Type | Description |
|---|---|---|
| id | string | ID identifying the Data Access node in the dialog application. |
| message | Message | Message to be played to the user while waiting for the data access to complete. |
| view | View | View details for this action. |
| data | google.protobuf.Struct | Map of data exchanged in this node. |
| message_settings | MessageSettings | Settings to be used along with messages played to the present user. |

DialogEvent

Message used to indicate an event that occurred during the dialog interactions.

| Field | Type | Description |
|---|---|---|
| type | DialogEvent.EventType | Type of event being triggered. |
| message | string | Optional message providing additional information about the event. |
| event_name | string | Name of custom event. Must be set to the name of the custom event defined in Mix.dialog. See Handling events for details. Applies only when DialogEvent.EventType is set to CUSTOM. |

DialogEvent.EventType

The possible event types that can occur on the client side of interactions.

| Name | Number | Description |
|---|---|---|
| SUCCESS | 0 | Everything went as expected. |
| ERROR | 1 | An unexpected problem occurred. |
| NO_INPUT | 2 | End user has not provided any input. |
| NO_MATCH | 3 | End user provided unrecognizable input. |
| HANGUP | 4 | The end user session has been terminated by the user. This event is used both for IVR (caller hangup) and for digital channels (for example the user disconnecting from a chat session). In Mix.dialog, this event type triggers a UserDisconnect event. |
| CUSTOM | 5 | Custom event. You must set field event_name in DialogEvent to the name of the custom event defined in Mix.dialog. |
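
The CUSTOM rule above is easy to violate silently, so a client can enforce it when building the event. The sketch mirrors the EventType numbers from the table in a Python `IntEnum` and returns a dict mirroring DialogEvent. The helper name and the example event name "CheckBalance" are hypothetical; the custom name must match an event defined in your Mix.dialog project.

```python
from enum import IntEnum
from typing import Optional


class EventType(IntEnum):
    """Mirrors the DialogEvent.EventType names and numbers."""
    SUCCESS = 0
    ERROR = 1
    NO_INPUT = 2
    NO_MATCH = 3
    HANGUP = 4
    CUSTOM = 5


def build_dialog_event(event_type: EventType, message: Optional[str] = None,
                       event_name: Optional[str] = None) -> dict:
    """Return a dict mirroring DialogEvent, enforcing the event_name rule."""
    if event_type == EventType.CUSTOM and not event_name:
        raise ValueError("CUSTOM events require event_name")
    if event_type != EventType.CUSTOM and event_name:
        raise ValueError("event_name applies only to CUSTOM events")
    event = {"type": event_type.name}
    if message:
        event["message"] = message
    if event_name:
        event["event_name"] = event_name
    return event
```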

EndAction

End action, indicating that the dialog has ended. See End actions for more detail.

| Field | Type | Description |
|---|---|---|
| data | google.protobuf.Struct | Map of data exchanged in this node. |
| id | string | ID identifying the End Action node in the dialog application. |

EscalationAction

Escalation action to be performed by the client application. See Transfer actions for more detail.

| Field | Type | Description |
|---|---|---|
| message | Message | Message to be played as part of the escalation action. |
| view | View | View details for this action. |
| data | google.protobuf.Struct | Map of data exchanged in this node. |
| id | string | ID identifying the External Action node in the dialog application. |
| escalation_settings | EscalationSettings | Settings to configure the escalation transfer. |

EscalationSettings

Settings to configure a transfer of the dialog, for example, to a live agent.

| Field | Type | Description |
|---|---|---|
| type | string | Type of escalation transfer. Values can include “blind” and “route-request”. Empty if not set. |
| destination | string | Optional, provided if a specific transfer type is set. Destination for the transfer, for example, a phone number. |

ExecuteRequestPayload

Payload sent with the Execute request. If both an event and a user input are provided, the event has precedence. For example, if an error event is provided, the input will be ignored.

| Field | Type | Description |
|---|---|---|
| user_input | UserInput | Input provided to the Dialog engine. |
| dialog_event | DialogEvent | Used to pass in events that can drive the flow. Optional; if an event is not passed, the operation is assumed to be successful. |
| requested_data | RequestData | Data that was previously requested by the engine. |

ExecuteResponsePayload

Payload returned after the Execute method is called. Specifies the action to be performed by the client application.

| Field | Type | Description |
|---|---|---|
| messages | Message | Repeated. Message action to be performed by the client application. |
| One of: | | |
| qa_action | QAAction | Question and answer action to be performed by the client application. |
| da_action | DAAction | Data access action to be performed by the client application for a data access node that uses a client-side data connection. |
| escalation_action | EscalationAction | Escalation action to be performed by the client application. |
| end_action | EndAction | End action to be performed by the client application. |
| continue_action | ContinueAction | Continue action to be performed by the client application for a data access node that uses a server-side data connection. |
| channel | string | Active channel for the action. |
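
Because the five action fields form a "one of" group, a client usually starts by finding which one is set and branching on it. The sketch does this on a dict mirroring ExecuteResponsePayload and maps each action to a short description of the next client step; those descriptions summarize this section and are illustrative, not an exhaustive contract. With generated Python protobuf classes, `WhichOneof()` gives the same answer, but the oneof's name is not listed in this reference, so check the .proto file for it.

```python
from typing import Tuple

ACTION_FIELDS = ("qa_action", "da_action", "escalation_action", "end_action", "continue_action")


def select_action(payload: dict) -> Tuple[str, dict]:
    """Return (field_name, action) for the single action set in the payload."""
    present = [name for name in ACTION_FIELDS if name in payload]
    if len(present) != 1:
        raise ValueError(f"expected exactly one action, found {present}")
    name = present[0]
    return name, payload[name]


def next_step(payload: dict) -> str:
    """Describe what this hypothetical client does after playing any messages."""
    name, action = select_action(payload)
    steps = {
        "qa_action": "collect user input, then call Execute with user_input",
        "da_action": f"fetch data for node {action.get('id')}, then call Execute with requested_data",
        "continue_action": "play the latency message, then call Execute so the server can fetch data",
        "escalation_action": "transfer the user, for example to a live agent",
        "end_action": "end the conversation and call Stop",
    }
    return steps[name]
```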

Message

Specifies the message to be played to the user. See Message actions for details.

| Field | Type | Description |
|---|---|---|
| nlg | Message.Nlg | Repeated. Text to be played using text to speech. |
| visual | Message.Visual | Repeated. Text to be displayed to the user (for example, in a chat). |
| audio | Message.Audio | Repeated. Prompt to be played from an audio file. |
| view | View | View details for this message. |
| language | string | Message language in xx-XX format. For example, en-US. |
| tts_parameters | TTSParameters | Voice parameters for TTS to be used when TTSaaS is orchestrated separately from DLGaaS. |
| channel | string | Active channel for the message. |

Message.Audio

Message audio details.

| Field | Type | Description |
|---|---|---|
| text | string | Text to be used as TTS backup if the audio file cannot be played. |
| uri | string | URI to the audio file, in the format `<language>/prompts/<library>/<channel>/<filename>?version=<version>`. For example: `en-US/prompts/default/Omni_Channel_VA/Message_ini_01.wav?version=1.0_1602096507331`. See To provide speech response using recorded audio for more details on how the filename portion is generated. |
| mask | bool | When set to true, indicates that the text contains sensitive data that will be masked in logs. |
| barge_in_disabled | bool | When set to true, indicates that barge-in is disabled. |
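
Since the audio URI has a fixed layout and the text field is a TTS fallback, a client can split the URI into its parts and decide whether to play a recorded file or synthesize the text. The sketch uses the standard `urllib.parse` functions; the `available` set, which stands for whatever prompt files the client can actually play, and both helper names are hypothetical.

```python
from typing import NamedTuple, Set, Tuple
from urllib.parse import parse_qs, urlsplit


class AudioPromptRef(NamedTuple):
    language: str
    library: str
    channel: str
    filename: str
    version: str


def parse_audio_uri(uri: str) -> AudioPromptRef:
    """Split <language>/prompts/<library>/<channel>/<filename>?version=<version>."""
    parts = urlsplit(uri)
    segments = parts.path.split("/")
    if len(segments) != 5 or segments[1] != "prompts":
        raise ValueError(f"unexpected audio URI layout: {uri}")
    language, _, library, channel, filename = segments
    version = parse_qs(parts.query).get("version", [""])[0]
    return AudioPromptRef(language, library, channel, filename, version)


def choose_playback(audio: dict, available: Set[str]) -> Tuple[str, str]:
    """Play the recorded file if the client has it; otherwise fall back to TTS of text."""
    ref = parse_audio_uri(audio["uri"])
    if ref.filename in available:
        return ("file", ref.filename)
    return ("tts", audio.get("text", ""))
```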

Message.TTSParameters

Message TTS parameters.

| Field | Type | Description |
|---|---|---|
| voice | Voice | TTSaaS voice to be used. |

Message.TTSParameters.Voice

Message TTS voice details.

| Field | Type | Description |
|---|---|---|
| name | string | The voice’s name, for example ‘Evan’. Mandatory for SynthesisRequest. |
| model | string | The voice’s quality model, for example ‘standard’ or ‘enhanced’. Mandatory for SynthesisRequest. |
| gender | EnumGender | Voice gender. Default ANY for SynthesisRequest. |
| language | string | Language associated with the voice in xx-XX format, for example en-US. |
| voice_type | string | TTS voice type, for example ‘neural’ or ‘standard’. Identifies the TTS engine to use. |

Message.TTSParameters.Voice.EnumGender

TTSaaS voice gender.

| Name | Number | Description |
|---|---|---|
| ANY | 0 | Any gender voice. Default for SynthesisRequest. |
| MALE | 1 | Male voice. |
| FEMALE | 2 | Female voice. |
| NEUTRAL | 3 | Neutral gender voice. |

Message.Nlg

Text for text to speech.

| Field | Type | Description |
|---|---|---|
| text | string | Text to be played using text to speech. |
| mask | bool | When set to true, indicates that the text contains sensitive data that will be masked in logs. |
| barge_in_disabled | bool | When set to true, indicates that barge-in is disabled. |

Message.Visual

Text to be displayed to the user.

| Field | Type | Description |
|---|---|---|
| text | string | Text to be displayed to the user (for example, in a chat). |
| mask | bool | When set to true, indicates that the text contains sensitive data that will be masked in logs. |
| barge_in_disabled | bool | When set to true, indicates that barge-in is disabled. |
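
The mask flag on nlg, visual, and audio entries marks sensitive text. A client that keeps its own logs might honor it the same way; the sketch below flattens a dict mirroring Message into log lines and replaces masked text with a placeholder. The helper name and the `***` placeholder are assumptions, and the text is still delivered to the user unchanged.

```python
from typing import List

MASK = "***"  # assumption: placeholder used in this client's own logs


def loggable_texts(message: dict) -> List[str]:
    """Collect nlg, visual, and audio text from a Message dict, hiding masked entries."""
    lines: List[str] = []
    for kind in ("nlg", "visual", "audio"):
        for item in message.get(kind, []):
            text = MASK if item.get("mask") else item.get("text", "")
            lines.append(f"{kind}: {text}")
    return lines
```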

MessageSettings

Settings to be used with latency messages returned by DAAction or ContinueAction.

| Field | Type | Description |
|---|---|---|
| delay | string | Time, in milliseconds, to wait before presenting the message to the user. |
| minimum | string | Minimum time, in milliseconds, to display or play the message to the user. |
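
This reference does not spell out how a client should combine delay and minimum. One plausible reading, assumed here rather than taken from the documentation, is: skip the latency message if the data arrives before delay elapses; otherwise start it at delay and keep it up for at least minimum milliseconds before moving on. The sketch also assumes both strings hold plain integers such as "1000", and that the fetch duration is known, which is a simplification for illustration.

```python
def plan_latency_message(delay_ms: str, minimum_ms: str, fetch_ms: int) -> dict:
    """Plan a latency message timeline under the interpretation described above."""
    delay = int(delay_ms or 0)      # MessageSettings values arrive as strings
    minimum = int(minimum_ms or 0)
    if fetch_ms <= delay:
        # Data arrived before the message was due: skip it entirely.
        return {"play_message": False, "proceed_at_ms": fetch_ms}
    # Message starts at `delay` and stays up for at least `minimum` ms.
    return {"play_message": True, "start_at_ms": delay,
            "proceed_at_ms": max(fetch_ms, delay + minimum)}
```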

QAAction

Question and answer action to be performed by the client application. See Question and answer actions for more details.

| Field | Type | Description |
|---|---|---|
| message | Message | Message to be played as part of the question and answer action. |
| data | google.protobuf.Struct | Map of data exchanged in this node. |
| view | View | View details for this action. |
| selectable | Selectable | Interactive elements to be displayed by the client app, such as clickable buttons or links. See Interactive elements for details. |
| recognition_settings | RecognitionSettings | Configuration information to be used during recognition when handled externally. |
| mask | bool | When set to true, indicates that the question and answer node is marked on the node level as sensitive, or is meant to collect an entity that will hold sensitive data to be masked in logs. Also true when logs are suppressed globally. |
| orchestration_resource_reference | OrchestrationResourceReference | References to ASR/NLU/NR resources to support external orchestration. |
| recognition_init_resources | RecognitionInitResources | ASR/NR parameters to configure speech or DTMF recognition. Dialog service does not populate this field in responses. |
| language | string | Language expected for user input. |

RecognitionSettings

Configuration information to be used during recognition when handled externally.

| Field | Type | Description |
|---|---|---|
| dtmf_mappings | DtmfMapping | Repeated. DTMF mappings configured in Mix.dialog. |
| collection_settings | CollectionSettings | Collection settings configured in Mix.dialog. |
| speech_settings | SpeechSettings | Speech settings configured in Mix.dialog. |
| dtmf_settings | DtmfSettings | DTMF settings configured in Mix.dialog. |
| input_modes | string | Repeated. Input modes configured in Mix.dialog for the presently selected channel. Possible values are “text”, “interactivity”, “voice”, or “dtmf”. |

RecognitionSettings.CollectionSettings

Collection settings configured in Mix.dialog.

| Field | Type | Description |
|---|---|---|
| timeout | string | Time, in milliseconds, to wait for speech once a prompt has finished playing before throwing a NO_INPUT event. |
| complete_timeout | string | Duration of silence, in milliseconds, to determine that the user has finished speaking. The timer starts when the recognizer has a well-formed hypothesis. |
| incomplete_timeout | string | Duration of silence, in milliseconds, to determine that the user has finished speaking. The timer starts when the user stops speaking. |
| max_speech_timeout | string | Maximum duration, in milliseconds, of an utterance collected from the user. |

RecognitionSettings.DtmfMapping

DTMF mappings configured in Mix.dialog. See Set DTMF mappings for details.

| Field | Type | Description |
|---|---|---|
| id | string | Name of the entity to which the DTMF mapping applies. |
| value | string | Entity value to map to a DTMF key. |
| dtmf_key | string | DTMF key associated with this entity value. Valid values are `0`-`9`, `*`, and `#`. |
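
When recognition is handled externally, a client needs to turn a pressed key back into the entity value configured in Mix.dialog. The sketch indexes a list of dicts mirroring DtmfMapping by dtmf_key and rejects keys outside the documented set. Treating a key mapped twice as a configuration error is a choice of this sketch; the helper name and the `COFFEE_SIZE` entity in the usage are hypothetical.

```python
from typing import Dict, List, Tuple

VALID_DTMF_KEYS = set("0123456789*#")


def dtmf_lookup(mappings: List[dict]) -> Dict[str, Tuple[str, str]]:
    """Index DtmfMapping dicts as dtmf_key -> (entity id, entity value)."""
    table: Dict[str, Tuple[str, str]] = {}
    for m in mappings:
        key = m["dtmf_key"]
        if key not in VALID_DTMF_KEYS:
            raise ValueError(f"invalid DTMF key {key!r}")
        if key in table:
            raise ValueError(f"DTMF key {key!r} mapped twice")
        table[key] = (m["id"], m["value"])
    return table
```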

RecognitionSettings.DtmfSettings

DTMF settings configured in Mix.dialog.

| Field | Type | Description |
|---|---|---|
| inter_digit_timeout | string | Maximum time, in milliseconds, allowed between each DTMF character entered by the user. |
| term_timeout | string | Maximum time, in milliseconds, to wait for an additional DTMF character before terminating the input. |
| term_char | string | Character that terminates a DTMF input. |

RecognitionSettings.SpeechSettings

Speech settings configured in Mix.dialog.

| Field | Type | Description |
|---|---|---|
| sensitivity | string | Level of sensitivity to speech. 1.0 means highly sensitive to quiet input, while 0.0 means least sensitive to noise. |
| barge_in_type | string | Barge-in type; possible values: “speech” (interrupt a prompt by using any word) and “hotword” (interrupt a prompt by using a specific hotword). |
| speed_vs_accuracy | string | Desired balance between speed and accuracy. 0.0 means fastest recognition, while 1.0 means best accuracy. |

RequestData

Data that was requested by the dialog application.

| Field | Type | Description |
|---|---|---|
| id | string | ID used by the dialog application to identify which node requested the data. |
| data | google.protobuf.Struct | Map of keys to JSON objects of the data requested. |

ResourceReference

Reference object of the resource to use for the request (for example, URN or URL of the model).

| Field | Type | Description |
|---|---|---|
| uri | string | Reference (for example, the URL or URN for the Dialog model). |
| type | ResourceReference.EnumResourceType | Type of resource. |

ResourceReference.EnumResourceType

Resource reference resource type enum.

| Name | Number | Description |
|---|---|---|
| APPLICATION_MODEL | 0 | Dialog application model. |

Selectable

Interactive elements to be displayed by the client app, such as clickable buttons or links. See Interactive elements for details.

| Field | Type | Description |
|---|---|---|
| selectable_items | Selectable.SelectableItem | Repeated. Ordered list of interactive elements. |

Selectable.SelectableItem

Selectable item details.

| Field | Type | Description |
|---|---|---|
| value | Selectable.SelectableItem.SelectedValue | Key-value pair of entity information (name and value) for the interactive element. A selected key-value pair is passed in an ExecuteRequest when the user interacts with the element. |
| description | string | Description of the interactive element. |
| display_text | string | Label to display for this interactive element. |
| display_image_uri | string | URI of image to display for this interactive element. |

Selectable.SelectableItem.SelectedValue

Selectable item value.

| Field | Type | Description |
|---|---|---|
| id | string | Name of the entity being collected. |
| value | string | Entity value corresponding to the interactive element. |
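
When the user clicks an interactive element, the item's value (entity id and value) goes back in the next ExecuteRequest as user_input.selected_item. The sketch finds the clicked item by its display_text and builds that request as a dict. Matching on the label is an assumption that suits simple UIs; a real client would more likely keep a reference to the rendered item. Both helper names and the `COFFEE_TYPE` entity are hypothetical.

```python
from typing import Optional


def item_for_label(selectable: dict, label: str) -> Optional[dict]:
    """Find the SelectableItem whose display_text matches the clicked label."""
    for item in selectable.get("selectable_items", []):
        if item.get("display_text") == label:
            return item
    return None


def selection_request(session_id: str, selectable: dict, label: str) -> dict:
    """Build an ExecuteRequest dict reporting which interactive element was chosen."""
    item = item_for_label(selectable, label)
    if item is None:
        raise KeyError(f"no selectable item labelled {label!r}")
    # The item's id/value pair is passed back as user_input.selected_item.
    return {"session_id": session_id,
            "payload": {"user_input": {"selected_item": dict(item["value"])}}}
```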

OrchestrationResourceReference

References to orchestration resources for ASR, NLU, and Nuance Recognizer (NR).

| Field | Type | Description |
|---|---|---|
| grammar_references | OrchestrationResourceReference.GrammarResourceReference | Repeated. Nuance Recognizer resource references. |
| recognition_resources | nuance.asr.v1.RecognitionResource | Repeated. ASR resource references. |
| interpretation_resources | nuance.nlu.v1.InterpretationResource | Repeated. NLU resource references. |

OrchestrationResourceReference.GrammarResourceReference

Reference to Nuance Recognizer grammar.

| Field | Type | Description |
|---|---|---|
| uri | string | Reference (for example, the URL or URN). |
| type | OrchestrationResourceReference.GrammarResourceReference.EnumResourceType | Type of resource. |

OrchestrationResourceReference.GrammarResourceReference.EnumResourceType

Nuance Recognizer grammar type.

| Name | Number | Description |
|---|---|---|
| SPEECH_GRAMMAR | 0 | SRGS grammar for speech (XML, GRAM, and so on). |
| DTMF_GRAMMAR | 1 | SRGS grammar for DTMF (XML, GRAM, and so on). |
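The grammar type numbers can be mirrored in client code. This sketch uses a hypothetical IntEnum and dict-based messages; the grammar URIs are placeholders. The enum is written by name, as the proto3 JSON mapping does, so a dict like this could be parsed into generated classes with google.protobuf.json_format.ParseDict.

```python
from enum import IntEnum
from typing import Any


class GrammarType(IntEnum):
    """Mirrors OrchestrationResourceReference.GrammarResourceReference.EnumResourceType."""
    SPEECH_GRAMMAR = 0
    DTMF_GRAMMAR = 1


def grammar_reference(uri: str, grammar_type: GrammarType) -> dict[str, Any]:
    """One GrammarResourceReference: where the grammar lives and what kind it is."""
    # Enums are written by name, as in the proto3 JSON mapping.
    return {"uri": uri, "type": grammar_type.name}


# Placeholder URIs for illustration only.
orchestration_refs = {
    "grammar_references": [
        grammar_reference("https://example.com/grammars/size.grxml", GrammarType.SPEECH_GRAMMAR),
        grammar_reference("https://example.com/grammars/size_dtmf.grxml", GrammarType.DTMF_GRAMMAR),
    ]
}
```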

RecognitionInitResources

RecognitionInitResources is used to pass ASR and NR parameters to configure speech or DTMF recognition. The Dialog service does not populate this message in responses.

| Field | Type | Description |
|---|---|---|
| recognition_init_message | nuance.asr.v1.RecognitionInitMessage | ASR parameters and resources to configure speech recognition. The Dialog service does not populate this field in responses. See the RecognitionInitMessage documentation for details. |
| recognition_init | nuance.nrc.v1.RecognitionInit | NR parameters and resources to configure speech recognition. The Dialog service does not populate this field in responses. See the RecognitionInit documentation for details. |
| dtmf_recognition_init | nuance.nrc.v1.DTMFRecognitionInit | NR parameters and resources to configure DTMF recognition. The Dialog service does not populate this field in responses. See the DTMFRecognitionInit documentation for details. |

Selector

Provides channel and language used for the conversation. See Languages, channels, and modalities for details.

| Field | Type | Description |
|---|---|---|
| channel | string | Optional. Channel that this conversation is going to use (for example, WebVA). Note: Replace any spaces or slashes in the channel name with the underscore character (_). |
| language | string | Optional. Language to use for this conversation. This sets the language session variable. The format is xx-XX, for example, “en-US”. |
| library | string | Optional. Library to use for this conversation. Advanced customization reserved for future use. For now, always use the default value, which is “default”. |
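A minimal sketch of building a Selector that applies the channel-name rule and checks the xx-XX language format. The make_selector helper and the channel name are hypothetical, and the strict two-letter pattern simply follows the format stated for the language field.

```python
import re

# The language field uses the xx-XX format, for example en-US.
LANGUAGE_FORMAT = re.compile(r"[a-z]{2}-[A-Z]{2}")


def make_selector(channel: str, language: str, library: str = "default") -> dict[str, str]:
    """Build a Selector-shaped dict following the documented naming rules."""
    # Spaces and slashes in the channel name are replaced with underscores.
    safe_channel = re.sub(r"[ /]", "_", channel)
    if not LANGUAGE_FORMAT.fullmatch(language):
        raise ValueError(f"language must use the xx-XX format, got {language!r}")
    return {"channel": safe_channel, "language": language, "library": library}


selector = make_selector("Web VA/Chat", "en-US")
print(selector)
```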

StartRequestPayload

Payload sent with the Start request.

| Field | Type | Description |
|---|---|---|
| model_ref | ResourceReference | Reference object for the Dialog model. |
| data | google.protobuf.Struct | Session variables data sent in the request as a map of key-value pairs. |
| suppress_log_user_data | bool | Set to true to disable logging for ASR, NLU, TTS, and Dialog. |
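The following sketch assembles a StartRequestPayload as a dict. It assumes that ResourceReference carries uri and type fields, with APPLICATION_MODEL as the type; the model URN and the session variable name are hypothetical placeholders, not values from a real Mix project.

```python
import json
from typing import Any


def start_payload(model_uri: str, session_vars: dict[str, Any],
                  suppress_logs: bool = False) -> dict[str, Any]:
    """Build a StartRequestPayload-shaped dict."""
    return {
        # Assumption: ResourceReference has uri and type fields.
        "model_ref": {"uri": model_uri, "type": "APPLICATION_MODEL"},
        # Sent as a google.protobuf.Struct, so keys and values must be JSON-like.
        "data": session_vars,
        # True disables logging for ASR, NLU, TTS, and Dialog.
        "suppress_log_user_data": suppress_logs,
    }


payload = start_payload(
    "urn:nuance-mix:tag:model/MY_APP_ID/mix.dialog",  # hypothetical model URN
    {"user_name": "Alex"},                             # hypothetical session variable
    suppress_logs=True,
)
print(json.dumps(payload, indent=2))
```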

StartResponsePayload

Payload returned after the Start method is called. If a session ID is not provided in the request, a new one is generated and should be used for subsequent calls.

| Field | Type | Description |
|---|---|---|
| session_id | string | Session ID to use for subsequent calls. |
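Because the session ID comes back in StartResponsePayload, the client has to hold on to it for later calls. The DialogSession class below is a hypothetical client-side helper, and the shape of the Execute request it builds (a top-level session_id plus payload.user_input) is an assumption for illustration; check it against the ExecuteRequest definition.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class DialogSession:
    """Hypothetical client-side holder for the session ID returned by Start."""
    session_id: Optional[str] = None

    def on_start_response(self, start_payload: dict[str, Any]) -> None:
        # Keep the returned ID; if StartRequest sent none, this is the generated one.
        self.session_id = start_payload["session_id"]

    def execute_request(self, user_text: str) -> dict[str, Any]:
        """Build an Execute request that reuses the stored session ID."""
        if self.session_id is None:
            raise RuntimeError("call Start before Execute")
        # Assumed request shape, for illustration only.
        return {
            "session_id": self.session_id,
            "payload": {"user_input": {"user_text": user_text}},
        }


session = DialogSession()
session.on_start_response({"session_id": "example-session-id"})
request = session.execute_request("I want a large latte")
```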

UpdateRequestPayload

Payload sent with the Update request.

| Field | Type | Description |
|---|---|---|
| data | google.protobuf.Struct | Map of key-value pairs of session variables to update. |
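Because data is a google.protobuf.Struct, only JSON-like values can be sent: strings, numbers, booleans, null, lists, and nested objects with string keys. This hypothetical pre-flight check rejects other Python types before an Update call. Struct stores every number as a double, so very large integers can lose precision.

```python
from typing import Any


def check_struct_value(value: Any, path: str = "data") -> None:
    """Raise TypeError if value cannot be stored in a google.protobuf.Struct."""
    if value is None or isinstance(value, (str, bool, int, float)):
        return  # null, string, bool, or number (numbers are stored as doubles)
    if isinstance(value, (list, tuple)):
        for index, item in enumerate(value):
            check_struct_value(item, f"{path}[{index}]")
        return
    if isinstance(value, dict):
        for key, item in value.items():
            if not isinstance(key, str):
                raise TypeError(f"{path}: Struct keys must be strings, got {key!r}")
            check_struct_value(item, f"{path}.{key}")
        return
    raise TypeError(f"{path}: {type(value).__name__} is not a Struct value type")


def update_payload(session_vars: dict[str, Any]) -> dict[str, Any]:
    """Build an UpdateRequestPayload-shaped dict after validating its data map."""
    if not isinstance(session_vars, dict):
        raise TypeError("data must be a dict of session variables")
    check_struct_value(session_vars)
    return {"data": session_vars}


# Hypothetical session variables.
payload = update_payload({"user_name": "Alex", "loyalty_points": 120,
                          "preferences": {"milk": ["oat", "soy"]}})
```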

TtsParamsv1

Parameters to be forwarded to the TTS service. See Step 4b. Interact with the user (using audio) for details.

| Field | Type | Description |
|---|---|---|
| audio_params | nuance.tts.v1.AudioParameters | Output audio parameters, such as encoding and volume. See the TTSaaS AudioParameters documentation for details. |
| voice | nuance.tts.v1.Voice | The voice to use for audio synthesis. See the TTSaaS Voice documentation for details. |

UserInput

Provides input to the Dialog engine. The client application sends one of the following: the text collected from the user, to be interpreted by Mix; an interpretation or speech recognition result obtained externally; or the value of an interactive element selected by the user.

Note: Provide only one of the following fields: user_text, interpretation, selected_item, nluaas_interpretation, asraas_result.

| Field | Type | Description |
|---|---|---|
| One of: | | |
| user_text | string | Text collected from the end user. |
| interpretation | UserInput.Interpretation | Interpretation that was done externally (for example, Nuance Recognizer for VoiceXML). This can be used for simple interpretations that include entities with string values only. Use nluaas_interpretation for interpretations that include complex entities. |
| selected_item | Selectable.SelectableItem.SelectedValue | Value of the element selected by the end user. |
| nluaas_interpretation | nuance.nlu.v1.InterpretResult | Interpretation that was done externally (for example, Nuance Recognizer for VoiceXML), provided in the NLUaaS InterpretResult format. See Interpreting text user input for an example. Note that DLGaaS currently supports only single-intent interpretations. |
| asraas_result | nuance.asr.v1.Result | Speech recognition that was done externally, provided in the ASRaaS Result format. |
| input_mode | string | Optional. Input mode, used for reporting. Current values are dtmf and voice. Applies to user_text and nluaas_interpretation input only. |
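A sketch of a helper that enforces the one-of rule when UserInput is assembled as a dict. With generated protobuf classes, setting one member of a oneof already clears the others, so a check like this matters mostly for dict- or JSON-based clients. The helper name and the decision to raise an error for a misplaced input_mode are illustrative choices, not documented service behavior.

```python
from typing import Any, Optional

# Members of the UserInput oneof; exactly one may be set.
ONEOF_FIELDS = ("user_text", "interpretation", "selected_item",
                "nluaas_interpretation", "asraas_result")
# input_mode applies to these inputs only.
INPUT_MODE_FIELDS = ("user_text", "nluaas_interpretation")


def make_user_input(input_mode: Optional[str] = None, **fields: Any) -> dict[str, Any]:
    """Build a UserInput-shaped dict, enforcing the one-of rule."""
    unknown = set(fields) - set(ONEOF_FIELDS)
    if unknown:
        raise TypeError(f"unknown UserInput fields: {sorted(unknown)}")
    chosen = [name for name in ONEOF_FIELDS if fields.get(name) is not None]
    if len(chosen) != 1:
        raise ValueError(f"set exactly one of {ONEOF_FIELDS}; got {chosen}")
    field = chosen[0]
    user_input: dict[str, Any] = {field: fields[field]}
    if input_mode is not None:
        if field not in INPUT_MODE_FIELDS:
            raise ValueError("input_mode applies only to user_text and nluaas_interpretation")
        user_input["input_mode"] = input_mode
    return user_input


text_input = make_user_input(user_text="a large latte", input_mode="voice")
```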

UserInput.Interpretation

Sends interpretation data.

| Field | Type | Description |
|---|---|---|
| confidence | float | Required. Value from 0 to 1 that indicates the confidence of the interpretation. |
| input_mode | string | Optional. Input mode. Current values are dtmf and voice, but the input mode is not limited to these values. |
| utterance | string | Raw collected text. |
| data | UserInput.Interpretation.DataEntry | Repeated. Data from the interpretation of intents and entities. For example, INTENT:BILL_PAY or AMOUNT:100. |
| slot_literals | UserInput.Interpretation.SlotLiteralsEntry | Repeated. Slot literals from the interpretation of the entities. The slot literal provides the exact words used by the user. For example, AMOUNT: One hundred dollars. |
| slot_formatted_literals | UserInput.Interpretation.SlotFormattedLiteralsEntry | Repeated. Slot formatted literals from the interpretation of the entities. |
| slot_confidences | UserInput.Interpretation.SlotConfidencesEntry | Repeated. Slot confidences from the interpretation of the entities. |
| alternative_interpretations | UserInput.Interpretation | Repeated. Alternative interpretations possible from the interaction, that is, the n-best list. |
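When an external recognizer returns an n-best list, the top hypothesis can fill the Interpretation fields and the rest can go into alternative_interpretations. The DataEntry, SlotLiteralsEntry, and similar key/value types suggest protobuf map fields, which generated Python classes expose like dicts; here they are plain dicts. The input hypothesis format and the make_interpretation helper are hypothetical, and sorting by confidence is a design choice of this sketch.

```python
from typing import Any


def _to_interpretation(hyp: dict[str, Any], input_mode: str) -> dict[str, Any]:
    """Map one hypothetical recognizer hypothesis onto UserInput.Interpretation fields."""
    confidence = float(hyp["confidence"])
    if not 0.0 <= confidence <= 1.0:
        raise ValueError(f"confidence must be between 0 and 1, got {confidence}")
    return {
        "confidence": confidence,
        "input_mode": input_mode,
        "utterance": hyp["utterance"],
        # Map fields (DataEntry, SlotLiteralsEntry, ...) become plain dicts.
        "data": dict(hyp.get("data", {})),
        "slot_literals": dict(hyp.get("literals", {})),
        "slot_confidences": dict(hyp.get("slot_confidences", {})),
    }


def make_interpretation(nbest: list[dict[str, Any]], input_mode: str = "voice") -> dict[str, Any]:
    """Best hypothesis fills the message; the others become alternative_interpretations."""
    if not nbest:
        raise ValueError("the n-best list is empty")
    ranked = sorted(nbest, key=lambda hyp: hyp["confidence"], reverse=True)
    best = _to_interpretation(ranked[0], input_mode)
    best["alternative_interpretations"] = [
        _to_interpretation(hyp, input_mode) for hyp in ranked[1:]
    ]
    return best


# Hypothetical recognizer output, unsorted.
nbest = [
    {"confidence": 0.21, "utterance": "pay one hundred collars",
     "data": {"INTENT": "BILL_PAY"}},
    {"confidence": 0.62, "utterance": "pay one hundred dollars",
     "data": {"INTENT": "BILL_PAY", "AMOUNT": "100"},
     "literals": {"AMOUNT": "one hundred dollars"},
     "slot_confidences": {"AMOUNT": 0.9}},
]
interpretation = make_interpretation(nbest)
```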

UserInput.Interpretation.DataEntry

Data entry from interpretation of user input.

| Field | Type | Description |
|---|---|---|
| key | string | Key of the data. |
| value | string | Value of the data. |

UserInput.Interpretation.SlotConfidencesEntry

Slot confidence entry from interpretation of user input.

| Field | Type | Description |
|---|---|---|
| key | string | Name of the entity. |
| value | float | Value from 0 to 1 that indicates the confidence of the interpretation for this entity. |

UserInput.Interpretation.SlotLiteralsEntry

Slot literal entry from interpretation of user input.

| Field | Type | Description |
|---|---|---|
| key | string | Name of the entity. |
| value | string | Literal value of the entity. |

UserInput.Interpretation.SlotFormattedLiteralsEntry

Slot formatted literal entry from interpretation of user input.

| Field | Type | Description |
|---|---|---|
| key | string | Name of the entity. |
| value | string | Formatted literal value of the entity. |

View

Specifies view details for this action.

| Field | Type | Description |
|---|---|---|
| id | string | Class or CSS defined for the view details in the node. |
| name | string | Type defined for the view details in the node. |
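How a client applies view details is up to the application. As one hypothetical example, a web client might use id as the CSS class and name as a data attribute on the element that shows the message. The render_with_view helper and the data-view-type attribute are invented for illustration.

```python
from html import escape
from typing import Optional


def render_with_view(text: str, view: Optional[dict[str, str]] = None) -> str:
    """Wrap message text in a <div>; View.id becomes the class, View.name a data attribute."""
    body = escape(text)
    if not view:
        return f"<div>{body}</div>"
    css_class = escape(view.get("id", ""))
    view_type = escape(view.get("name", ""))
    return f'<div class="{css_class}" data-view-type="{view_type}">{body}</div>'


html_out = render_with_view("Your order is ready", {"id": "confirmation", "name": "card"})
```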

Scalar value types

The data types in the proto files are mapped to equivalent types in the generated client stub files.
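For Python clients, the protobuf Python mapping of proto3 scalar types can be written out as a dict. One practical consequence: confidence fields in this API are declared as float, which is 32-bit, so values sent in them carry only 32-bit precision. The sketch simulates that rounding with the standard struct module; as_proto_float is a hypothetical helper, not part of the generated code.

```python
import struct

# Python types used by generated protobuf code for proto3 scalar types.
PROTO_TO_PYTHON = {
    "double": float, "float": float,
    "int32": int, "int64": int, "uint32": int, "uint64": int,
    "sint32": int, "sint64": int, "fixed32": int, "fixed64": int,
    "sfixed32": int, "sfixed64": int,
    "bool": bool, "string": str, "bytes": bytes,
}


def as_proto_float(value: float) -> float:
    """Round a 64-bit Python float to the 32-bit precision of a proto float field."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


confidence = as_proto_float(0.9)
print(PROTO_TO_PYTHON["float"].__name__, confidence)
```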
